Cross-scene pedestrian search method based on deep learning

A deep-learning search method, applied in the information field, that addresses weak feature robustness and low search accuracy in practical applications. It reduces the loss of local features, improves search accuracy in practice, and yields more robust features.


Embodiment Construction

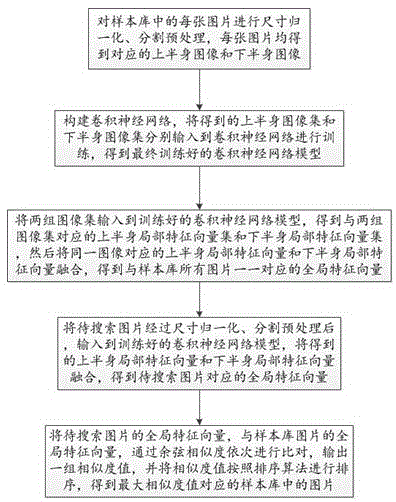

[0017] The present invention provides a cross-scene pedestrian search method based on deep learning. First, each image is segmented according to its content, and a deep network structure suited to pedestrian search is constructed. The processed images are then used to train the network, yielding a trained model. Finally, ranking results are output according to a ranking algorithm, achieving the goal of searching for pedestrians across scenes.

[0018] Referring to Figure 1, the specific method is as follows:

[0019] Step S101: Construct a sample library, and perform size normalization and segmentation preprocessing on each picture in the sample library to obtain a corresponding upper-body image and lower-body image for each picture. After this processing, the sample library comprises two image sets: the upper-body image set and the lower-body image set.
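The preprocessing in Step S101 can be illustrated with a minimal sketch. The text does not state the normalized size, the resize method, or where the upper/lower boundary falls, so the 128x64 target size, the nearest-neighbour resize, and the mid-height split (`split_ratio=0.5`) below are all assumptions chosen for illustration, as are the function names.

```python
import numpy as np


def resize_nearest(image, height, width):
    """Nearest-neighbour resize of an (H, W, C) array to (height, width, C).

    The resize method is an assumption; the source only says
    "size normalization".
    """
    src_h, src_w = image.shape[:2]
    # Map each target row/column back to a source row/column.
    rows = np.arange(height) * src_h // height
    cols = np.arange(width) * src_w // width
    return image[rows[:, None], cols]


def split_upper_lower(image, height=128, width=64, split_ratio=0.5):
    """Normalize one pedestrian image and cut it into upper and lower parts.

    height, width and split_ratio are hypothetical defaults, not values
    taken from the source.
    """
    normalized = resize_nearest(image, height, width)
    cut = int(round(height * split_ratio))
    return normalized[:cut], normalized[cut:]


def build_sample_sets(images, **kwargs):
    """Turn a list of images into an upper-body set and a lower-body set."""
    upper_set, lower_set = [], []
    for image in images:
        upper, lower = split_upper_lower(image, **kwargs)
        upper_set.append(upper)
        lower_set.append(lower)
    # Size normalization makes every crop the same shape, so they stack.
    return np.stack(upper_set), np.stack(lower_set)
```

Calling `build_sample_sets` on a list of differently sized pedestrian crops returns two arrays with matching first dimensions, one entry per input picture, which corresponds to the two image sets the step describes. A fixed-ratio cut is only the simplest stand-in for content-based segmentation.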

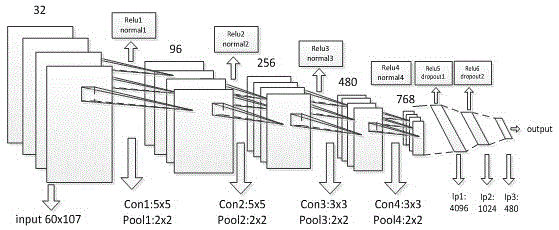

[0020] Step S102: Construct a convolutional neural network, input the upper body image set and lo...
