Cache replacement method in a heterogeneous memory environment

A cache replacement method for heterogeneous memory, applied in the field of computer science. It addresses two problems: cache miss costs are inconsistent across memory types, and the cache replacement algorithm affects cache usage efficiency. The aim is to improve cache usage efficiency.


Embodiment 1

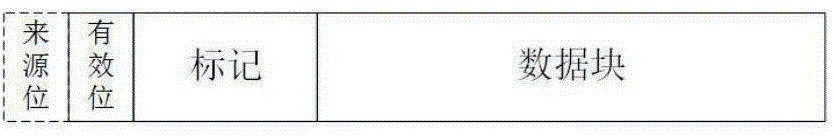

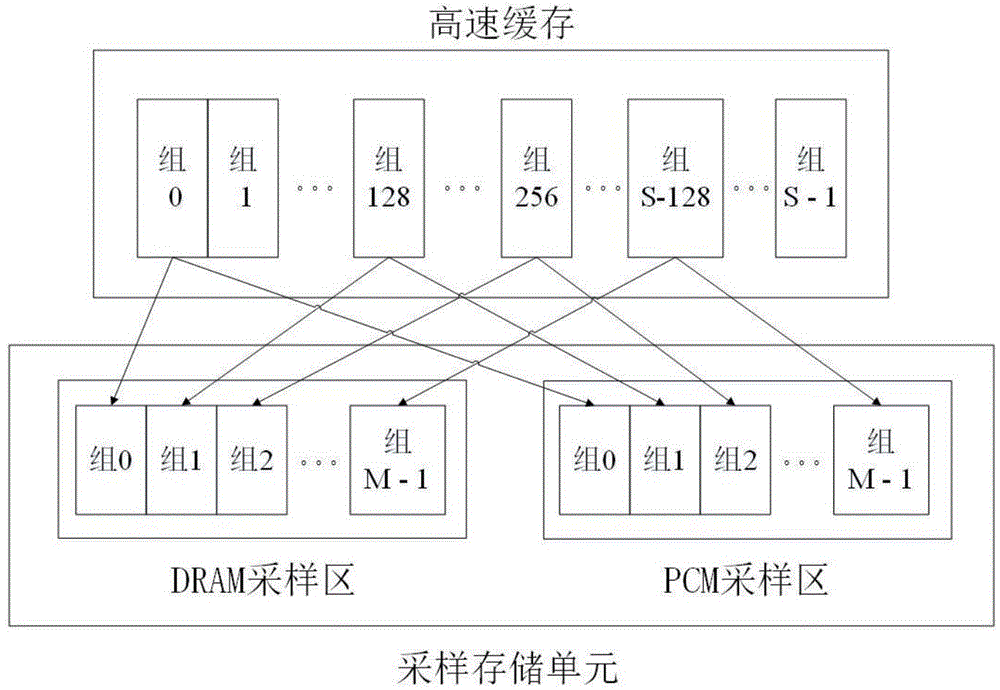

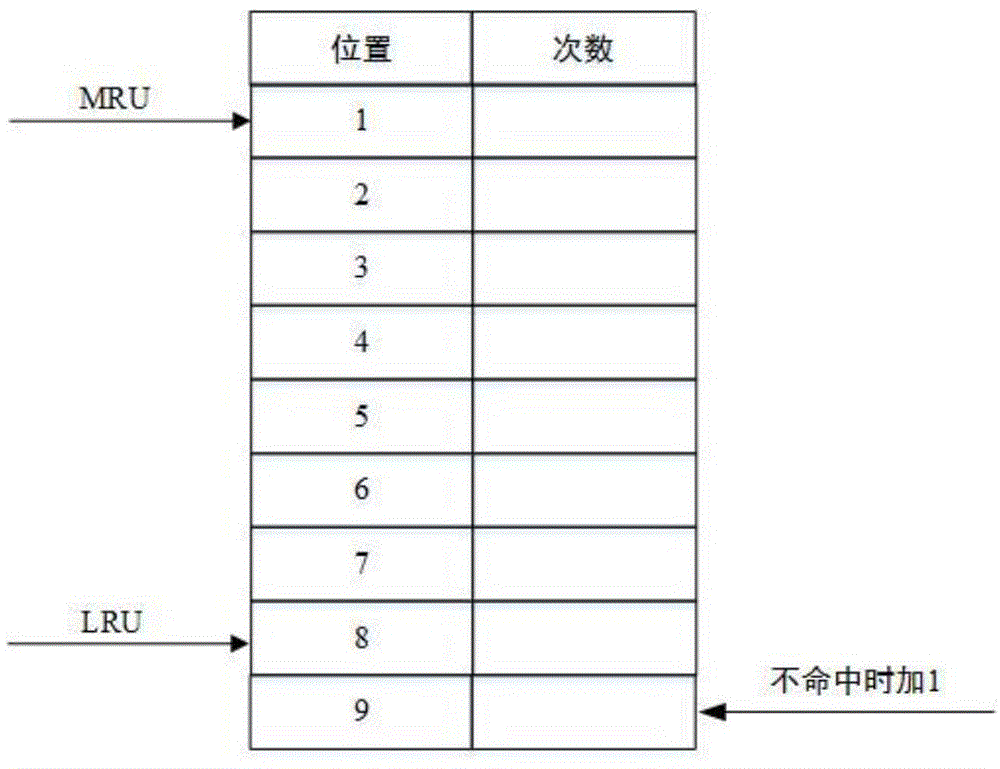

[0053] This embodiment describes a cache replacement method for a heterogeneous memory environment. As shown in Figure 1, the method adds a source flag to the cache line hardware structure to mark whether the data in the cache line comes from DRAM or PCM. As shown in Figure 2, the method also adds a sampling storage unit to the CPU to collect statistics on data reuse distance. Figure 3 shows the format of the reuse distance statistics table used by the method. As shown in Figure 4, the method further comprises three sub-methods: a sampling sub-method, an equivalent position calculation sub-method, and a replacement sub-method. The equivalent position calculation sub-method computes an equivalent position, and the replacement sub-method determines the cache line that needs to be replaced.
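To make the idea of reuse distance statistics concrete, here is a minimal Python sketch. It is not the patented sampling sub-method. It assumes that reuse distance means the number of distinct other blocks accessed between two consecutive accesses to the same block, and it keeps one histogram per memory source. The function names `reuse_distances` and `histogram_by_source`, the `source_of` callback, and the table layout are all hypothetical; the actual table format is whatever the embodiment's figure defines.

```python
from collections import Counter, defaultdict

def reuse_distances(trace):
    """Yield (block, distance) for every re-access in trace.

    Assumption: distance = number of distinct other blocks accessed
    since the previous access to the same block.
    """
    last_seen = {}  # block -> index of its most recent access
    for i, block in enumerate(trace):
        if block in last_seen:
            between = trace[last_seen[block] + 1 : i]
            yield block, len(set(between))
        last_seen[block] = i

def histogram_by_source(trace, source_of):
    """Count reuse distances separately per source (e.g. "DRAM" or "PCM")."""
    table = defaultdict(Counter)
    for block, dist in reuse_distances(trace):
        table[source_of(block)][dist] += 1
    return table
```

Keeping separate histograms per source is one simple way to reflect that a miss on PCM-backed data may cost more than a miss on DRAM-backed data; how the method actually weighs the two is not stated in the text above.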

[0054] The source flag is denoted I and is set as follows: when a cache miss occurs and data must be read from memory, the received data block is determine...
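The source flag can be illustrated with a short Python sketch. This is an illustration under stated assumptions, not the patent's hardware design. The encoding (0 for DRAM, 1 for PCM), the `CacheLine` fields, and the use of a physical address boundary `PCM_BASE` to decide the source are all hypothetical, because the paragraph above is cut off before it states how the source of a block is determined.

```python
from dataclasses import dataclass

DRAM, PCM = 0, 1          # hypothetical encoding of the source flag I
PCM_BASE = 0x8000_0000    # hypothetical: addresses at or above this map to PCM

@dataclass
class CacheLine:
    tag: int = 0
    valid: bool = False
    source: int = DRAM    # the added source flag I

def source_of(addr: int) -> int:
    """Assumed rule: classify a physical address by a fixed boundary."""
    return PCM if addr >= PCM_BASE else DRAM

def fill_on_miss(line: CacheLine, addr: int, line_bits: int = 6) -> None:
    """On a miss, install the block at addr and record where it came from."""
    line.tag = addr >> line_bits   # simplified: store the block address as the tag
    line.valid = True
    line.source = source_of(addr)
```

A replacement policy could then read `line.source` when choosing a victim, for example to prefer evicting DRAM-backed lines whose misses are cheaper to serve.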
