Binocular vision obstacle detection method based on three-dimensional point cloud segmentation

A technology for obstacle detection based on 3D point clouds, applied in image analysis, image data processing, instruments, etc. It addresses problems such as low accuracy, methods that cannot be applied directly, and complex application environments, and achieves high reliability and practicability.


Embodiment Construction

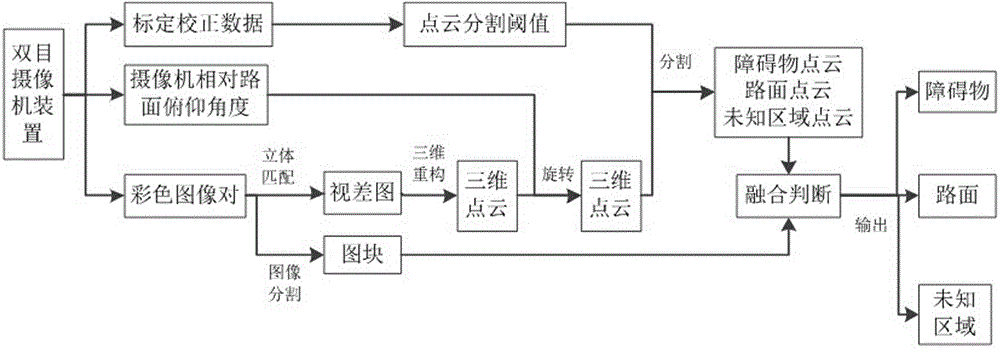

[0028] An automatic obstacle detection method based on 3D point cloud segmentation fused with color information, as shown in Figure 1, includes the following steps:

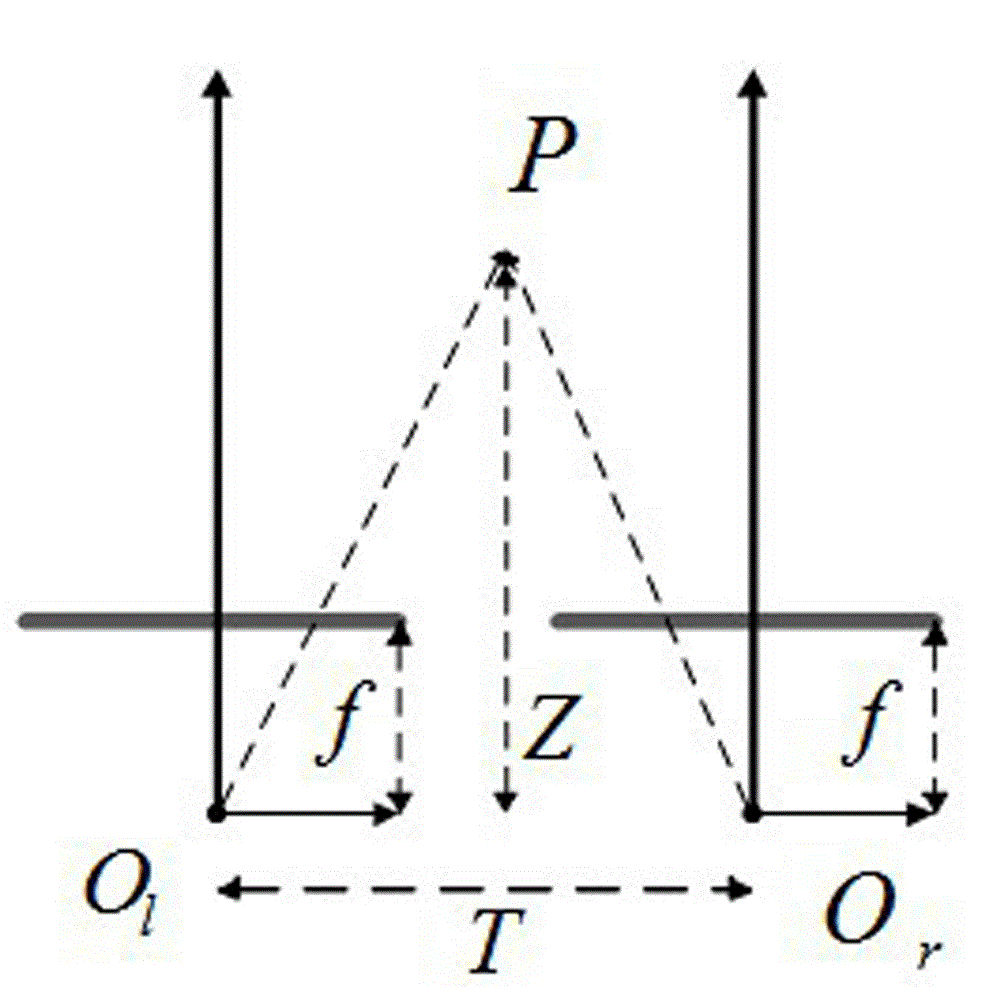

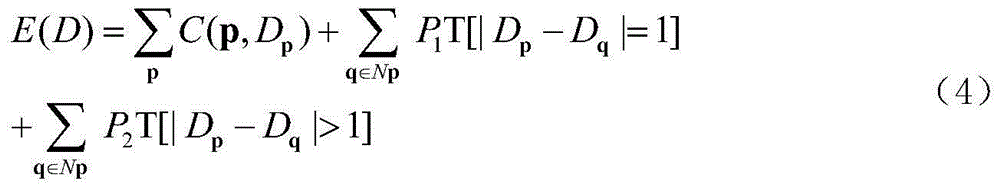

[0029] Step 1: Obtain two color images from two cameras at different positions. Calibrate the binocular camera with a stereo calibration method to compute the intrinsic and extrinsic parameters of the two cameras and their relative position. Using these parameters, remove the distortion of both cameras and align the images by row (or column) so that the imaging origin coordinates of the two color images coincide, yielding a rectified binocular color view. The pitch angle and height of the camera relative to the road surface are obtained from a sensor or set in advance. The relative position and focal length of the two cameras are fixed; that is, once calibrated, they do not change. The pitch angle and height of the ...
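Once the views are rectified and the baseline and focal length are fixed, a pixel's disparity determines its depth through the standard relation Z = f·B/d, and the known pitch angle and camera height then place that 3D point relative to the road surface. The sketch below illustrates this under stated assumptions: a pinhole model, rectified images sharing one focal length `f` (in pixels) and principal point (`cx`, `cy`), camera axes X right / Y down / Z forward, and pitch measured as a downward tilt. The names `StereoRig`, `triangulate`, and `camera_to_road` are hypothetical and not taken from the patent, and the disparity value is assumed to come from a separate stereo-matching step that the source does not describe here.

```python
import math
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Hypothetical parameters of a rectified binocular rig (assumed, not from the source)."""
    f: float         # focal length in pixels, shared by both rectified cameras
    cx: float        # principal point x (pixels), shared after rectification
    cy: float        # principal point y (pixels)
    baseline: float  # distance between the two optical centres (metres)
    pitch: float     # downward tilt of the camera relative to the road (radians)
    height: float    # camera height above the road surface (metres)


def triangulate(rig: StereoRig, u: float, v: float, disparity: float):
    """Back-project left-image pixel (u, v) with the given disparity.

    Returns (x, y, z) in the camera frame: X right, Y down, Z forward.
    """
    if disparity <= 0:
        raise ValueError("disparity must be positive")
    z = rig.f * rig.baseline / disparity  # depth from Z = f * B / d
    x = (u - rig.cx) * z / rig.f
    y = (v - rig.cy) * z / rig.f
    return x, y, z


def camera_to_road(rig: StereoRig, x: float, y: float, z: float):
    """Undo the camera pitch (rotation about X) and reference the point to the road.

    Returns (lateral, forward, height_above_road); the road is assumed flat.
    """
    c, s = math.cos(rig.pitch), math.sin(rig.pitch)
    # Rotate into a level frame (Y still pointing down, Z horizontal forward).
    y_level = c * y + s * z
    z_level = -s * y + c * z
    # The camera sits `height` above the road, and Y points down.
    return x, z_level, rig.height - y_level


if __name__ == "__main__":
    rig = StereoRig(f=700.0, cx=320.0, cy=240.0, baseline=0.12, pitch=0.0, height=1.5)
    # A pixel on the road 10 m ahead: its height above the road should be close to 0.
    point = triangulate(rig, 320.0, 345.0, 8.4)
    print(camera_to_road(rig, *point))
```

In this sketch, points whose height above the road is clearly greater than zero would be candidates for obstacles; how the patent actually segments the point cloud is not covered by this step.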
