Multi-camera cooperative character tracking method in complex scene

A multi-camera tracking technology for complex scenes, applied in image data processing, instruments, closed-circuit television systems, etc. It is intended to solve the problem of targets going undiscovered, improve the effectiveness and reliability of tracking, and ensure effective monitoring.

Examples

Embodiment 1

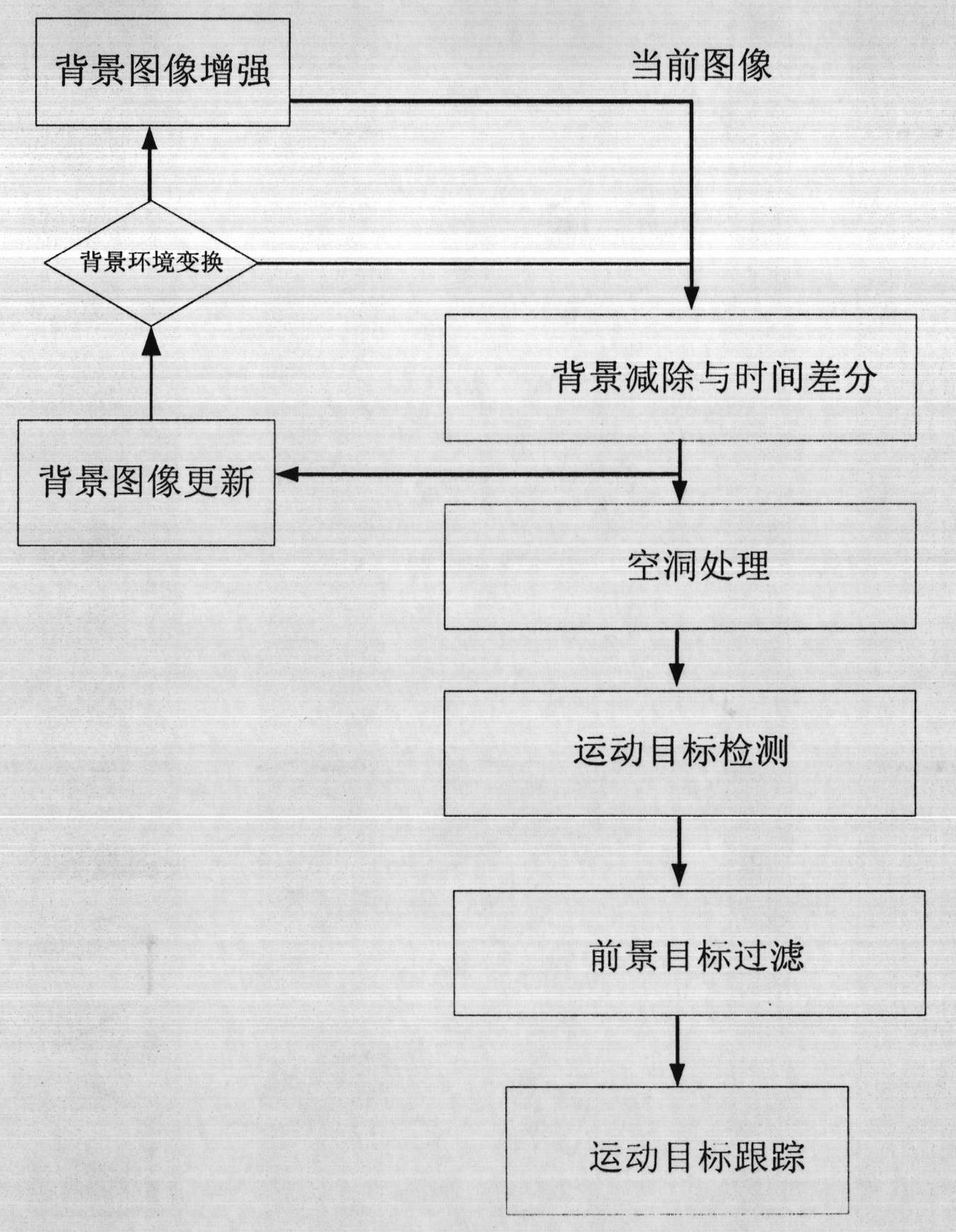

[0036] Figure 1 is a schematic diagram of the operation flow of the present invention. Following the flow in Figure 1, the detection method of the present invention comprises the following steps:

[0037] 1. Moving target monitoring: analyze the surveillance video obtained by each camera and convert the video into a corresponding image sequence. An improved time difference method combined with the background subtraction method is used to establish a background model, and different update strategies are adopted for different regions. First, a binary image is obtained by the time difference method to find the approximate motion area, and the background is updated according to formula (1). The background model is not updated at pixels detected as foreground, but it is updated at relatively stable background pixels, and different update speeds can be adopted according to whether there is a moving target in the detection result, where $B_t(x, y)$ and $B_{t-1}(x, y)$ ...
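The selective update described above can be illustrated with a minimal sketch. Formula (1) is not reproduced in this excerpt, so the running-average update used here is an assumption, as are the function name `update_background`, the threshold `diff_thresh`, and the two learning rates `alpha_idle` and `alpha_busy`. It is not the patent's exact formula.

```python
import numpy as np

def update_background(background, frame, prev_frame, diff_thresh=15.0,
                      alpha_idle=0.10, alpha_busy=0.02):
    """One step of a selective running-average background update.

    All parameter names and default values are hypothetical.
    """
    background = background.astype(np.float64)
    frame = frame.astype(np.float64)
    prev_frame = prev_frame.astype(np.float64)

    # Time difference: binary mask of the approximate motion area.
    motion = np.abs(frame - prev_frame) > diff_thresh
    # Background subtraction: binary mask of foreground pixels.
    foreground = np.abs(frame - background) > diff_thresh

    # Assumed rule: update more slowly while a moving target is present.
    alpha = alpha_busy if motion.any() else alpha_idle

    # Only relatively stable background pixels are updated.
    stable = ~(motion | foreground)
    updated = background.copy()
    updated[stable] = (1.0 - alpha) * background[stable] + alpha * frame[stable]
    return updated, foreground
```

Foreground pixels keep their previous background value, so a target that pauses briefly is not absorbed into the model.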

Embodiment 2

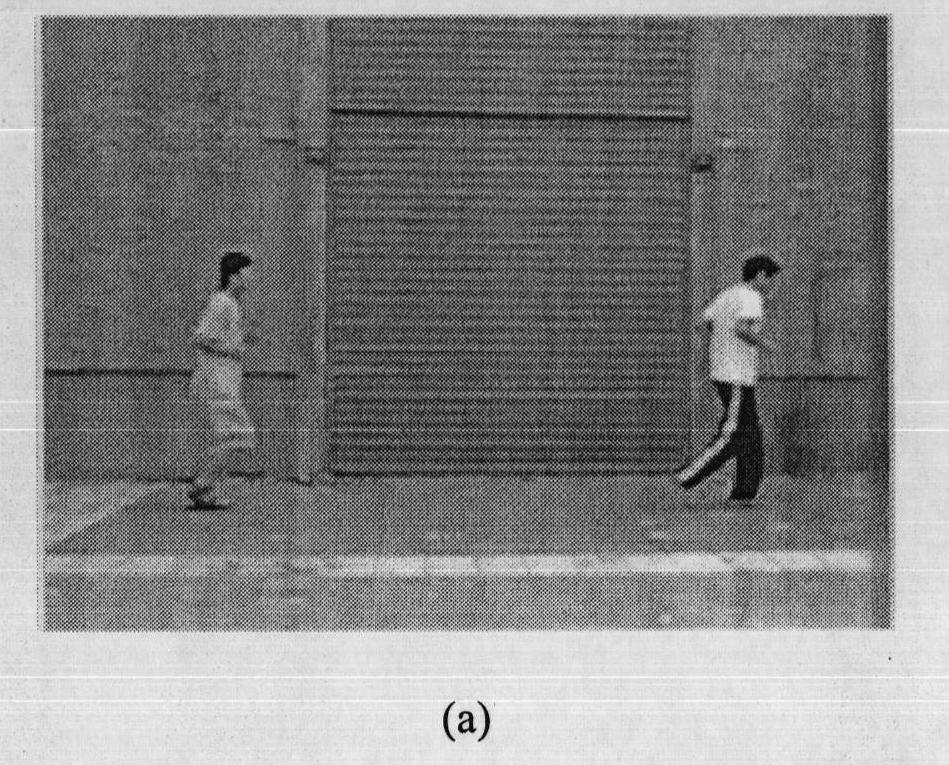

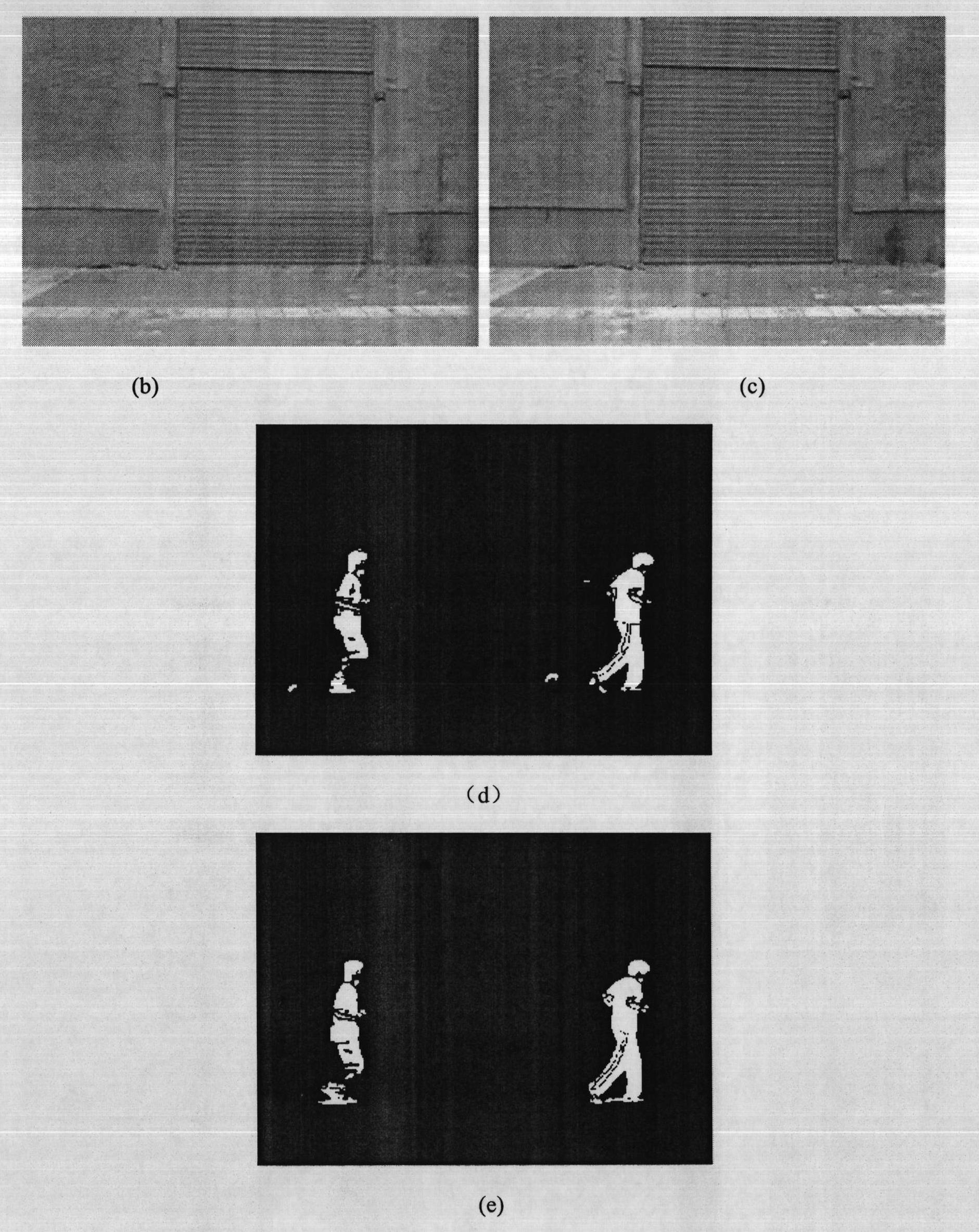

[0043] Traditional surveillance cameras are fixed in one place. Because of day-night changes and variations in ambient light, surveillance videos shot at different times in the same space differ. Video shot in the daytime has sufficient light and a large field of view, and moving targets are obvious and easy to see. In contrast, images shot at night or in cloudy conditions appear blurred because of the uneven distribution of light sources in the scene, which makes the target difficult to track. To adapt the system to different lighting, different climates, and complex backgrounds, robust object detection and tracking methods are needed under these conditions. Based on fuzzy theory, the brightness values of the scene image are converted into a fuzzy matrix, and real-time fuzzy enhancement is performed on this matrix to enhance the dark parts of the image while retaining the original saturation information of each p...
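As a rough illustration of brightening dark regions through a fuzzy membership matrix, the sketch below maps the brightness channel to memberships in [0, 1], applies the fuzzy dilation hedge (square root), and maps back. The paragraph above is truncated, so the choice of operator, the HSV input layout, and the function name `fuzzy_enhance_value` are all assumptions and may differ from the patent's actual enhancement.

```python
import numpy as np

def fuzzy_enhance_value(hsv, passes=1):
    """Brighten dark areas of an HSV image, leaving hue and saturation untouched.

    hsv: float array of shape (H, W, 3) with the V channel in [0, 255].
    The square-root (dilation) operator is an assumed choice.
    """
    out = hsv.astype(np.float64).copy()
    # Fuzzification: brightness -> membership degree in [0, 1].
    mu = np.clip(out[..., 2] / 255.0, 0.0, 1.0)
    for _ in range(passes):
        # Dilation hedge: raises low memberships more than high ones.
        mu = np.sqrt(mu)
    # Defuzzification: membership degree -> brightness.
    out[..., 2] = mu * 255.0
    return out
```

Because only the third channel is modified, the hue and saturation of every pixel are preserved, which matches the stated goal of retaining saturation information.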

Embodiment 3

[0068] In addition, the present invention uses connected-region labeling to identify different objects: each object is independent and does not intersect any other, and regions that intersect are regarded as the same object. The labeling operates on the binary image. Because a binary image has only two colors, black and white, its connected components are generally black or white regions. For each black (white) pixel in the given image, the method judges whether there are black (white) pixels among its adjacent pixels. Assuming that each object in the image is white, scan each pixel in each row from top to bottom in sequence. If the point is white, examine its four adjacent pixels to the left, upper left, top, and upper right. The cases, ordered by the number of white pixels among these neighbors, are as follows:

[0069] 1. 0 white neighbors: assign a new label and consider the point to belong to a new object.
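The remaining cases are not shown in this excerpt. The sketch below fills them in with the standard two-pass labeling approach (copy a neighbor's label, and record an equivalence when neighbors carry different labels), which is an assumption rather than the patent's stated procedure. The function name `label_components` is hypothetical.

```python
import numpy as np

def label_components(binary):
    """Two-pass 8-connected labeling; nonzero pixels are treated as white."""
    rows, cols = binary.shape
    labels = np.zeros((rows, cols), dtype=np.int64)
    parent = [0]  # union-find table; index 0 is reserved for the background

    def find(i):
        # Follow parent links to the representative label, compressing the path.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for r in range(rows):
        for c in range(cols):
            if not binary[r, c]:
                continue
            # Already-scanned neighbors: left, upper left, top, upper right.
            found = []
            for dr, dc in ((0, -1), (-1, -1), (-1, 0), (-1, 1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols and labels[rr, cc]:
                    found.append(int(labels[rr, cc]))
            if not found:
                # No white neighbors: start a new object.
                parent.append(len(parent))
                labels[r, c] = len(parent) - 1
            else:
                # Reuse the smallest label and merge the others into it.
                roots = [find(n) for n in found]
                smallest = min(roots)
                labels[r, c] = smallest
                for root in roots:
                    parent[root] = smallest

    # Second pass: replace each label by a compact final label.
    remap = {}
    for r in range(rows):
        for c in range(cols):
            if labels[r, c]:
                root = find(int(labels[r, c]))
                labels[r, c] = remap.setdefault(root, len(remap) + 1)
    return labels, len(remap)
```

A U-shaped region shows why the second pass is needed: its two arms receive different provisional labels, which are merged only when the scan reaches the bottom row.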
