A cluster GPU resource scheduling system and method

A technology involving resource scheduling and a resource scheduling module, applied in resource allocation, multiprogramming arrangements, etc. It can solve problems such as low efficiency, the inability of a single GPU to carry complex computing tasks, and the inability of GPU cards to be plug-and-play.

Embodiment Construction

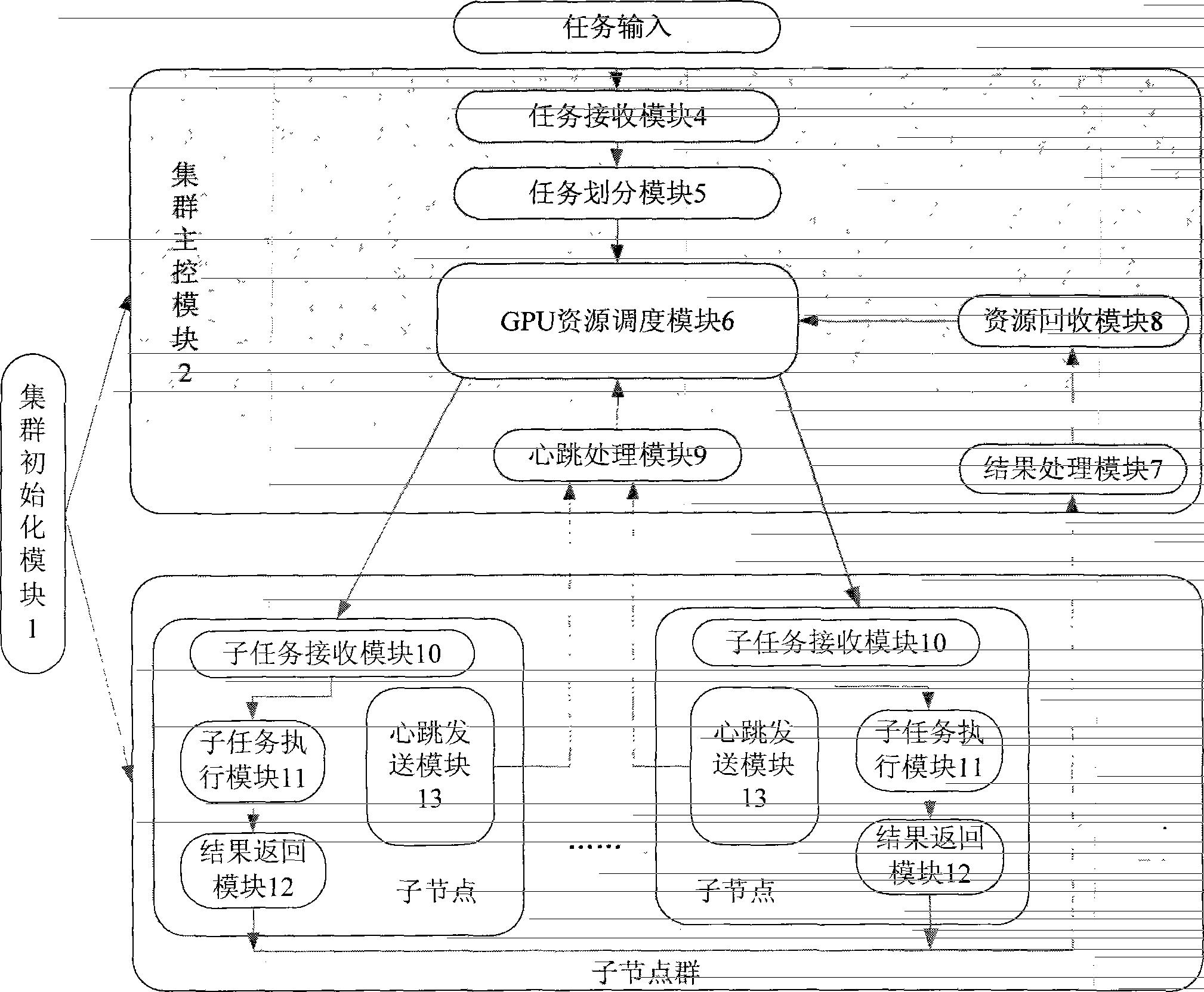

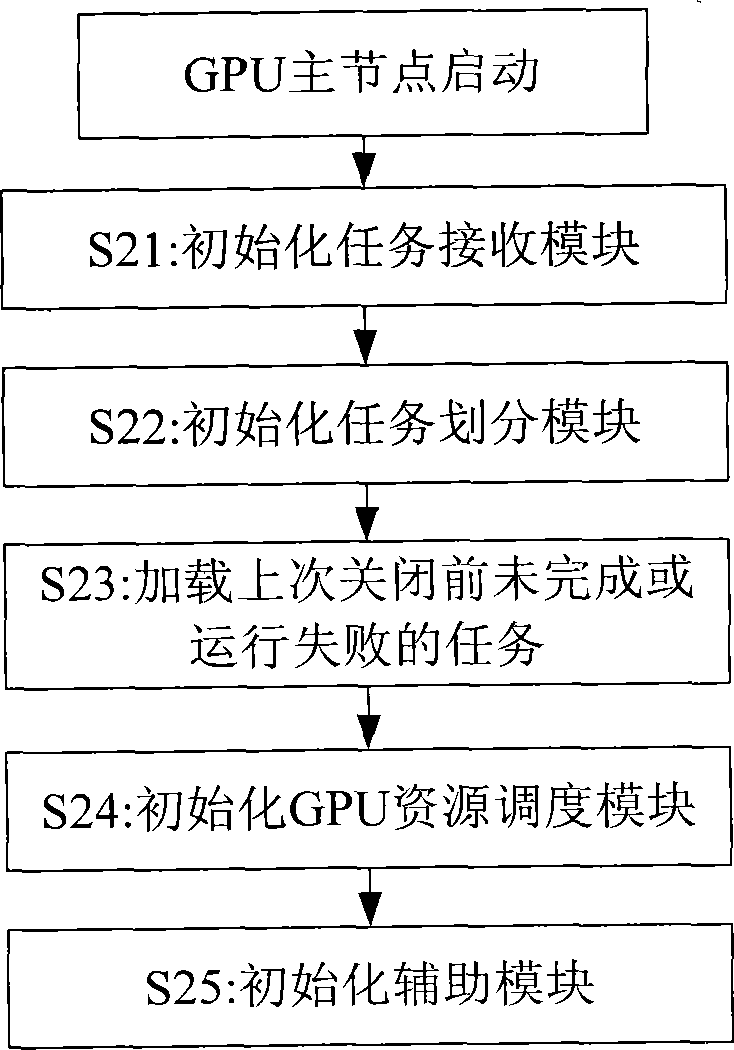

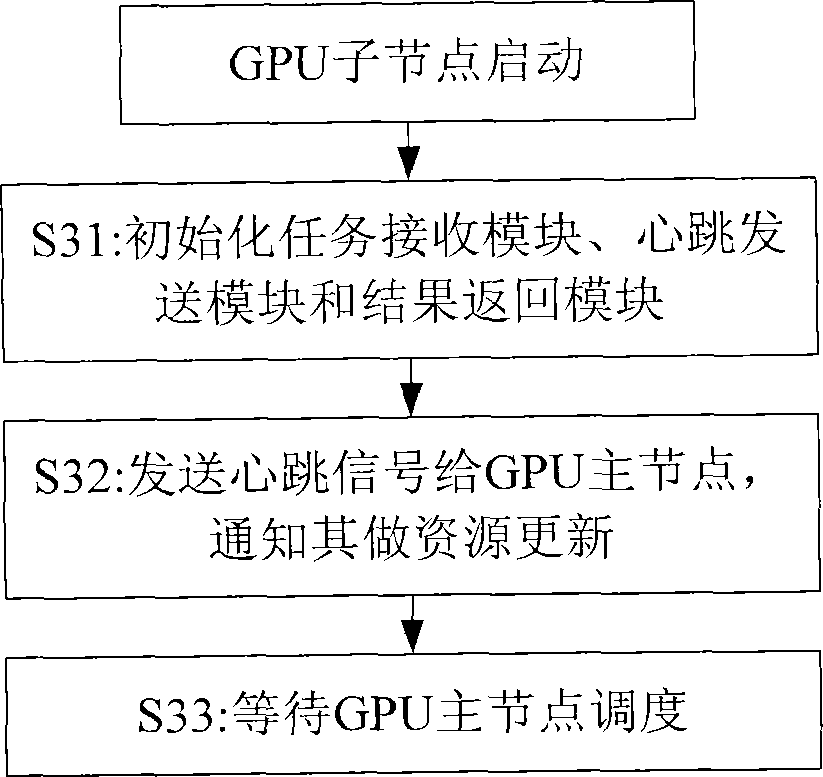

[0040] To solve the problems in the prior art, an embodiment of the present invention provides a cluster GPU resource scheduling system and method. The solution forms all GPU resources into a cluster, and a master node uniformly schedules every child node in the cluster. Each child node only needs to be configured with a unique ID number and its computing power, and to send this information to the master node; the master node then classifies the GPU resources according to the information received from each node. For an input task, the master node first performs a basic (coarse-grained) division of the task and distributes the resulting subtasks to the child nodes, and each scheduled child node further divides its subtask into fine-grained blocks to match the parallel computing mode of the GPU.
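
The registration, classification, and two-level division described in [0040] can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the patented implementation: the names `ChildNode` and `MasterNode`, the tier thresholds used for classification, the rule of splitting work in proportion to reported computing power, and the fixed block size are all hypothetical, because the paragraph does not say how classification or division is actually performed.

```python
from dataclasses import dataclass

# Hypothetical sketch of the scheme in [0040]. The tier thresholds and the
# proportional split rule are illustrative assumptions, not from the patent.


@dataclass
class ChildNode:
    node_id: str          # unique ID number configured on the child node
    compute_power: float  # self-reported computing power (unit assumed, e.g. TFLOPS)

    def split_into_blocks(self, start: int, end: int, block_size: int) -> list[tuple[int, int]]:
        """Fine division: cut the assigned range [start, end) into GPU-sized blocks."""
        return [(s, min(s + block_size, end)) for s in range(start, end, block_size)]


class MasterNode:
    def __init__(self, tiers: dict[str, float]):
        # Classification rule (assumed): tier name -> minimum computing power,
        # checked from the highest threshold down.
        self.tiers = sorted(tiers.items(), key=lambda kv: kv[1], reverse=True)
        self.nodes: dict[str, ChildNode] = {}

    def register(self, node: ChildNode) -> None:
        """A child node sends its ID and computing power to the master."""
        if node.node_id in self.nodes:
            raise ValueError(f"duplicate node ID {node.node_id!r}")
        self.nodes[node.node_id] = node

    def classify(self) -> dict[str, list[str]]:
        """Group registered GPU resources by computing-power tier."""
        groups: dict[str, list[str]] = {name: [] for name, _ in self.tiers}
        for node in self.nodes.values():
            for name, minimum in self.tiers:
                if node.compute_power >= minimum:
                    groups[name].append(node.node_id)
                    break  # nodes below every threshold stay unclassified
        return groups

    def coarse_split(self, total_items: int) -> dict[str, tuple[int, int]]:
        """Coarse division: one contiguous range per node, sized by computing power."""
        nodes = list(self.nodes.values())
        total_power = sum(n.compute_power for n in nodes)
        if not nodes or total_power <= 0:
            raise ValueError("no child nodes with positive computing power")
        ranges: dict[str, tuple[int, int]] = {}
        start = 0
        for i, node in enumerate(nodes):
            if i == len(nodes) - 1:
                end = total_items  # last node absorbs the rounding remainder
            else:
                end = start + int(total_items * node.compute_power / total_power)
            ranges[node.node_id] = (start, end)
            start = end
        return ranges


if __name__ == "__main__":
    master = MasterNode({"high": 20.0, "mid": 10.0, "low": 0.0})
    for nid, power in [("gpu-a", 30.0), ("gpu-b", 15.0), ("gpu-c", 5.0)]:
        master.register(ChildNode(nid, power))

    print(master.classify())
    # {'high': ['gpu-a'], 'mid': ['gpu-b'], 'low': ['gpu-c']}
    for nid, (lo, hi) in master.coarse_split(1000).items():
        # Each scheduled child node refines its share into 32-item blocks.
        print(nid, master.nodes[nid].split_into_blocks(lo, hi, 32)[:2])
```

With the three example nodes, the master assigns the ranges [0, 600), [600, 900), and [900, 1000) of a 1000-item task, and each child node then cuts its range into 32-item blocks. Splitting by reported computing power is only one plausible reading of the classification and basic division steps; a real scheduler might also weigh GPU memory, current load, or task type.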

[0041] Embodiments of the present invention will be described in detail below in conjunction with the accompanying drawings.

[0042] Figure 1 is a schematic structural diagram of a cluster GPU resource scheduling system...
