Management method using a fast non-volatile medium as a cache

A management method that uses a fast non-volatile medium as a cache. It applies to memory systems, electrical digital data processing, and memory addressing, allocation, and relocation, and can solve problems such as data loss.


Embodiment Construction

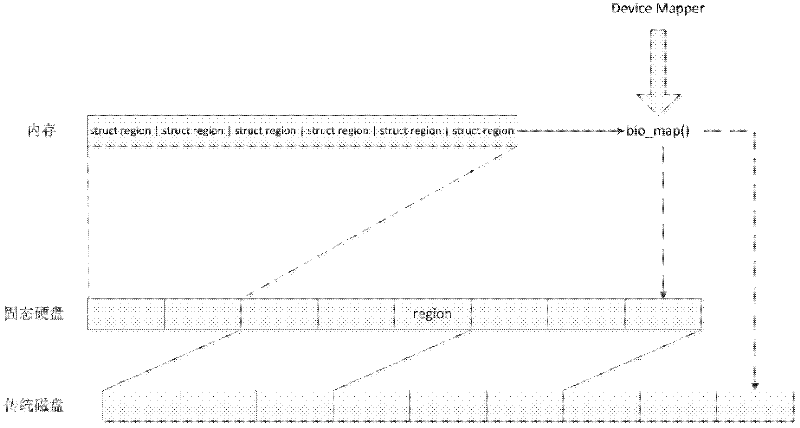

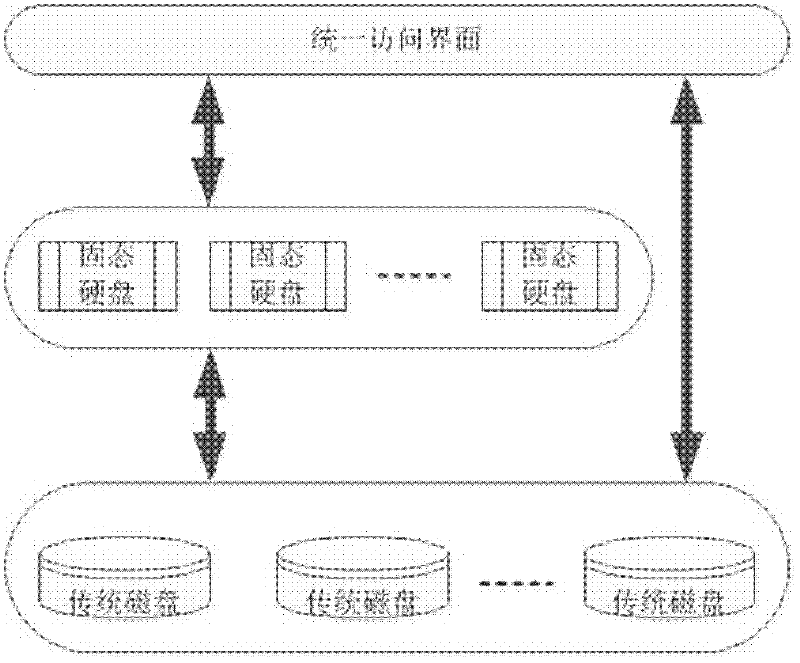

[0015] The present invention uses the Linux Device Mapper mechanism to manage multiple block devices, using high-speed devices as caches for low-speed devices to build a two-level storage system and thereby obtain higher storage performance at lower cost. The overall structure is shown in Figure 1.
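The two-level idea in [0015] can be illustrated with a small user-space model. This is only a sketch under stated assumptions, not the patented kernel implementation: the class name `TwoLevelStore`, the LRU eviction rule, and the write-through policy are all hypothetical choices made for illustration, and the excerpt does not say which policies the invention uses.

```python
from collections import OrderedDict


class TwoLevelStore:
    """Toy model: a small fast cache in front of a large slow block device."""

    def __init__(self, cache_blocks):
        self.cache_blocks = cache_blocks      # capacity of the fast device, in blocks
        self.cache = OrderedDict()            # block number -> data, kept in LRU order
        self.disk = {}                        # stands in for the slow device
        self.hits = 0
        self.misses = 0

    def _install(self, block, data):
        # Insert or refresh a block, evicting the least recently used one if full.
        self.cache[block] = data
        self.cache.move_to_end(block)
        if len(self.cache) > self.cache_blocks:
            self.cache.popitem(last=False)

    def read(self, block):
        if block in self.cache:
            self.hits += 1
            self.cache.move_to_end(block)
            return self.cache[block]
        self.misses += 1
        data = self.disk.get(block, b"\x00")  # unwritten blocks read as zero
        self._install(block, data)
        return data

    def write(self, block, data):
        # Write-through (an assumption): update the slow device and the cache together.
        self.disk[block] = data
        self._install(block, data)


store = TwoLevelStore(cache_blocks=2)
store.write(0, b"a")
store.write(1, b"b")
store.write(2, b"c")          # cache is full, so block 0 is evicted
first = store.read(0)         # miss: served from the slow device
second = store.read(0)        # hit: served from the cache
```

Repeated reads of the same block are served from the fast device after the first miss, which is where the performance gain of the hierarchy comes from when the working set fits in the cache.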

[0016] The invention divides the managed devices into cache devices and disk devices. The cache devices are high-performance solid-state drives; in practice, multiple solid-state drives can be combined into a RAID to improve cache performance. Neither the cache devices nor the disk devices are visible to the user; instead, the invention presents the user with pseudo devices that match the disk devices in number and characteristics. The invention can manage multiple cache devices and disk devices, and cache devices and disk devices have a one-to-many relationship, that is, one cache device can be shared by multip...
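The device relationships in [0016] can be sketched as follows: one pseudo device per hidden disk device, with several pseudo devices sharing a single cache device. The names `CacheDevice` and `PseudoDevice`, the `(disk id, block)` cache key, and the write-through policy are assumptions made for this illustration; the excerpt does not describe the actual data structures, and the model omits cache capacity limits.

```python
class CacheDevice:
    """One fast device shared by several disks; entries are keyed by (disk id, block)."""

    def __init__(self):
        self.blocks = {}


class PseudoDevice:
    """User-visible device standing in for one hidden disk device."""

    def __init__(self, disk_id, size_blocks, cache):
        self.disk_id = disk_id
        self.size_blocks = size_blocks   # same size as the disk it fronts
        self._cache = cache              # shared cache device, hidden from the user
        self._disk = {}                  # stands in for the hidden disk device

    def _check(self, block):
        if not 0 <= block < self.size_blocks:
            raise IndexError("block out of range")

    def write(self, block, data):
        self._check(block)
        self._disk[block] = data                          # write-through (assumption)
        self._cache.blocks[(self.disk_id, block)] = data

    def read(self, block):
        self._check(block)
        key = (self.disk_id, block)
        if key not in self._cache.blocks:
            # Cache miss: fetch from the hidden disk and keep a copy in the cache.
            self._cache.blocks[key] = self._disk.get(block, b"\x00")
        return self._cache.blocks[key]


shared = CacheDevice()
pseudo_a = PseudoDevice("disk-a", size_blocks=8, cache=shared)
pseudo_b = PseudoDevice("disk-b", size_blocks=16, cache=shared)
pseudo_a.write(3, b"from a")
pseudo_b.write(3, b"from b")
```

Including the disk identifier in the cache key keeps block 3 of one disk separate from block 3 of another, so a single cache device can serve several disks without collisions.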
