Cache line flush micro-architectural implementation method and system

A buffer memory and high-speed cache technology, applied in the fields of memory systems, instruments, memory address allocation/relocation, etc.


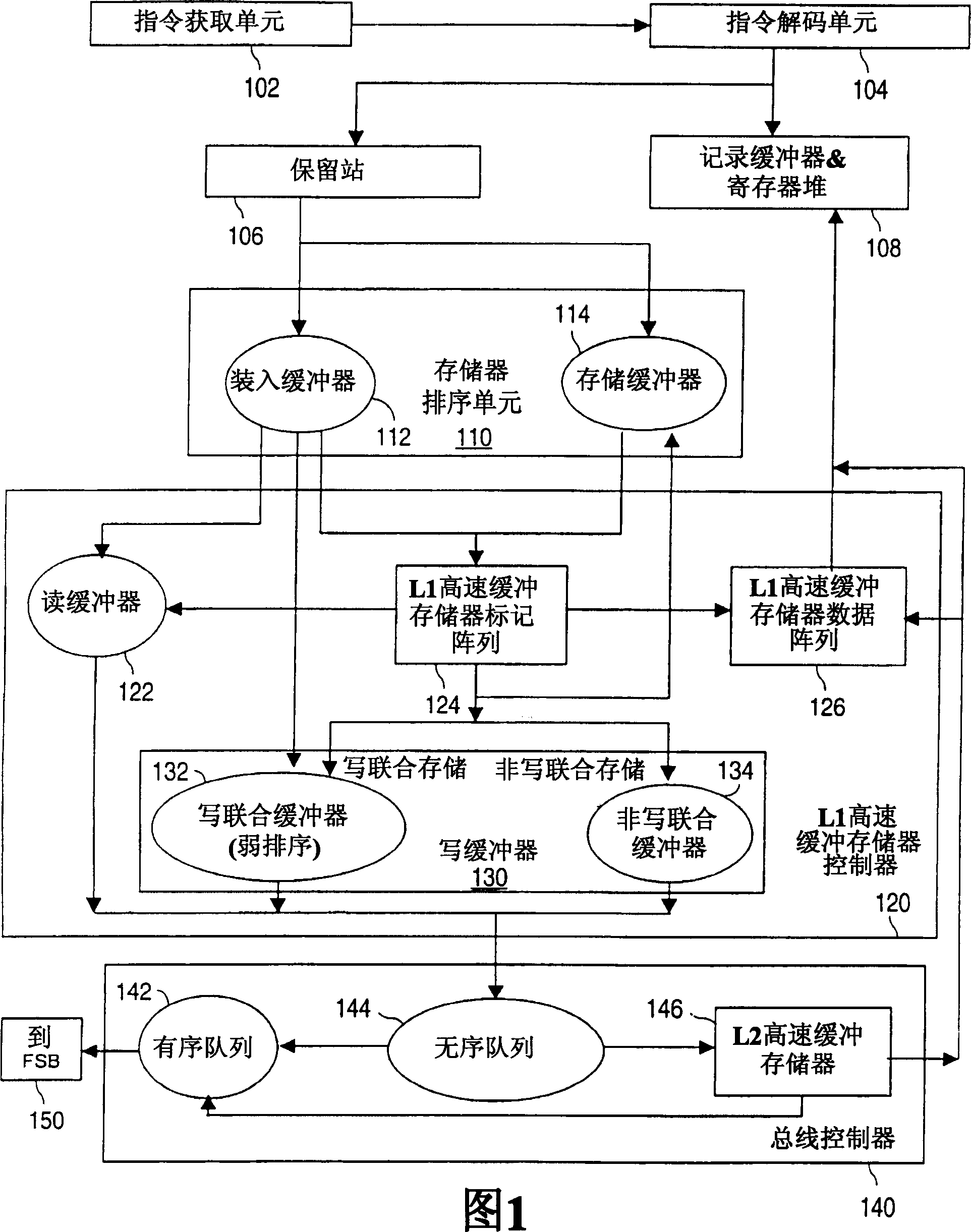

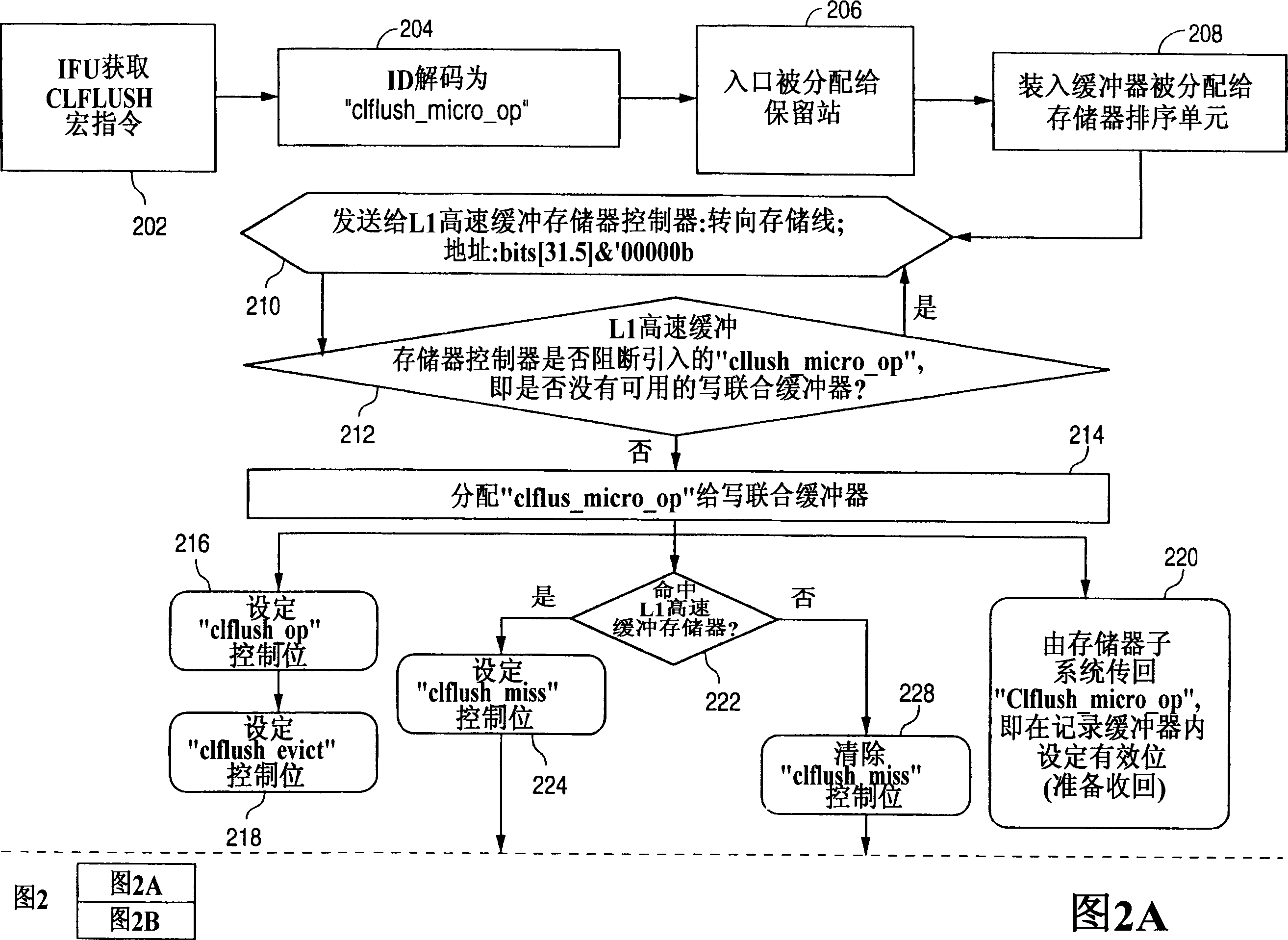

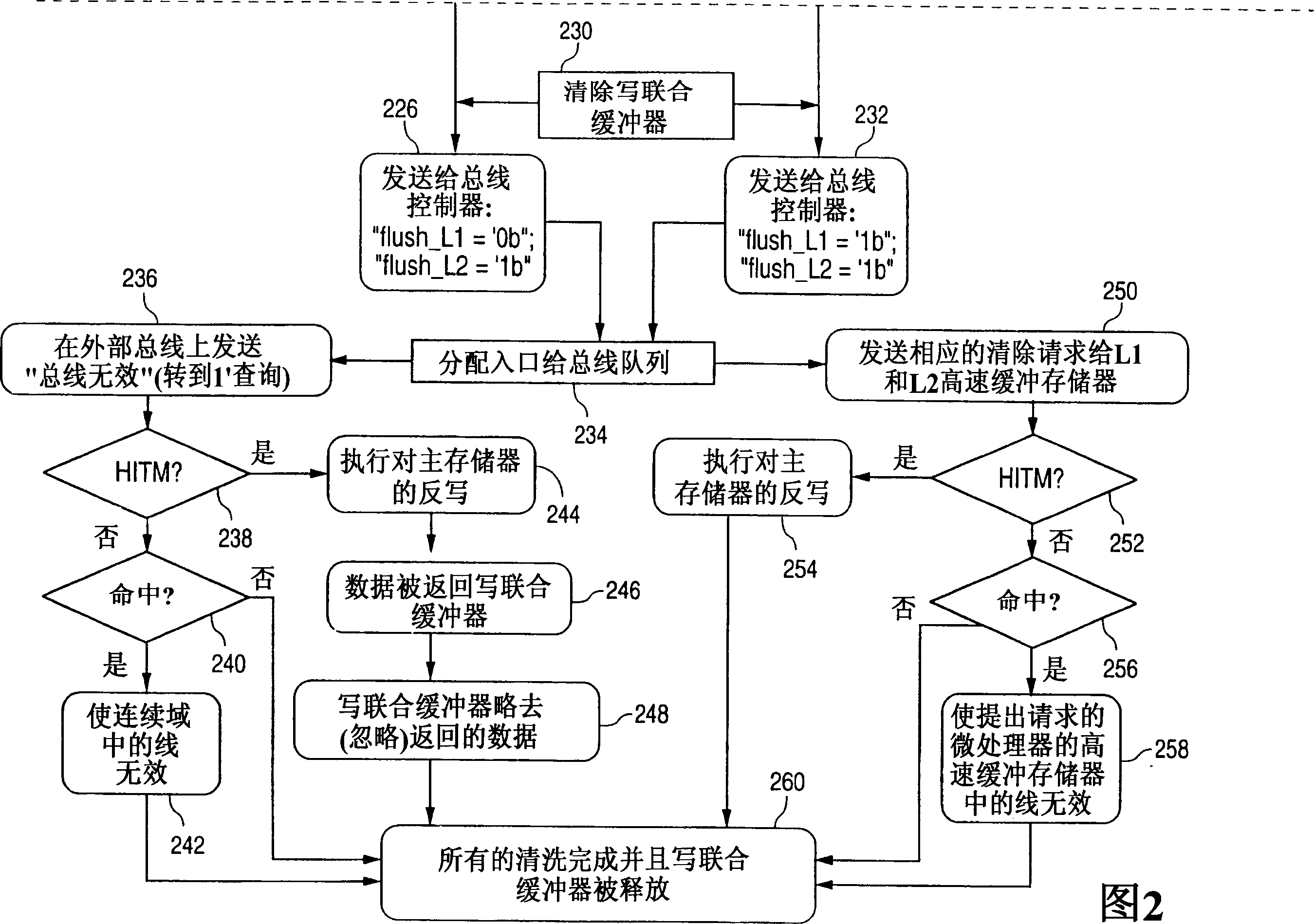


Embodiment Construction

[0016] By definition, a cache line is either completely valid or completely invalid; it is never partially valid. For example, even when the processor wants to read only one byte, all bytes of the applicable cache line must be present in the cache; otherwise, a cache "miss" occurs. The cache lines form the actual cache memory, while the cache directory is used only for cache management. A cache line typically contains more data than can be transferred in a single bus cycle. For this reason, most cache memory controllers implement burst mode, in which a preset sequence of addresses allows data to be transferred across the bus more quickly. Burst mode is used for cache line fills and for writing back cache lines, since each cache line represents a contiguous and aligned region of addresses.
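The all-or-nothing validity rule and the burst line fill described in [0016] can be illustrated with a small software model. This is a minimal sketch, not the patented hardware: it assumes a tiny direct-mapped cache, a 64-byte line, and an 8-byte bus, and the names `DirectMappedCache`, `read_byte`, and `_burst_fill` are hypothetical. A one-byte read that misses triggers a fill of the whole aligned line as a fixed sequence of contiguous bus beats, and only then is the line marked valid.

```python
LINE_SIZE = 64   # bytes per cache line (assumed for illustration)
BUS_WIDTH = 8    # bytes transferred per bus cycle (assumed)
NUM_LINES = 4    # tiny direct-mapped cache, hypothetical size


class DirectMappedCache:
    """Hypothetical model: a line is either wholly valid or wholly invalid."""

    def __init__(self, memory: bytearray):
        self.memory = memory
        self.valid = [False] * NUM_LINES
        self.tags = [0] * NUM_LINES
        self.data = [bytearray(LINE_SIZE) for _ in range(NUM_LINES)]
        self.misses = 0
        self.bus_cycles = 0

    def _locate(self, addr: int):
        line_base = addr & ~(LINE_SIZE - 1)   # aligned start of the containing line
        line_no = line_base // LINE_SIZE
        return line_base, line_no % NUM_LINES, line_no // NUM_LINES

    def _burst_fill(self, index: int, line_base: int) -> None:
        # Burst mode: walk a preset sequence of contiguous addresses,
        # moving BUS_WIDTH bytes per bus cycle until the whole line is filled.
        for beat_addr in range(line_base, line_base + LINE_SIZE, BUS_WIDTH):
            off = beat_addr - line_base
            self.data[index][off:off + BUS_WIDTH] = self.memory[beat_addr:beat_addr + BUS_WIDTH]
            self.bus_cycles += 1

    def read_byte(self, addr: int) -> int:
        line_base, index, tag = self._locate(addr)
        if not (self.valid[index] and self.tags[index] == tag):
            # Miss: even a one-byte read requires the entire line.
            self.misses += 1
            self._burst_fill(index, line_base)
            self.tags[index] = tag
            self.valid[index] = True  # valid only after every byte is present
        return self.data[index][addr - line_base]
```

With these assumed sizes, reading byte 70 costs one miss and eight bus cycles (one burst for bytes 64 to 127); a following read of byte 127 hits in the same line and costs no further bus traffic.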

[0017] Techniques for flushing cache lines may be associated with linear memory addresses. When executed, such a technique flushes the cache lines associated with its operands from all caches in...
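Because the paragraph above is truncated, the following is only a hedged sketch of what "flushing a line from all caches" commonly means, loosely following x86 `CLFLUSH`-style behavior rather than anything this document confirms. It assumes the linear address is used directly as the line key (no address translation), models each cache level as a dictionary, and uses hypothetical names (`CacheLevel`, `line_base`, `flush_line`). The line containing the address is invalidated in every level, and if any copy is modified, the newest one (the innermost dirty copy) is written back to memory first.

```python
from dataclasses import dataclass, field

LINE_SIZE = 64  # assumed line size in bytes


@dataclass
class CacheLevel:
    """Hypothetical cache level: maps line base address -> [data, dirty]."""
    name: str
    lines: dict = field(default_factory=dict)


def line_base(linear_addr: int) -> int:
    # Assumption: linear address used directly as the line key (identity translation).
    return linear_addr & ~(LINE_SIZE - 1)


def flush_line(linear_addr: int, levels: list, memory: bytearray) -> bool:
    """Invalidate the line holding linear_addr in every level.

    `levels` is ordered innermost (L1) first, so the first dirty copy found
    is the most recent one; it alone is written back. Returns True if a
    write-back occurred.
    """
    base = line_base(linear_addr)
    written_back = False
    for level in levels:
        entry = level.lines.pop(base, None)  # invalidate in this level
        if entry is None:
            continue
        data, dirty = entry
        if dirty and not written_back:
            memory[base:base + LINE_SIZE] = data
            written_back = True
    return written_back
```

Ordering the levels innermost first is a design choice in this sketch: it ensures a stale dirty copy in an outer level can never overwrite the newer data held closer to the core.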
