Knowledge distillation-based text classification method and system

A text classification and knowledge distillation technology, applied in neural learning methods, character and pattern recognition, instruments, etc. Its stated effects are ensuring the accuracy of results, improving accuracy, and facilitating deployment and use.

Examples

Embodiment 1

[0089] As shown in Figure 5, this embodiment provides a text classification method based on knowledge distillation. The method is as follows:

[0090] S1. Obtain an unsupervised corpus (data 1) and preprocess it;
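The embodiment does not say what the preprocessing in S1 consists of. As a minimal sketch, assuming a one-document-per-line corpus and a few common cleaning rules (Unicode NFKC normalization, whitespace collapsing, a hypothetical minimum-length filter `min_chars`, and exact-duplicate removal), the step could look like this; the function name is hypothetical.

```python
import re
import unicodedata


def preprocess_corpus(lines, min_chars=5):
    """Hypothetical cleaning pass for the unsupervised corpus (data 1).

    The rules below are illustrative assumptions, not the patent's own steps.
    """
    seen = set()
    cleaned = []
    for line in lines:
        # Fold full-width / compatibility characters, e.g. "ＡＢＣ" -> "ABC".
        text = unicodedata.normalize("NFKC", line)
        # Collapse runs of whitespace and trim both ends.
        text = re.sub(r"\s+", " ", text).strip()
        # Drop fragments too short to be useful for language-model training.
        if len(text) < min_chars:
            continue
        # Keep only the first occurrence of each exact duplicate.
        if text in seen:
            continue
        seen.add(text)
        cleaned.append(text)
    return cleaned
```

For example, `preprocess_corpus(["  Hello   world  ", "Hello world", "hi", "ＡＢＣ defg"])` returns `["Hello world", "ABC defg"]`: the second line is a duplicate after normalization and `"hi"` is below the length threshold.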

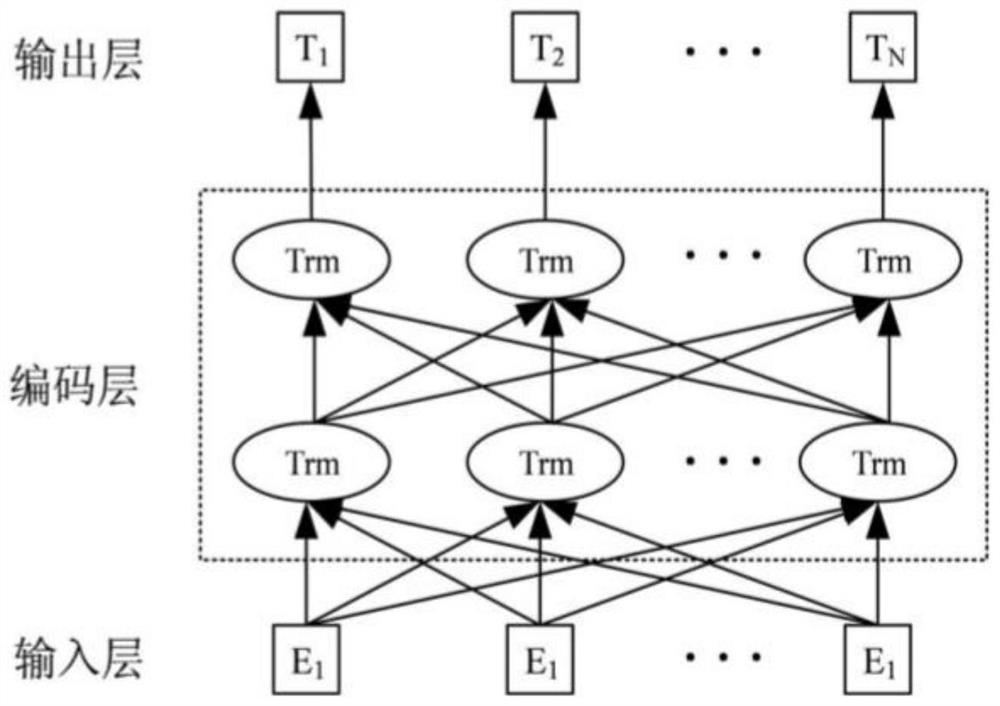

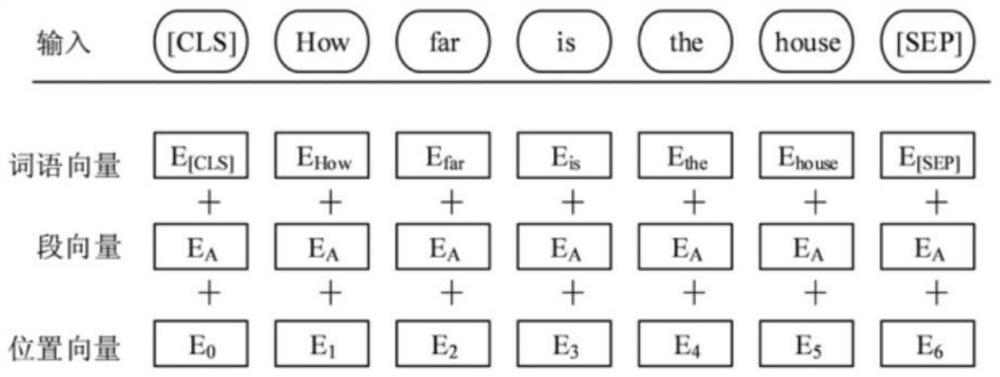

[0091] S2. Train a teacher language model (model T) on the large-scale unsupervised corpus;
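The pre-training objective for model T is not specified here. If it follows the common BERT-style masked language modeling recipe (an assumption), each token sequence is corrupted as sketched below: roughly 15% of positions are selected for prediction, and of those 80% are replaced by `[MASK]`, 10% by a random token, and 10% are left unchanged. `MASK_ID`, `IGNORE` and `mask_tokens` are hypothetical names.

```python
import random

MASK_ID = 103  # hypothetical id of the [MASK] token
IGNORE = -100  # label for positions that do not contribute to the loss


def mask_tokens(token_ids, vocab_size, mask_prob=0.15, seed=None):
    """BERT-style masked-LM corruption (assumed pre-training objective)."""
    rng = random.Random(seed)
    inputs = list(token_ids)
    labels = [IGNORE] * len(inputs)
    for i, tok in enumerate(token_ids):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK_ID  # 80%: replace with [MASK]
        elif r < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels
```

The teacher is then trained to predict `labels` at the selected positions from the corrupted `inputs`.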

[0092] S3. Fine-tune the teacher language model (model T) on the specific classification task using that task's supervised training corpus (data 2), obtaining a trained teacher language model (model T);
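Fine-tuning in S3 updates the teacher with a supervised classification loss on labelled data. The sketch below shows only that objective, softmax cross-entropy, applied to a linear classification head over frozen feature vectors. Treating the features as fixed stand-ins for the teacher's sentence representations is a simplification (real fine-tuning also updates the teacher's own parameters), and all names are hypothetical.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def head_logits(W, b, x):
    # One logit per class: W[c] . x + b[c]
    return [sum(w * xi for w, xi in zip(W[c], x)) + b[c] for c in range(len(W))]


def mean_loss(W, b, features, labels):
    # Average softmax cross-entropy, -log p(y | x), over the labelled set.
    return sum(-math.log(softmax(head_logits(W, b, x))[y])
               for x, y in zip(features, labels)) / len(labels)


def train_head(features, labels, num_classes, lr=0.5, epochs=50):
    """Fit the head with plain SGD on cross-entropy (features stay frozen)."""
    dim = len(features[0])
    W = [[0.0] * dim for _ in range(num_classes)]
    b = [0.0] * num_classes
    for _ in range(epochs):
        for x, y in zip(features, labels):
            probs = softmax(head_logits(W, b, x))
            for c in range(num_classes):
                # d(CE)/d(logit_c) = p_c - 1[c == y]
                g = probs[c] - (1.0 if c == y else 0.0)
                for j in range(dim):
                    W[c][j] -= lr * g * x[j]
                b[c] -= lr * g
    return W, b
```

On a toy, linearly separable set such as `X = [[1, 0], [0.9, 0.1], [0, 1], [0.1, 0.9]]` with labels `[0, 0, 1, 1]`, the mean loss starts at ln 2 (all-zero head) and falls below it after training.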

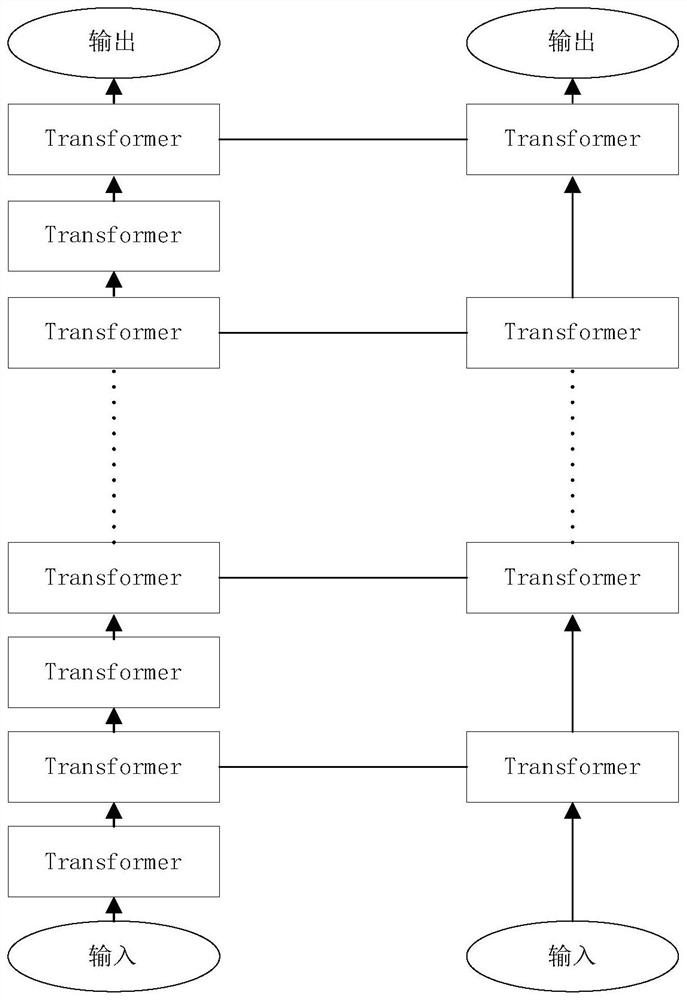

[0093] S4. Construct a student model (model S) according to the specific classification task and the trained teacher language model (model T);
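S4 only says the student is built according to the task and the trained teacher. One common approach (assumed here, not stated in the source) is to keep the teacher's output size, i.e. the number of classes for the task, use fewer layers, and fix a uniform mapping from each student layer to a teacher layer for the intermediate-layer loss. `EncoderConfig`, `make_student_config` and `layer_mapping` are hypothetical names.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class EncoderConfig:
    num_layers: int
    hidden_size: int
    num_classes: int  # fixed by the specific classification task


def make_student_config(teacher, num_layers, hidden_size=None):
    """Derive a smaller student config from the trained teacher (hypothetical policy)."""
    if not 0 < num_layers <= teacher.num_layers:
        raise ValueError("student depth must be between 1 and the teacher's depth")
    return replace(teacher, num_layers=num_layers,
                   hidden_size=hidden_size or teacher.hidden_size)


def layer_mapping(student_layers, teacher_layers):
    """Uniformly map student layer m (1-based) to teacher layer m * k."""
    if teacher_layers % student_layers:
        raise ValueError("teacher depth must be a multiple of student depth")
    k = teacher_layers // student_layers
    return {m: m * k for m in range(1, student_layers + 1)}
```

With a 12-layer teacher and a 4-layer student, `layer_mapping(4, 12)` gives `{1: 3, 2: 6, 3: 9, 4: 12}`. If the student's `hidden_size` differs from the teacher's, an intermediate-layer loss would also need a learned projection between the two widths.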

[0094] S5. Construct a loss function from the intermediate-layer outputs and the final output of the teacher language model (model T), and use it to train the student model (model S), obtaining the final student model (model S);
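S5 combines the teacher's intermediate-layer outputs and final output into one training loss. A minimal sketch, assuming mean-squared error between mapped hidden vectors plus the temperature-softened KL divergence between class distributions scaled by T² (the soft-target loss of Hinton et al.), with hypothetical weights `alpha` and `beta`, is shown below. Hidden states are simplified to one vector per layer, passed as dicts keyed by 1-based layer index, and `layer_map` is a hypothetical student-to-teacher layer mapping.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def kl_div(p, q):
    """KL(p || q) for discrete distributions; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_hidden, teacher_hidden, layer_map,
                      student_logits, teacher_logits,
                      temperature=2.0, alpha=1.0, beta=1.0):
    """alpha * intermediate-layer MSE + beta * T^2 * KL(teacher || student)."""
    # Intermediate-layer term: each student layer matches its mapped teacher layer.
    hidden_term = sum(mse(student_hidden[m], teacher_hidden[t])
                      for m, t in layer_map.items()) / len(layer_map)
    # Final-output term: match temperature-softened class distributions.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft_term = temperature ** 2 * kl_div(p_teacher, p_student)
    return alpha * hidden_term + beta * soft_term
```

The loss is zero when the student reproduces the mapped teacher outputs exactly; during training it would typically be minimized over the student's parameters only, with the teacher kept fixed.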

[0095] S6. Use ...

Embodiment 2

[0147] This embodiment provides a text classification system based on knowledge distillation, and the system includes:

[0148] The first acquisition module is configured to acquire the unsupervised corpus (data 1) and preprocess it;

[0149] The first training module is configured to train a teacher language model (model T) on the large-scale unsupervised corpus;

[0150] The second training module is configured to fine-tune the teacher language model (model T) on the specific classification task using that task's supervised training corpus (data 2), obtaining a trained teacher language model (model T);

[0151] The construction module is configured to construct the student model (model S) based on the specific classification task and the trained teacher language model (model T);

[0152] The second acquisition module is configured to construct a loss function according to the intermediate-layer output and final...
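The modules of this embodiment mirror steps S1 to S5 of the method. A minimal sketch of how they could be wired together follows, assuming each module is a plain callable and that the distillation module also receives the supervised corpus (data 2) as transfer data; neither detail is stated in the source, and `DistillationSystem` is a hypothetical name.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class DistillationSystem:
    """Hypothetical wiring of the modules; each field is a user-supplied callable."""
    first_acquisition: Callable[[], Any]                 # acquire + preprocess data 1
    first_training: Callable[[Any], Any]                 # pre-train model T on data 1
    second_training: Callable[[Any, Any], Any]           # fine-tune model T on data 2
    construction: Callable[[Any], Any]                   # build model S from trained T
    second_acquisition: Callable[[Any, Any, Any], Any]   # build loss, train S (assumed inputs)

    def run(self, supervised_corpus):
        corpus = self.first_acquisition()
        teacher = self.first_training(corpus)
        teacher = self.second_training(teacher, supervised_corpus)
        student = self.construction(teacher)
        # Assumption: distillation uses data 2 as transfer data.
        return self.second_acquisition(student, teacher, supervised_corpus)
```

Keeping each module as an injectable callable lets the teacher and student implementations be swapped without changing the pipeline order.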

Embodiment 3

[0155] This embodiment also provides an electronic device, including: a memory and a processor;

[0156] The memory stores computer-executable instructions;

[0157] The processor executes the computer-executable instructions stored in the memory, so that the processor performs the text classification method based on knowledge distillation according to any embodiment of the present invention.

[0158] The processor may be a central processing unit (CPU) or another general-purpose processor, a digital signal processor (DSP), an application-specific integrated circuit (ASIC), a field-programmable gate array (FPGA) or other programmable logic device, a discrete gate or transistor logic device, a discrete hardware component, etc. The processor may be a microprocessor or any conventional processor.

[0159] The memory can be used to store computer programs and/or modules, and the processor implements various functions of the electronic device...
