Method for synthesizing virtual viewpoint image based on implicit neural scene representation

A virtual viewpoint image synthesis technique, applied in the field of virtual viewpoint image synthesis and roaming, that addresses problems such as slow speed and achieves the effect of optimizing distribution and improving computing speed and performance.


Embodiment Construction

[0044] To make the purposes, technical solutions, and advantages of the embodiments of the present invention clearer, the technical solutions of the present invention are described clearly and completely below with reference to specific embodiments of the present invention and the corresponding drawings. The described embodiments are some, but not all, embodiments of the present invention.

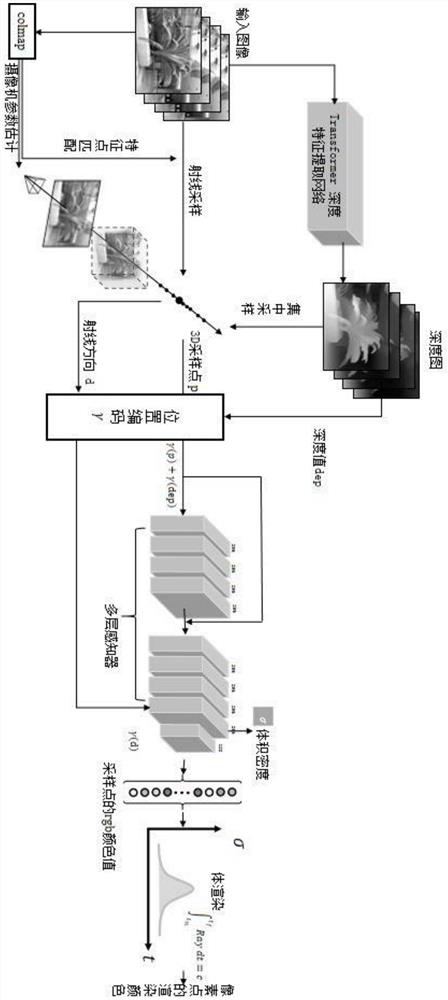

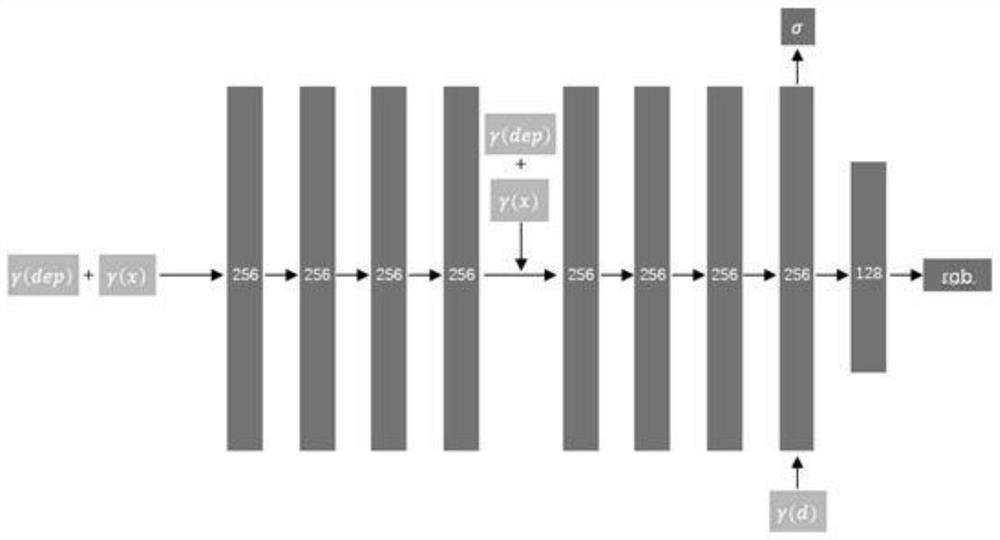

[0045] As shown in Figure 1, in this embodiment, the method for synthesizing virtual viewpoint images based on implicit neural scene representation includes the following steps:

[0046] S1. Acquire datasets used as training images and test images.

[0047] In this example, the dataset includes a large-scale scene dataset captured by a camera. About 30 images of the scene need to be captured to support construction of the neural scene representation, and the captured views need to cover all corners of the scene; Limits, including line, ar...
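Step S1 can be illustrated with a minimal sketch of dividing the captured images into training and test sets. The source does not state how the split is made, so the hold-out rule used here (every 8th image goes to the test set) is an assumption, and the function name `split_dataset`, the parameter `holdout_every`, and the file paths are hypothetical.

```python
from pathlib import Path


def split_dataset(image_paths, holdout_every=8):
    """Split image paths into (train, test) lists.

    Assumption: every `holdout_every`-th image (in sorted order) is held
    out for testing. The source does not specify the actual split rule.
    """
    if holdout_every < 2:
        raise ValueError("holdout_every must be at least 2")
    ordered = sorted(image_paths)
    # Indices 0, holdout_every, 2 * holdout_every, ... form the test set.
    test = ordered[::holdout_every]
    train = [p for i, p in enumerate(ordered) if i % holdout_every != 0]
    return train, test


if __name__ == "__main__":
    # Hypothetical file names standing in for about 30 captured images.
    paths = [Path(f"scene/img_{i:03d}.png") for i in range(30)]
    train, test = split_dataset(paths)
    print(len(train), len(test))
```

Holding out evenly spaced images keeps the test views spread across the capture path, so they cover the scene rather than clustering in one corner.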
