Indoor mobile robot navigation method

A navigation method for mobile robots, applied in the field of indoor mobile robot navigation, that addresses the problem of existing approaches being time-consuming and laborious and reduces the amount of calculation.

Embodiment 1

[0044] This embodiment provides a navigation method for an indoor mobile robot, including the following steps:

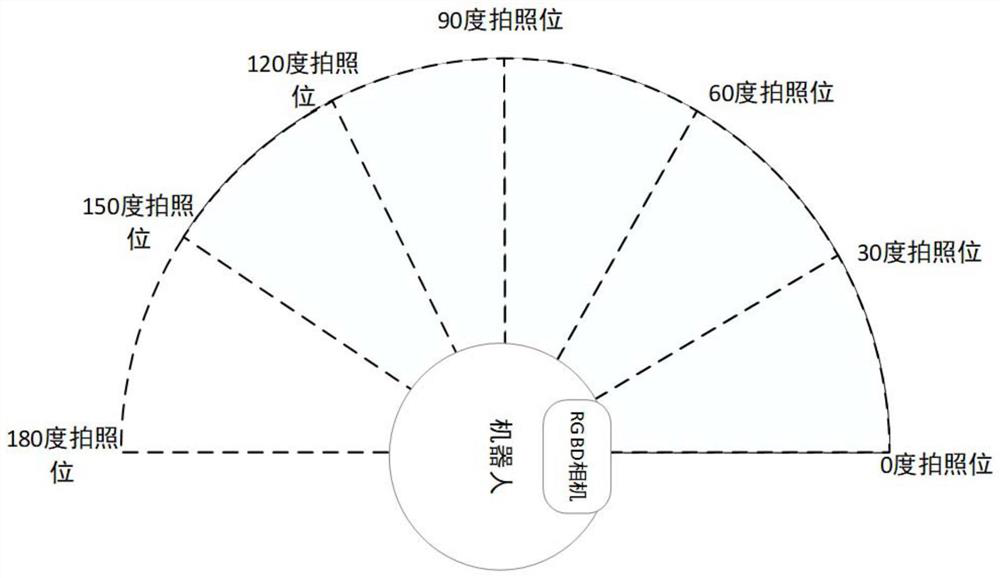

[0045] Step 1: The robot body explores autonomously and takes photos with the RGBD camera. It enters the room from a set starting point, and navigation control makes the robot body complete a slow 180-degree rotation from right to left. During the rotation, the RGBD camera collects image data once at every set angular interval: it captures the color image and depth image at the current position, and the current angle of the robot body is recorded for each capture. The set angle is not greater than the horizontal viewing angle of the RGBD camera.
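The capture schedule in Step 1 can be illustrated with a minimal sketch. The function name `capture_angles`, its parameters, and the example values (a 30-degree step and a 60-degree horizontal viewing angle) are assumptions made for illustration and do not come from the patent text. The sketch only lists the headings at which a capture would be triggered and enforces the stated constraint that the set angle must not exceed the camera's horizontal viewing angle, so that adjacent frames leave no gap in the 180-degree sweep.

```python
import math


def capture_angles(sweep_deg=180.0, step_deg=30.0, hfov_deg=60.0):
    """Return headings (degrees from the start of the sweep) at which to capture.

    All names and default values here are hypothetical; the source only states
    that the sweep is 180 degrees and that the step must not exceed the
    camera's horizontal viewing angle.
    """
    if step_deg <= 0:
        raise ValueError("step_deg must be positive")
    if step_deg > hfov_deg:
        # A larger step would leave part of the sweep unobserved between frames.
        raise ValueError("step_deg must not exceed hfov_deg")

    # Number of whole steps that fit into the sweep (small epsilon guards
    # against floating-point error when the division is exact).
    n_steps = math.floor(sweep_deg / step_deg + 1e-9)
    angles = [i * step_deg for i in range(n_steps + 1)]

    # Make sure the final heading of the sweep is also captured.
    if angles[-1] < sweep_deg:
        angles.append(sweep_deg)
    return angles


if __name__ == "__main__":
    for heading in capture_angles():
        # In a real system, the color image, the depth image, and this
        # heading would be recorded together at each stop.
        print(f"capture at {heading:.1f} degrees")
```

A smaller step gives more overlap between neighboring frames at the cost of more images to process; the source leaves the specific interval open.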

[0046] Step 2: The robot body performs target detection and recognition on the photos taken by the RGBD camera. Step 2 includes the following steps:

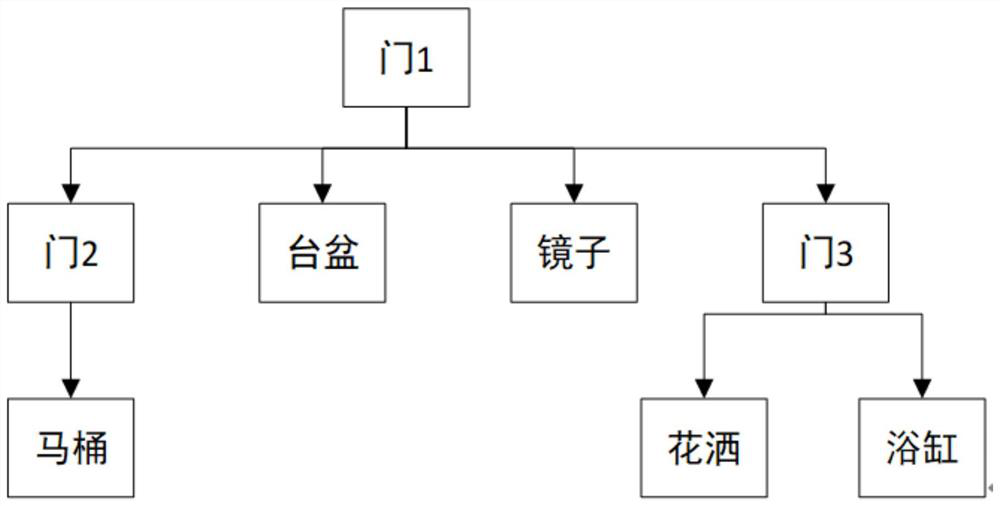

[0047] Step 2.1: Each time the image data is collected, it is sent to the trained deep learning model for detection, the target o...

Embodiment 2

[0063] Those skilled in the art can understand this embodiment as a more specific description of Embodiment 1.

[0064] As shown in Figures 1 to 3, this embodiment provides a navigation method for autonomous exploration of an unknown environment based on target recognition. The method is based on a system composed of a mobile robot and an RGBD camera. The RGBD camera can collect color images and depth images and is installed horizontally at the front of the robot.

[0065] The method comprises the following steps:

[0066] Step 1: The robot autonomously explores and takes pictures;

[0067] Step 2: Target detection and recognition;

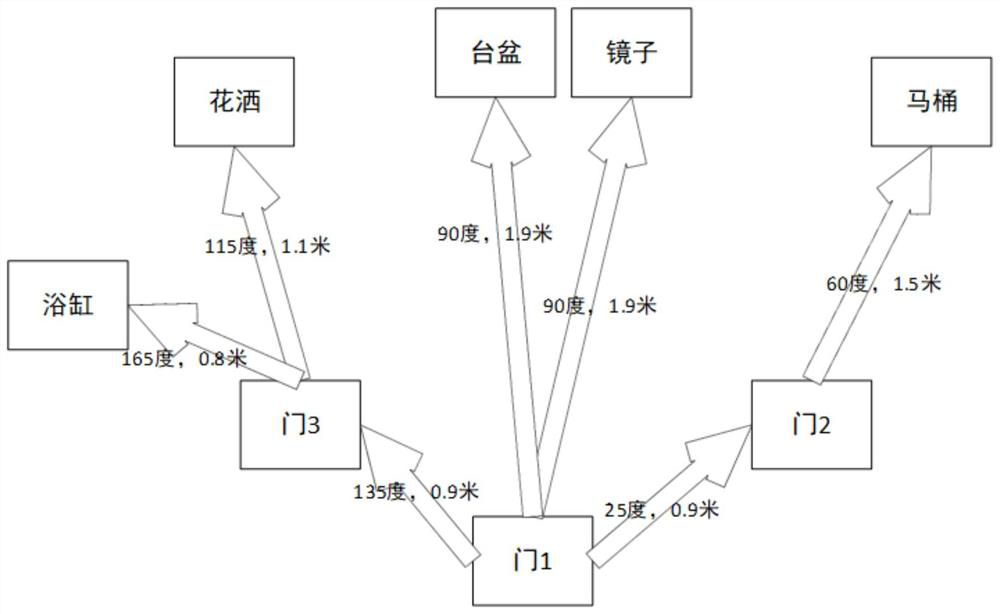

[0068] Step 3: Estimation of the angle and distance of the target;

[0069] Step 4: Object navigation rules.

[0070] Wherein, step 1 includes the following steps:

[0071] Step 1.1: Enter the room from a set starting point, and navigate and control the body of the robot to complete a slow rotation of 180 degrees from right to left. During the...
