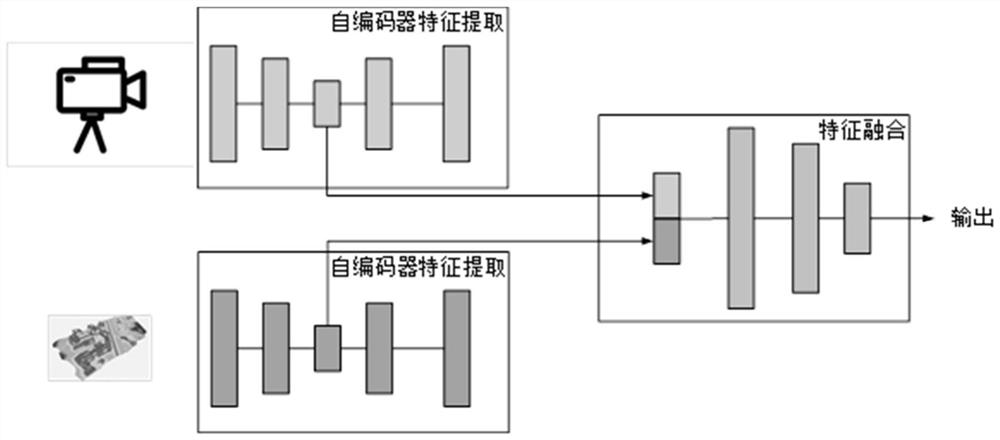

Autonomous system sensing method and system based on multi-modal fusion

Multi-modal fusion technology, applied to perception methods and systems for autonomous systems, addresses problems such as the unstable working state of the perception system. It overcomes the limitations of vision, reduces uncertainty, and improves performance.

Embodiment Construction

[0024] The specific embodiments of the present invention will be further described below in conjunction with the accompanying drawings. It should be noted here that the descriptions of these embodiments are used to help understand the present invention, but are not intended to limit the present invention. In addition, the technical features involved in the various embodiments of the present invention described below may be combined with each other as long as they do not constitute a conflict with each other.

[0025] With the development of artificial intelligence techniques such as machine learning and deep learning, large amounts of data can be processed and relatively high-precision models can be built. In practice, however, no single sensor can fully reflect the real-world situation. Therefore, this application combines different sensors, or different views of the same sensor, to reconstruct the real situation, and the result is then fed into a deep learning model.
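The idea of combining several sensors into one model input can be illustrated with a minimal sketch. The text available here does not state how the fusion is performed, so everything below is an assumption made for illustration only: the sensor names (`camera`, `lidar`), the min-max scaling, and the simple feature-level concatenation are hypothetical choices, not the method of the application.

```python
from typing import Dict, List, Sequence


def normalize(values: Sequence[float]) -> List[float]:
    """Scale one sensor's readings to the range [0, 1] (min-max scaling)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant reading carries no relative information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def fuse(readings: Dict[str, Sequence[float]], order: Sequence[str]) -> List[float]:
    """Concatenate normalized per-sensor vectors in a fixed sensor order."""
    fused: List[float] = []
    for name in order:
        fused.extend(normalize(readings[name]))
    return fused


# Hypothetical readings from two sensors observing the same scene.
readings = {
    "camera": [0.0, 128.0, 255.0],   # assumed: pixel intensities
    "lidar": [2.0, 4.0, 6.0, 10.0],  # assumed: ranges in metres
}
fused_input = fuse(readings, order=["camera", "lidar"])
print(len(fused_input))  # 3 camera values followed by 4 lidar values
```

Scaling each sensor separately before concatenation keeps a sensor with large raw values from dominating the fused vector. A fixed sensor order keeps the layout of the vector stable for whatever model consumes it.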

[0026] See Figure 1. The embodiment sho...
