Thermal effect optimization method for neural network accelerators based on memristor cross arrays

A thermal effect optimization method for neural network accelerators based on memristor cross arrays. It addresses problems such as reduced calculation accuracy, and achieves the effects of improving accuracy, reducing the influence of thermal effects, and shortening operation time.


Examples

Embodiment 1

[0074] A method for optimizing the thermal effect of a neural network accelerator based on a memristor cross array, comprising the following steps:

[0075] Step 1: Establish a fast temperature distribution calculation model:

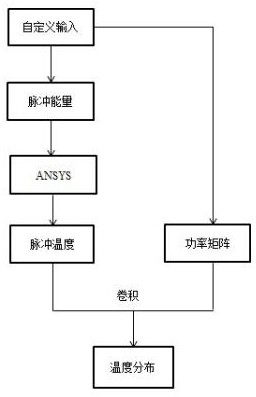

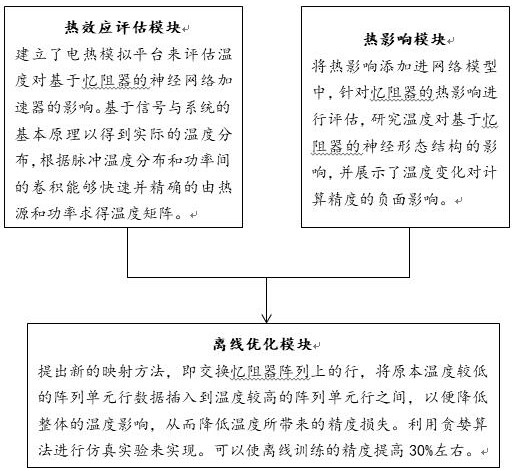

[0076] First, customize the input data and define these data as power values P to obtain the actual power matrix. Select the pulse power at a point (x, y) in the actual power matrix and divide it by the volume to obtain the value of the pulse heat source. Input this value into ANSYS software and obtain a pulse temperature matrix through its finite element calculation function. Finally, convolve the pulse temperature matrix with the actual power matrix and divide the result by the pulse power to obtain the actual temperature distribution matrix.
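The convolution step described in [0076] can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the patented implementation: the function names are hypothetical, the power values are random, and a Gaussian-shaped matrix stands in for the pulse temperature matrix that the described flow would obtain from ANSYS. The kernel is assumed to have odd dimensions and the array edges are zero-padded.

```python
import numpy as np


def pulse_heat_source(pulse_power, cell_volume):
    """Volumetric heat source for the single pulsed cell: power divided by volume."""
    return pulse_power / cell_volume


def convolve2d_same(a, kernel):
    """Zero-padded 2-D convolution; output has the shape of `a` (odd-sized kernel assumed)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(a, ((ph, ph), (pw, pw)))
    flipped = kernel[::-1, ::-1]  # a true convolution flips the kernel
    out = np.zeros(a.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + a.shape[0], j:j + a.shape[1]]
    return out


def temperature_distribution(power, pulse_temp, pulse_power):
    """Convolve the power matrix with the pulse temperature matrix, then divide by the pulse power."""
    return convolve2d_same(power, pulse_temp) / pulse_power


def toy_pulse_temp(size=5, peak=2.0, sigma=1.0):
    """Hypothetical stand-in for the finite element result: a Gaussian-shaped response."""
    ax = np.arange(size) - size // 2
    xx, yy = np.meshgrid(ax, ax)
    return peak * np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))


rng = np.random.default_rng(0)
power = rng.uniform(0.0, 1e-3, size=(8, 8))  # made-up per-cell power values P
pulse_power = 1e-3                           # power applied to the single pulsed cell
pulse_temp = toy_pulse_temp()                # would come from ANSYS in the described flow
temperature = temperature_distribution(power, pulse_temp, pulse_power)
print(temperature.shape)
```

A quick sanity check on such a sketch: a power matrix containing only the pulse itself should reproduce the pulse temperature matrix, and doubling every power value should double the result, since the model is linear.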

[0077] Step 2: Establish the MLP neural network failure assessment model: apply the distribution matrix of the actual temperature T obtain...

Embodiment 2

[0081] Embodiment 2 is basically the same as Embodiment 1, except that:

[0082] In Step 1, the fast temperature distribution calculation model is established as follows:

[0083] First, customize the input data and define these data as power values P to obtain the actual power matrix. Then use the analytical calculation method to obtain an accurate global temperature distribution of the memristor neural network accelerator, while keeping the error in the maximum temperature distribution, compared with the traditional numerical calculation method, to no more than 5%. The following fast temperature distribution calculation model is established, as shown in formula (1):

[0084]

[0085] where T_δ(x, y, τ) represents the temperature value at position (x, y) in the memristor-based neural network accelerator at time τ under the thermal action of the pulse signal, the power matrix P is a known quantity, and P(x, y, τ) repres...

Embodiment 3

[0101] Embodiment 3 is basically the same as Embodiment 2, except that:

[0102] The thermal effect optimization method of the present invention for a neural network accelerator based on a memristor cross array comprises the following steps:

[0103] In the model part, the "power fuzzy" (power blurring) fast temperature calculation method is used to quickly establish a temperature distribution calculation model. The main idea is to treat the relationship between heat and temperature distribution as a linear signal system: heat is the input, and the corresponding temperature distribution is the output response. The basic principle of signals and systems shows that the output of a linear system can be regarded as the time-domain convolution of the input with the impulse response. The traditional method calculates all heat sources through fine numerical calculations and therefore takes too long, but the method used in the model proposed by the presen...
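Read together with the convolution step in paragraph [0076], the linear-system view can be written compactly. The following is a sketch only and is not the patent's formula (1), which is not reproduced in this text. The symbol $P_\delta$ for the pulse power and the discrete, time-independent form are assumptions made here for illustration:

$$
T(x, y) = \frac{1}{P_\delta} \sum_{x'} \sum_{y'} P(x', y')\, T_\delta(x - x',\, y - y')
$$

Here $P$ is the actual power matrix and $T_\delta$ is the pulse temperature matrix obtained for a single heat source of power $P_\delta$. Under this reading, one finite element run supplies $T_\delta$, and each further power pattern costs only a convolution.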
