Method and system for sound source localization and sound source separation based on dual consistent network

A sound source localization and separation technology based on a dual consistent network, applied in neural network learning methods, biological neural network models, speech analysis, and related fields. It addresses shortcomings of existing approaches, such as slow computation, architectures that cannot handle both tasks simultaneously, and poor performance, and improves performance on both tasks.


AI Technical Summary

Problems solved by technology

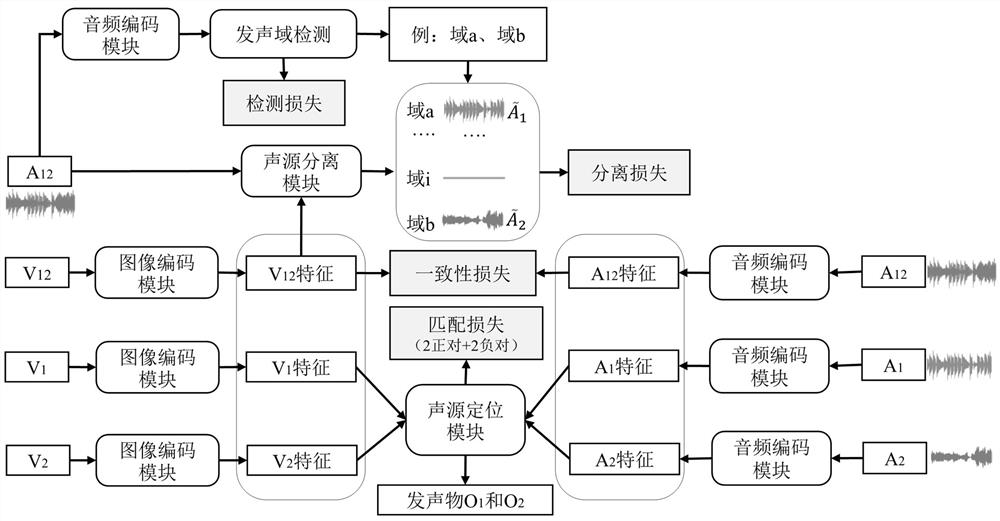

Method used

Image

Examples

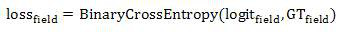

Embodiment

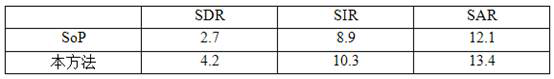

[0114] To further demonstrate its effectiveness, the present invention is evaluated experimentally on the MUSIC dataset, which contains 685 untrimmed videos collected from YouTube: 536 solo videos and 149 duet videos. The videos cover 11 instrument categories (accordion, acoustic guitar, cello, clarinet, erhu, flute, trumpet, tuba, saxophone, violin, and xylophone), which makes the dataset suitable for both sound source separation and sound source localization. For the sound source localization task, Intersection over Union (IoU) and Area Under the Curve (AUC) are used as evaluation metrics. Extending existing visual localization methods, SoP (Hang Zhao, Chuang Gan, Andrew Rouditchenko, Carl Vondrick, Josh H. McDermott, and Antonio Torralba. The sound of pixels. In ECCV, 2018) and DMC (Di Hu, Feiping Nie, and Xuelong Li. Deep multimodal clustering for unsupervised audio...
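The paragraph names IoU and AUC as the localization metrics but does not state the exact evaluation protocol. The following is a minimal sketch, assuming a convention common in audio-visual localization work: a predicted localization heatmap is binarized with a threshold, its IoU with a ground-truth mask is computed, and AUC is the area under the curve of "fraction of test samples whose IoU is at least t" as t sweeps over [0, 1]. The function names, the binarization rule, and the 20-step trapezoidal sweep are illustrative assumptions, not the patent's own procedure.

```python
from typing import List, Sequence

# Binary 2-D mask: 1 marks the sounding region (assumed representation).
Mask = List[List[int]]


def binarize(heatmap: Sequence[Sequence[float]], threshold: float) -> Mask:
    """Turn a localization heatmap into a binary mask (assumed thresholding rule)."""
    return [[1 if v >= threshold else 0 for v in row] for row in heatmap]


def iou(pred: Mask, gt: Mask) -> float:
    """Intersection over Union between two binary masks of equal shape."""
    inter = union = 0
    for prow, grow in zip(pred, gt):
        for p, g in zip(prow, grow):
            inter += p & g
            union += p | g
    # Two empty masks have no union; treat that case as IoU 0 here.
    return inter / union if union else 0.0


def success_auc(ious: Sequence[float], steps: int = 20) -> float:
    """Area under the 'fraction of samples with IoU >= t' curve for t in [0, 1].

    Integrates with the trapezoidal rule over `steps` equal intervals
    (the number of steps is an assumption, not taken from the source).
    """
    if not ious:
        raise ValueError("need at least one IoU value")
    thresholds = [i / steps for i in range(steps + 1)]
    rates = [sum(1 for x in ious if x >= t) / len(ious) for t in thresholds]
    # Each trapezoid has width 1 / steps.
    return sum((rates[i] + rates[i + 1]) / 2 for i in range(steps)) / steps


if __name__ == "__main__":
    pred = binarize([[0.9, 0.2], [0.8, 0.1]], 0.5)  # [[1, 0], [1, 0]]
    gt = [[1, 1], [0, 0]]
    print(iou(pred, gt))        # 1 overlapping cell out of 3 in the union
    print(success_auc([0.5]))   # one sample with IoU 0.5
```

In practice the per-sample IoU values would be collected over the whole test split before calling `success_auc`; a finer sweep (larger `steps`) makes the AUC estimate smoother.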
