No-reference video quality evaluation method based on a short-time spatio-temporal fusion network and a long-time sequence fusion network

A spatio-temporal fusion network technology, applied in neural network learning methods, biological neural network models, television, and related fields, that addresses poor evaluation performance and produces accurate video quality scores.


Specific embodiment

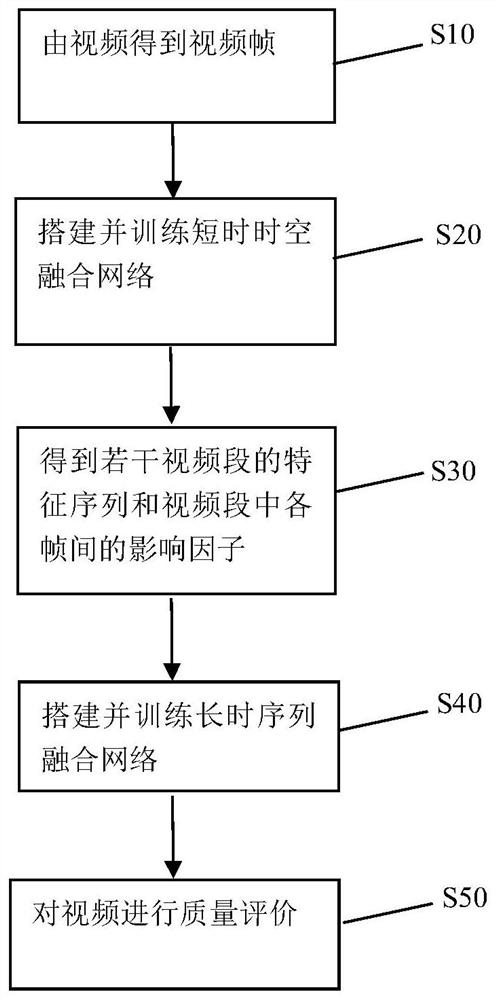

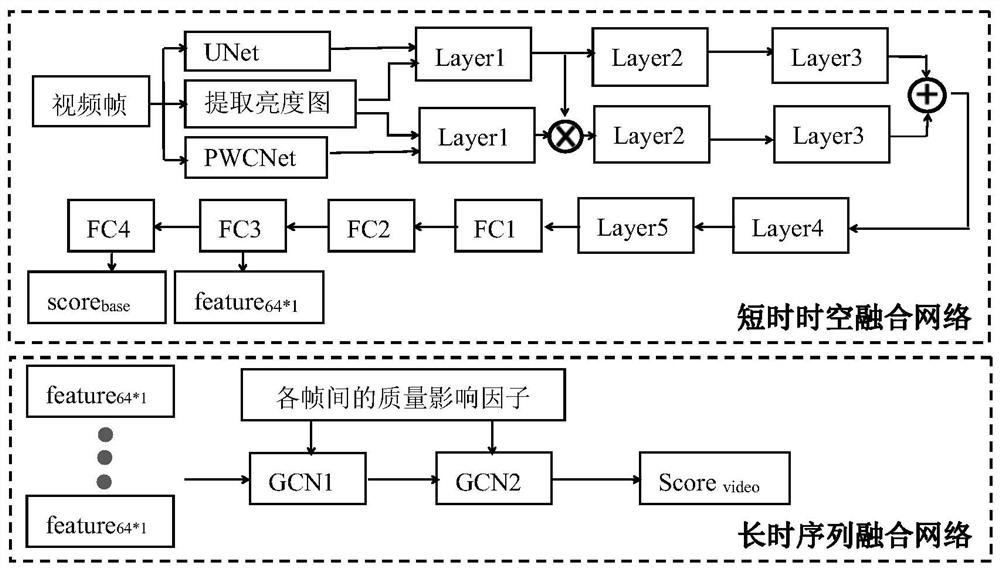

[0049] The flow chart of the implementation is shown in Figure 1 and includes the following steps:

[0050] Step S10, obtaining video frames from the video;

[0051] Step S20, building and training a short-time spatio-temporal fusion network;

[0052] Step S30, obtaining the feature sequences of several video segments and the mutual influence factors of each frame in each video segment;

[0053] Step S40, building and training a long-time sequence fusion network;

[0054] Step S50, evaluating the quality of the video.
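Read together, steps S10 to S50 describe a two-stage data flow: a short-time network summarizes each video segment, and a long-time network fuses the resulting sequence of per-segment features into a single quality score. The sketch below shows only that data flow under stated assumptions. The segment length, the use of non-overlapping segments, scalar features, and the helper names `split_into_segments` and `evaluate_video` are all hypothetical, and both trained networks are replaced by caller-supplied functions. It does not model the per-frame mutual influence factors of step S30, whose form is not given in this excerpt.

```python
from typing import Callable, List, Sequence, TypeVar

F = TypeVar("F")  # one sampled frame; its concrete type is left open in this sketch


def split_into_segments(frames: Sequence[F], segment_len: int) -> List[List[F]]:
    """Group consecutive frames into non-overlapping segments of `segment_len` frames.
    A trailing partial segment is dropped (an assumption, not stated in the source)."""
    if segment_len < 1:
        raise ValueError("segment_len must be >= 1")
    return [list(frames[i:i + segment_len])
            for i in range(0, len(frames) - segment_len + 1, segment_len)]


def evaluate_video(
    frames: Sequence[F],
    segment_len: int,
    short_time_net: Callable[[List[F]], float],
    long_time_net: Callable[[List[float]], float],
) -> float:
    """Hypothetical two-stage data flow loosely mirroring steps S20 to S50."""
    segments = split_into_segments(frames, segment_len)
    # Short-time stage: one feature per segment (stand-in for the trained network of S20/S30).
    features = [short_time_net(seg) for seg in segments]
    # Long-time stage: fuse the feature sequence into one score (stand-in for S40/S50).
    return long_time_net(features)
```

For example, with a simple average standing in for both networks, `evaluate_video([1, 2, 3, 4, 5, 6, 7], 3, avg, avg)` forms the segments `[1, 2, 3]` and `[4, 5, 6]`, produces the features 2.0 and 5.0, and returns 3.5; the seventh value is dropped as an incomplete segment.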

[0055] In this embodiment, Step S10 (obtaining video frames from the video) further includes the following steps:

[0056] Step S100, extracting video frames: converting the complete video sequence from a format such as YUV to BMP and saving it frame by frame;

[0057] Step S110, sampling video frames: selecting one frame at an interval of 4 frames and directly discarding the other frames as redundant;

[0058] Step S120, generating a lumina...
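Steps S100 and S110 can be sketched in Python as follows. This is a minimal illustration under explicit assumptions, not the patent's implementation: the raw input is taken to be 8-bit I420 (YUV 4:2:0 planar) with even frame dimensions, the YUV-to-RGB conversion uses BT.601 limited-range coefficients, and "intervals of 4" is read as keeping every 4th frame (indices 0, 4, 8, ...). The helper names and the `frame_{i:05d}.bmp` naming pattern are hypothetical. The BMP encoder writes the standard 54-byte header followed by bottom-up BGR rows padded to a multiple of 4 bytes. Step S120 is not modeled because its description is truncated here.

```python
import struct
from typing import Iterator, List, Sequence, Tuple, TypeVar

T = TypeVar("T")
RGB = Tuple[int, int, int]


def clamp(v: float) -> int:
    """Round and clip a channel value to the 8-bit range 0..255."""
    return max(0, min(255, int(round(v))))


def i420_frames(data: bytes, width: int, height: int) -> Iterator[Tuple[bytes, bytes, bytes]]:
    """Yield the (Y, U, V) planes of each frame in raw 8-bit I420 data (assumed layout).
    Assumes even width and height; a trailing incomplete frame is ignored."""
    y_size = width * height
    c_size = (width // 2) * (height // 2)
    frame_size = y_size + 2 * c_size
    for off in range(0, len(data) - frame_size + 1, frame_size):
        yield (data[off:off + y_size],
               data[off + y_size:off + y_size + c_size],
               data[off + y_size + c_size:off + frame_size])


def yuv_to_rgb(y: bytes, u: bytes, v: bytes, width: int, height: int) -> List[List[RGB]]:
    """Convert one I420 frame to RGB rows with BT.601 limited-range coefficients (assumed)."""
    cw = width // 2
    rows = []
    for r in range(height):
        row = []
        for c in range(width):
            yy = 1.164 * (y[r * width + c] - 16)
            uu = u[(r // 2) * cw + c // 2] - 128  # each chroma sample covers a 2x2 block
            vv = v[(r // 2) * cw + c // 2] - 128
            row.append((clamp(yy + 1.596 * vv),
                        clamp(yy - 0.391 * uu - 0.813 * vv),
                        clamp(yy + 2.018 * uu)))
        rows.append(row)
    return rows


def rgb_to_bmp(rows: List[List[RGB]]) -> bytes:
    """Encode RGB rows as an uncompressed 24-bit BMP: bottom-up rows, BGR order, 4-byte padding."""
    height, width = len(rows), len(rows[0])
    pad = (4 - (width * 3) % 4) % 4
    pixels = bytearray()
    for row in reversed(rows):
        for r, g, b in row:
            pixels += bytes((b, g, r))
        pixels += b"\x00" * pad
    offset = 14 + 40  # BITMAPFILEHEADER + BITMAPINFOHEADER
    file_header = struct.pack("<2sIHHI", b"BM", offset + len(pixels), 0, 0, offset)
    info_header = struct.pack("<IiiHHIIiiII", 40, width, height, 1, 24, 0,
                              len(pixels), 2835, 2835, 0, 0)
    return file_header + info_header + bytes(pixels)


def sample_frames(frames: Sequence[T], interval: int = 4) -> List[T]:
    """Step S110 as interpreted here: keep every `interval`-th frame and discard the rest."""
    if interval < 1:
        raise ValueError("interval must be >= 1")
    return list(frames[::interval])


def extract_and_sample(yuv_path: str, width: int, height: int, interval: int = 4) -> List[str]:
    """Step S100: save every frame as a BMP file; step S110: return the sampled file names.
    The output naming pattern is hypothetical."""
    with open(yuv_path, "rb") as f:
        data = f.read()
    names = []
    for i, (y, u, v) in enumerate(i420_frames(data, width, height)):
        name = f"frame_{i:05d}.bmp"
        with open(name, "wb") as out:
            out.write(rgb_to_bmp(yuv_to_rgb(y, u, v, width, height)))
        names.append(name)
    return sample_frames(names, interval)
```

Calling `extract_and_sample("input.yuv", width, height)` (the file name is a placeholder) writes every frame to disk, as step S100 describes, and returns only the names of the retained frames. The per-pixel loop is written for clarity; a vectorized array library would be much faster on real video.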
