Video construction method and system

A video construction method and system, applicable to TV system components, televisions, color televisions, and the like. The invention addresses two problems: running time on terminal equipment struggles to meet user needs, and the amount of computation is large. Its effect is to reduce the amount of computation, memory usage, and workload.

Examples

Embodiment 1

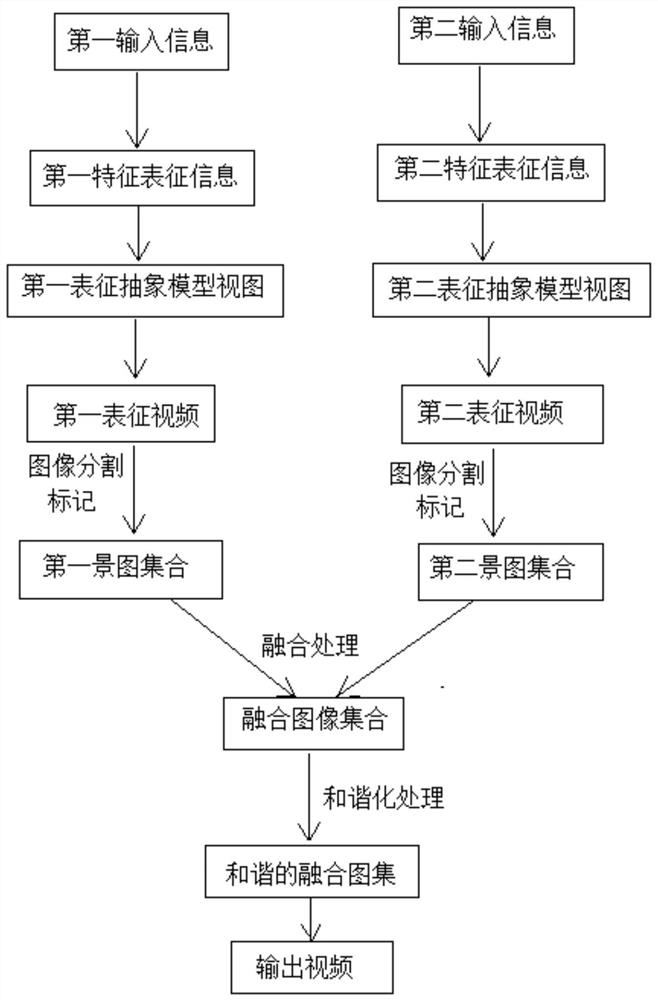

[0044] As shown in Figure 1, this embodiment provides a video construction method comprising the following steps:

[0045] Step 1: perform feature conversion on the first input information to obtain first feature representation information for the first input information;

[0046] Step 2: match the first feature representation information obtained in Step 1 against the abstract model library to generate a first representation abstract model view based on the first input information;

[0047] Step 3: the video generation algorithm model performs video generation on the first representation abstract model view generated in Step 2, producing a first representation video for the first input information;

[0048] Step 4: perform feature conversion on the second input information to obtain second feature representation information for the second input information; match the second feature representation information against the abstract model library to generate a second repre...
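Steps 2 and 3 can be illustrated for a single input with the minimal sketch below. The patent does not state how feature representation information is matched against the abstract model library, so this sketch assumes nearest-neighbor matching by cosine similarity. The library contents (`LIBRARY` and its entry names), the helper names `match_abstract_model` and `build_representation_video`, and the `generate_video` callable standing in for the video generation algorithm model are all hypothetical.

```python
import numpy as np

# Hypothetical abstract model library: model name -> reference feature vector.
# Entries and vectors are illustrative only, not taken from the patent.
LIBRARY = {
    "walking_figure": np.array([0.9, 0.1, 0.0]),
    "grassland": np.array([0.1, 0.9, 0.2]),
    "sky": np.array([0.0, 0.2, 0.9]),
}


def match_abstract_model(feature: np.ndarray, library: dict) -> str:
    """Assumed matching rule: return the entry with the highest cosine similarity."""
    f = feature / np.linalg.norm(feature)
    best_name, best_score = None, -np.inf
    for name, ref in library.items():
        score = float(f @ (ref / np.linalg.norm(ref)))
        if score > best_score:
            best_name, best_score = name, score
    return best_name


def build_representation_video(feature, library, generate_video):
    """Step 2 (match) then Step 3 (generate) for one input.

    `generate_video` is a placeholder for the video generation algorithm model.
    """
    view = match_abstract_model(feature, library)
    return generate_video(view)
```

For example, `match_abstract_model(np.array([0.8, 0.2, 0.1]), LIBRARY)` selects `"walking_figure"`. Because each input is processed independently, Step 4 simply repeats the same two calls for the second input.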

Embodiment 2

[0062] This embodiment is described further using scene video construction in an animation production task as an example.

[0063] In this embodiment, the animation scene "characters walking on a grassland" is used as an example. An initial image and a text description serve as the input information, an abstract model library in an ink-wash painting style is used, and video generation is performed with a generative adversarial network. Image segmentation, background marking, and image harmonization are performed by a neural network with an encoder-decoder (codec) structure, and each frame of the video is fused by an image fusion method based on a deep neural network.
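In the embodiment, per-frame fusion is carried out by a deep-neural-network fusion method whose details are not given. As a much simpler stand-in, the sketch below shows mask-based alpha compositing, where a segmentation mask such as one produced by the codec-structure network decides per pixel whether the character frame or the background frame is kept. The function names `fuse_frame` and `fuse_video` and the array layout are assumptions for illustration only.

```python
import numpy as np


def fuse_frame(foreground, background, mask):
    """Alpha-composite one frame (stand-in for the patent's DNN-based fusion).

    foreground, background: float arrays of shape (H, W, 3) in [0, 1]
    mask: float array of shape (H, W) in [0, 1]; 1 keeps the foreground pixel,
          0 keeps the background pixel, values in between blend the two.
    """
    alpha = mask[..., np.newaxis]  # broadcast the mask over the color channels
    return alpha * foreground + (1.0 - alpha) * background


def fuse_video(fg_frames, bg_frames, masks):
    """Fuse two equally long frame sequences frame by frame."""
    if not (len(fg_frames) == len(bg_frames) == len(masks)):
        raise ValueError("frame sequences must have the same length")
    return [fuse_frame(f, b, m) for f, b, m in zip(fg_frames, bg_frames, masks)]
```

A learned fusion network would typically replace the fixed linear blend with a model that also corrects color and lighting mismatches, which is the role the embodiment assigns to image harmonization.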

[0064] Step S1: use the first original hand-drawn character image and its descriptive text as input information, and perform feature conversion to obtain feature representation information for the character;

...
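Step S1 combines an image and its description into one feature representation, but the patent does not disclose the feature conversion itself. The sketch below is a hypothetical, non-learned stand-in that only shows the shape of the idea: a per-channel color histogram for the image and a hashed bag-of-words vector for the text, concatenated and L2-normalized. The function names, bin count, and vector sizes are assumptions; a real system would more likely use learned image and text encoders.

```python
import zlib

import numpy as np


def image_features(image, bins=4):
    """Per-channel intensity histogram of an (H, W, 3) uint8 image, each channel summing to 1."""
    feats = []
    for c in range(3):
        hist, _ = np.histogram(image[..., c], bins=bins, range=(0, 256))
        feats.append(hist / hist.sum())
    return np.concatenate(feats)


def text_features(text, dim=16):
    """Hashed bag-of-words; crc32 is used because it is deterministic across runs."""
    vec = np.zeros(dim)
    for token in text.lower().split():
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    return vec


def feature_conversion(image, text):
    """Concatenate image and text features (3*4 + 16 = 28 values) and L2-normalize."""
    joint = np.concatenate([image_features(image), text_features(text)])
    norm = np.linalg.norm(joint)
    return joint / norm if norm > 0 else joint
```

The output of `feature_conversion` has the same form as the vectors matched against the library in Embodiment 1, so the two sketches can be chained.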

Embodiment 3

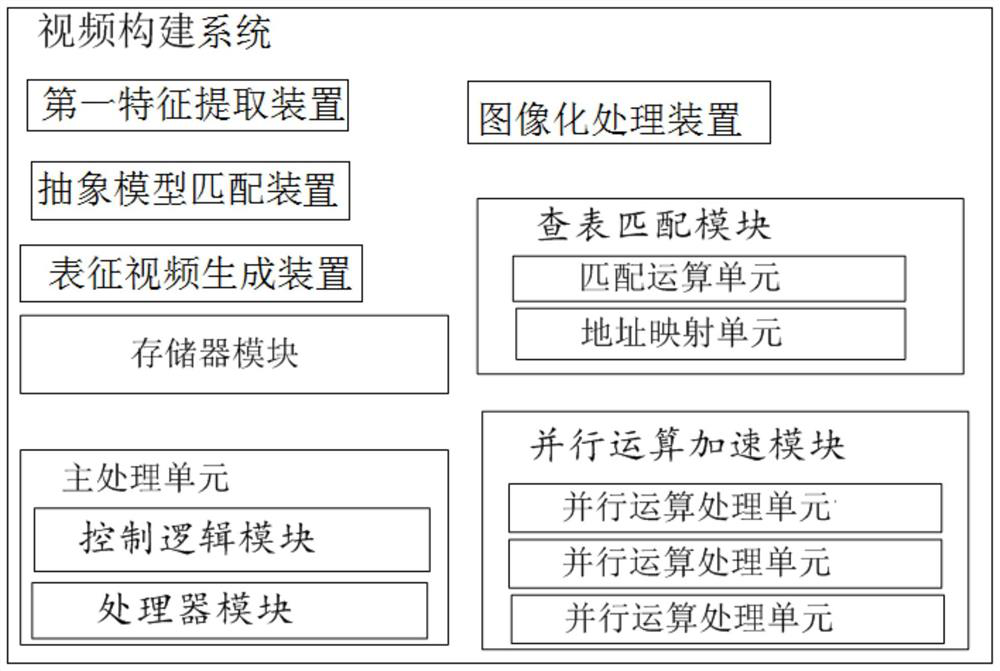

[0073] As shown in Figure 2, the video construction system provided in this embodiment includes a first feature extraction device, an abstract model matching device, a characterization video generation device, an image processing device, a fusion device, and a computing device;

[0074] The first feature extraction device performs feature conversion on each of a plurality of input information items to obtain feature representation information for each item;

[0075] The abstract model matching device matches the feature representation information of each input item against the abstract model library and generates a representation abstract model view for each input item;

[0076] The characterization video generation device inputs the representation abstract model view of each input item into the video generation algorithm model to generate the corresponding representation videos;

[0077] The image pro...
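The device chain above can be sketched as a pipeline in which each device is a plain callable. The descriptions of the image processing and fusion devices are truncated here, so their roles in this sketch (per-video frame processing, then fusing all representation videos into one) are assumptions inferred from the device names and from Embodiment 2. The computing device is not modeled. The class name `VideoConstructionSystem` and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class VideoConstructionSystem:
    """Hypothetical wiring of the devices listed in [0073]; each device is a callable."""
    extract_features: Callable[[Any], Any]             # first feature extraction device
    match_model: Callable[[Any], Any]                  # abstract model matching device
    generate_video: Callable[[Any], List[Any]]         # characterization video generation device
    process_frames: Callable[[List[Any]], List[Any]]   # image processing device (role assumed)
    fuse: Callable[[List[List[Any]]], List[Any]]       # fusion device (role assumed)

    def run(self, inputs: List[Any]) -> List[Any]:
        # Each input passes through extraction -> matching -> generation independently.
        videos = [self.generate_video(self.match_model(self.extract_features(x))) for x in inputs]
        # Assumed: process each representation video, then fuse them into one video.
        processed = [self.process_frames(v) for v in videos]
        return self.fuse(processed)


# Usage with toy callables: frames are strings, and fusion joins matching frames.
system = VideoConstructionSystem(
    extract_features=str.upper,
    match_model=lambda f: f"view:{f}",
    generate_video=lambda v: [v, v],
    process_frames=lambda frames: frames,
    fuse=lambda videos: [" + ".join(parts) for parts in zip(*videos)],
)
result = system.run(["hero", "grass"])
```

Modeling devices as injected callables keeps the sketch agnostic about the concrete networks (GAN, encoder-decoder, fusion network) that the embodiments plug into each stage.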
