Neural network model training method, device, and storage medium

A neural network model training method, together with a corresponding device and storage medium, that addresses problems such as teacher network knowledge not being included and knowledge transfer being incomplete.

Example 1

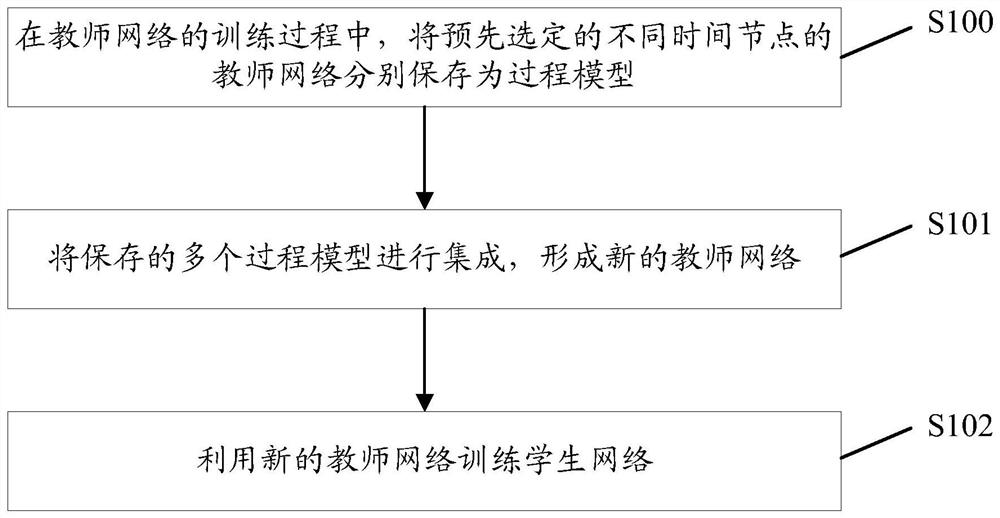

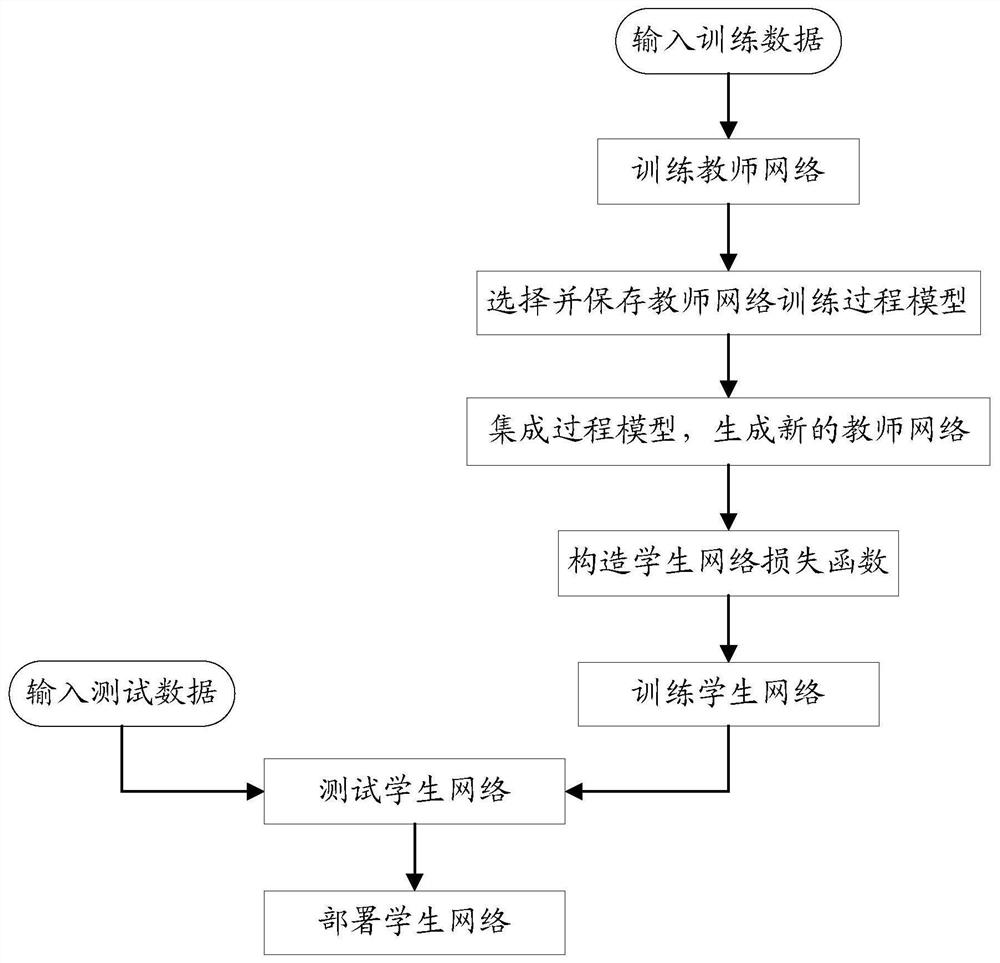

[0087] The following uses Example 1 to illustrate the neural network model training method in the embodiment of the present application:

[0088] Example 1

[0089] Take image classification as the application scenario. The benchmark data set is CIFAR-100, with the training and test sets following the standard split of 50,000 and 10,000 images respectively. The evaluation criterion is top-1 accuracy. The teacher network is chosen from ResNet18, ResNet50, and DenseNet121; the student network is chosen from MobileNetV2 and ShuffleNetV2. The classifier is a linear classifier, and the data augmentation strategy is random cropping and horizontal flipping.
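The top-1 accuracy criterion named above can be illustrated with a minimal, framework-free sketch. The function name `top1_accuracy` and the toy scores are hypothetical and are not taken from the application; in a PyTorch pipeline the same quantity would normally be computed on tensors.

```python
from typing import Sequence


def top1_accuracy(logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Return the fraction of samples whose highest-scoring class is the true label."""
    if len(logits) != len(labels):
        raise ValueError("logits and labels must have the same length")
    if not labels:
        raise ValueError("need at least one sample")
    correct = 0
    for scores, label in zip(logits, labels):
        # Index of the largest score is the predicted class.
        predicted = max(range(len(scores)), key=scores.__getitem__)
        if predicted == label:
            correct += 1
    return correct / len(labels)


# Toy example with 3 samples and 3 classes (illustrative values only).
scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.2, 0.6]]
truth = [1, 2, 2]
print(top1_accuracy(scores, truth))
```

Here the first and third samples are classified correctly and the second is not, so two of three predictions match.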

[0090] The main parameters are set as follows:

[0091] The batch size is 128, the number of iterations is 200, the optimizer is Adam, the implementation framework is PyTorch, and the model is trained on a Titan Xp graphics card. Intermediate (process) models are saved at integer multiples of 20 epo...
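The settings above can be gathered into a small configuration sketch. Two points are assumptions rather than statements from the application: that the 200 "iterations" are training epochs, and that the truncated "20 epo..." refers to a save interval of 20 epochs. `TrainConfig` and `checkpoint_epochs` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TrainConfig:
    batch_size: int = 128
    num_epochs: int = 200    # assumption: the "number of iterations" counts epochs
    optimizer: str = "Adam"
    save_every: int = 20     # assumption: the truncated "20 epo..." means 20 epochs


def checkpoint_epochs(cfg: TrainConfig) -> List[int]:
    """List the 1-based epochs at which an intermediate model would be saved."""
    return [e for e in range(1, cfg.num_epochs + 1) if e % cfg.save_every == 0]


cfg = TrainConfig()
print(checkpoint_epochs(cfg))
```

Under these assumptions the schedule yields ten saved models, at epochs 20, 40, and so on up to 200.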
