Multi-sensor fusion SLAM algorithm and system thereof

A multi-sensor fusion algorithm technology, applied in radio wave measurement systems, satellite radio beacon positioning systems, instruments, etc., that produces reliable positioning results and overcomes the limitations of a single sensor.

Examples

Embodiment 1

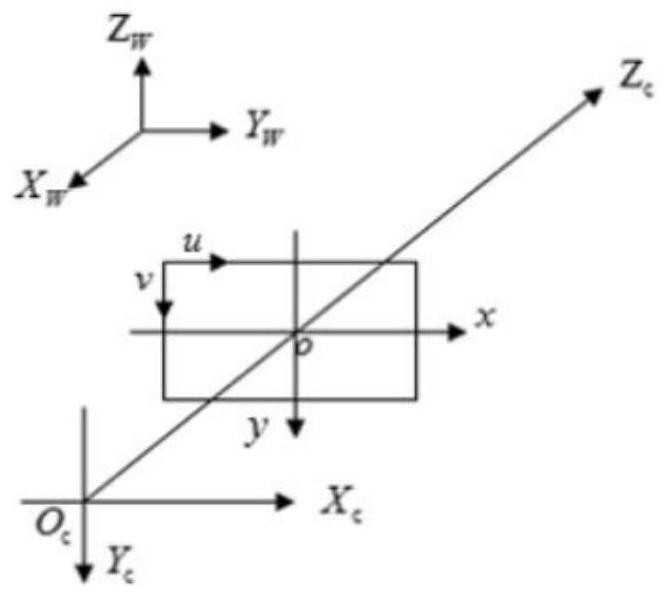

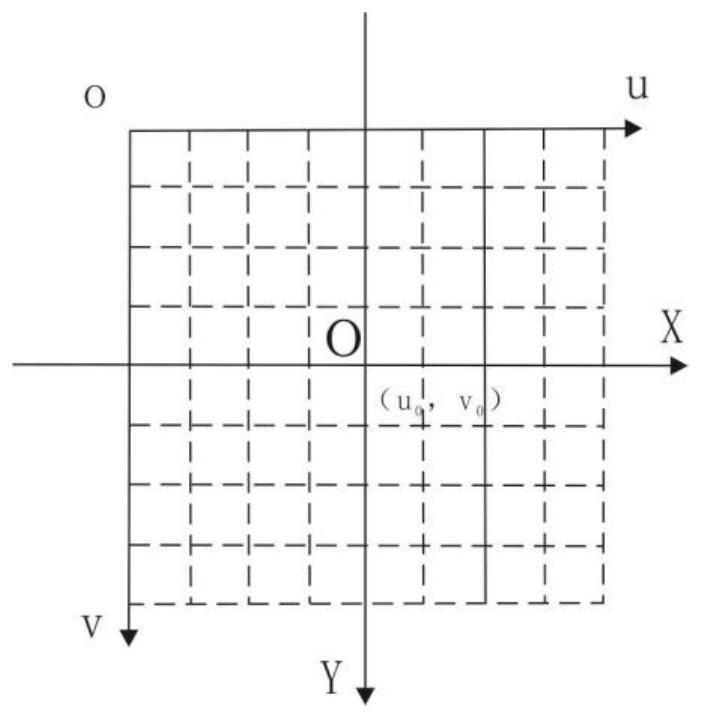

[0039] Referring to Figure 1, this embodiment proposes a SLAM fusion algorithm based on multiple types of cameras. It overcomes the bottleneck of relying on a single type of camera while keeping hardware cost low: only three groups of cameras are needed to cover multiple scenarios, which removes the application restrictions of a single camera type and meets the requirements of a vehicle's advanced driver assistance system (ADAS). GPS and an IMU are combined into high-precision inertial navigation; with access to differential RTK corrections, positioning accuracy can reach the centimeter level. A binocular camera plus IMU can provide positioning when the GPS signal is weak and the scene is rich in texture. If the texture is not rich enough, vision cannot provide reliable information, and for a short period the system can rely on IMU positioning alone. Because various scenarios are considered and multiple data sources are fused, the positioning results are reliable and can solve the...
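The scenario logic in this paragraph (RTK-corrected GPS+IMU when satellites are available, binocular camera+IMU when GPS is weak but texture is rich, IMU alone only for short vision outages) can be illustrated as a simple source-selection rule. This is a minimal sketch under stated assumptions: the `SensorStatus` fields, the feature-count threshold of 100, and the 2-second IMU-only limit are hypothetical values chosen for illustration, not parameters taken from the patent.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    RTK_GPS_IMU = "GPS+IMU with RTK"
    GPS_IMU = "GPS+IMU"
    STEREO_IMU = "binocular camera+IMU"
    IMU_ONLY = "IMU dead reckoning"
    UNAVAILABLE = "no reliable source"


@dataclass
class SensorStatus:
    gps_good: bool             # GPS signal strong enough for a valid fix
    rtk_fixed: bool            # differential RTK correction is available
    feature_count: int         # tracked visual feature points (texture richness proxy)
    seconds_since_good: float  # time since the last reliable GPS or visual update


# Hypothetical thresholds, for illustration only.
MIN_FEATURES = 100
MAX_IMU_ONLY_SECONDS = 2.0


def select_source(s: SensorStatus) -> Source:
    """Pick a positioning source following the scenario priorities above."""
    if s.gps_good and s.rtk_fixed:
        return Source.RTK_GPS_IMU   # centimeter-level accuracy with RTK
    if s.gps_good:
        return Source.GPS_IMU
    if s.feature_count >= MIN_FEATURES:
        return Source.STEREO_IMU    # weak GPS, rich texture
    if s.seconds_since_good <= MAX_IMU_ONLY_SECONDS:
        return Source.IMU_ONLY      # IMU drift grows, so only short outages
    return Source.UNAVAILABLE
```

A rule-based cascade is used here only because it mirrors the prose directly; a real fusion back end would typically weight all available measurements (for example in a filter or factor graph) rather than switch between them.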

Embodiment 2

[0114] Referring to Figures 7-9, this embodiment further explains that the hardware synchronization module 200 is implemented with a synchronous-exposure hardware circuit and a GPS time correction module. The time information receiving module of the single-chip microcomputer receives the time information and time pulse from the GPS time receiver; the RTC (real-time clock) chip is connected to the RTC module of the single-chip microcomputer; the exposure signal control module of the single-chip microcomputer controls the exposure of the four camera channels; and the feedback signals of the four camera channels are connected to the feedback signal detection module of the single-chip microcomputer. It can be seen that this embodiment uses the feedback signals of three camera channels.
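The patent does not describe how the GPS time pulse is used to correct the local clock. One common approach, shown here only as an assumption, is to latch a free-running local tick counter at each time pulse, pair it with the GPS time reported for that pulse, and map later events (such as camera feedback edges) onto GPS time. The class and method names below are hypothetical, and the sketch assumes the time message gives the GPS time of the pulse it accompanies.

```python
class GpsDisciplinedClock:
    """Hypothetical sketch: map a free-running local tick counter to GPS time.

    At each GPS time pulse, the microcontroller latches its local tick count,
    and the accompanying time message gives the GPS time of that pulse.
    Two consecutive pulses also yield the local oscillator's actual rate.
    """

    def __init__(self, nominal_hz: float):
        self.hz = nominal_hz   # estimated local ticks per second
        self.ref_tick = None   # local tick latched at the most recent pulse
        self.ref_time = None   # GPS time (seconds) of the most recent pulse

    def on_pps(self, local_tick: int, gps_time_s: float) -> None:
        """Record a time pulse and re-estimate the local clock rate."""
        if self.ref_tick is not None and gps_time_s > self.ref_time:
            self.hz = (local_tick - self.ref_tick) / (gps_time_s - self.ref_time)
        self.ref_tick = local_tick
        self.ref_time = gps_time_s

    def to_gps_time(self, local_tick: int) -> float:
        """Convert a local tick (e.g. a camera feedback edge) to GPS time."""
        if self.ref_tick is None:
            raise RuntimeError("no GPS time pulse received yet")
        return self.ref_time + (local_tick - self.ref_tick) / self.hz
```

For example, with a nominal 1 MHz counter that actually runs at 1,000,050 ticks per second, two pulses one second apart let the sketch correct the rate, so a feedback edge latched half a second after the second pulse is stamped at that pulse's GPS time plus 0.5 s.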

[0115] The specific process is as follows: when the single-chip microcomputer receives an external exposure-request signal, its three output pins act immediately and then apply a delay. Since the camera exposure action h...
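The paragraph is truncated before it explains why the pins delay. One plausible reading, stated here purely as an assumption, is that each camera has a different, fixed latency between its trigger and the actual start of exposure, so each pin is delayed to make the exposures coincide. Under that assumption, the delay for pin $i$ is $d_i = \max_j L_j - L_i$, where $L_i$ is that camera's trigger-to-exposure latency. The function name and latency values below are hypothetical.

```python
from typing import Dict


def trigger_delays_us(latencies_us: Dict[str, int]) -> Dict[str, int]:
    """Per-pin trigger delays (microseconds) so all exposures start together.

    Assumes each camera's trigger-to-exposure latency is known and constant.
    The slowest camera is triggered immediately; faster cameras wait.
    """
    if not latencies_us:
        return {}
    slowest = max(latencies_us.values())
    return {pin: slowest - lat for pin, lat in latencies_us.items()}
```

With hypothetical latencies of 120, 200, and 350 µs for the monocular, binocular, and RGB-D cameras, the pins would be delayed by 230, 150, and 0 µs respectively. The camera feedback signals could then be used to verify that the measured exposure start times actually line up.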

Embodiment 3

[0132] Referring to Figures 10-11, this embodiment proposes a SLAM fusion system based on multiple types of cameras, on which the method of the above embodiments can be implemented. Its system framework includes: image processing, in which the monocular camera is mainly responsible for image correction and ORB feature point extraction, the binocular camera additionally computes the depth map, and the depth camera additionally aligns the color image with the depth image; a decision-making unit, which mainly selects the appropriate camera combination according to the scene; and a data fusion unit, which fuses the images from multiple cameras. Specifically, the system includes a camera module 100, a hardware synchronization module 200, and a SLAM fusion module 300. The camera module 100 consists of multiple types of cameras arranged on the vehicle body 400 parallel to the horizontal plane, and includes a monocular camera module 101, a binocular camera module 102, RGB-D c...
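For the binocular camera, a depth map is conventionally obtained from the disparity of a rectified stereo pair by triangulation, $Z = fB/d$, where $f$ is the focal length in pixels, $B$ the baseline in meters, and $d$ the disparity in pixels. The patent does not spell out its depth computation, so the sketch below shows only this standard relation; the function name and the `min_disp` cutoff for invalid pixels are hypothetical, and disparity estimation itself (e.g. block matching) is out of scope.

```python
from typing import List, Optional


def disparity_to_depth(
    disparity: List[List[float]],
    focal_px: float,
    baseline_m: float,
    min_disp: float = 0.1,
) -> List[List[Optional[float]]]:
    """Convert a disparity map (pixels) to a depth map (meters) via Z = f*B/d.

    disparity: 2-D disparity map from a rectified stereo pair.
    Pixels with disparity <= min_disp have no reliable match (or lie at
    near-infinite range) and are marked invalid with None.
    """
    fb = focal_px * baseline_m
    return [[fb / d if d > min_disp else None for d in row] for row in disparity]
```

For example, with $f = 800$ px and $B = 0.125$ m, a disparity of 10 px corresponds to a depth of 10 m, and halving the disparity doubles the depth, which is why stereo depth accuracy degrades quickly at long range.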
