Attack judgment method for fooling explainable algorithm of deep neural network

Keywords: deep neural network, interpretation algorithm. Field of application: attacks that fool the interpretability (explanation) algorithms of deep neural networks.

Embodiment Construction

[0059] The present invention is further described below with reference to the drawings and embodiments.

[0060] An example implementation of the complete method described in the summary of the invention, together with its implementation details, is as follows:

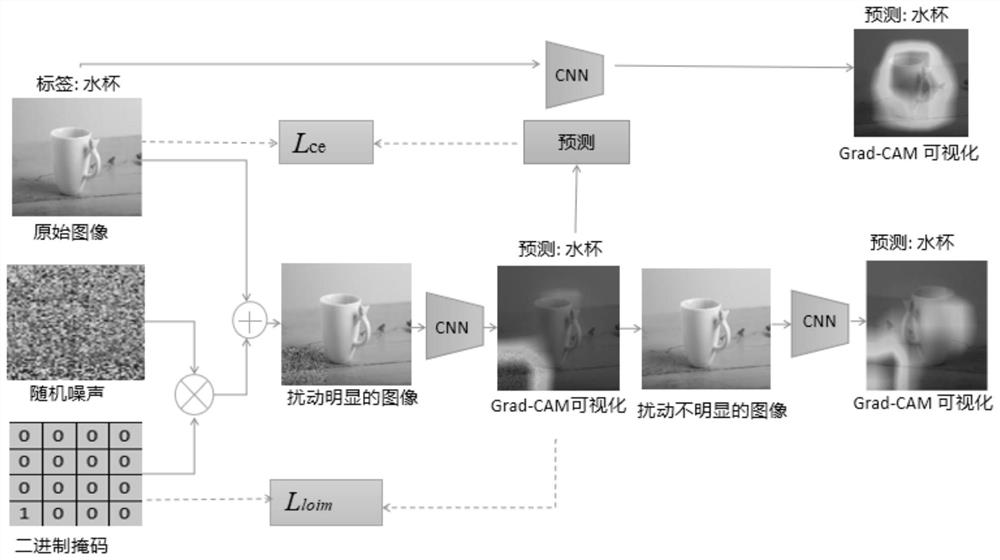

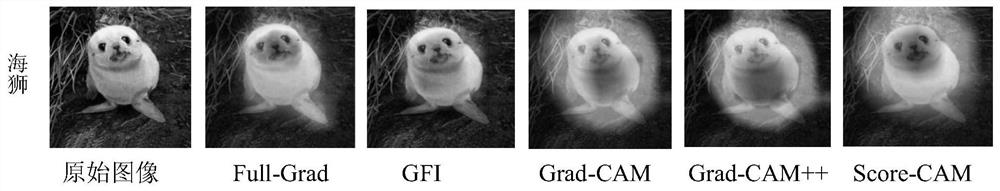

[0061] The present invention is implemented on a VGG19 deep neural network model trained on the ImageNet dataset, with Grad-CAM as the example interpretation algorithm. The details are as follows:

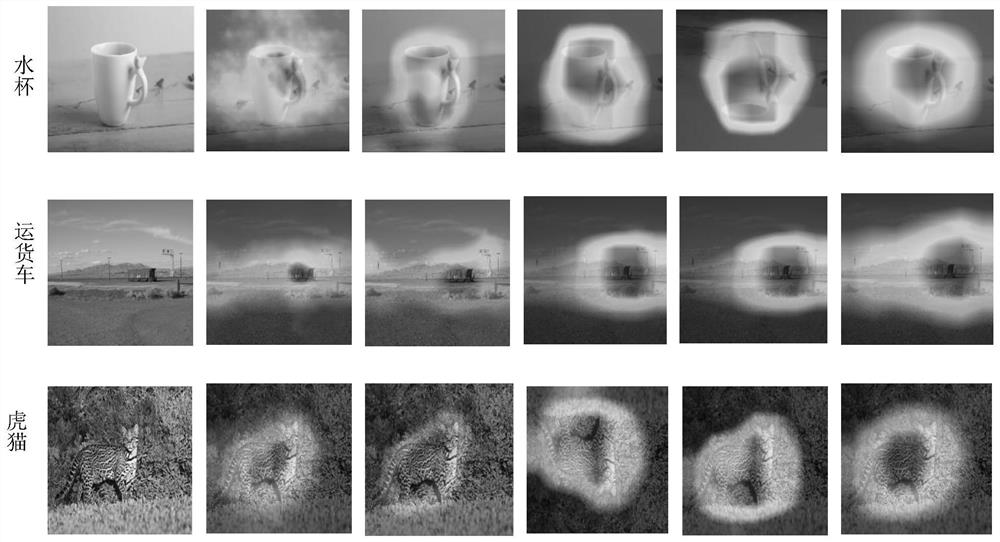
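For reference, the interpretation algorithm being attacked, Grad-CAM, is commonly defined as follows: each feature map A^k of a chosen convolutional layer (usually the last one) is weighted by the spatial average of the gradient of the target class score y^c with respect to that map, the weighted maps are summed, and a ReLU keeps only positive evidence. The sketch below is an illustration under stated assumptions, not the patent's implementation: the function name `grad_cam` is hypothetical, the activations and gradients are assumed to be already extracted from VGG19 (for example via framework hooks, not shown), and the usual upsampling of the map to the input resolution is omitted.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Compute a Grad-CAM heat map (hypothetical helper, illustration only).

    activations: feature maps A^k of the chosen conv layer, shape (K, H, W).
    gradients:   dy^c / dA^k for the target class c, same shape (K, H, W).
    Returns an (H, W) map scaled to [0, 1] (all zeros if nothing is positive).
    """
    if activations.shape != gradients.shape:
        raise ValueError("activations and gradients must have the same shape")
    # Channel weights alpha_k^c: global average pooling of the gradients over H and W.
    weights = gradients.mean(axis=(1, 2))  # shape (K,)
    # Weighted sum over channels, then ReLU to keep positive contributions only.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)  # shape (H, W)
    # Scale to [0, 1] for visualization, guarding against an all-zero map.
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

Because the map depends only on activations and their gradients, an attack on Grad-CAM can change the explanation by perturbing the input so that these quantities shift, which is the setting the following steps work in.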

[0062] 1) Generate randomly initialized noise and a binary mask. For a single image containing a single target object, as shown in the first column of Figure 2, set the values at the position of the corresponding square region to 0 and all other regions to 1. For a single image containing multiple targets, as shown in the first column of Figure 5, set the values at the positions of the two square regions to 0 and all other regions to 1.

[0063] 2) Multiply the noise and the binary mask, and...
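Steps 1) and 2), as far as the text goes, can be sketched as below. This is a minimal illustration under assumptions, not the patent's code: the helper names (`make_binary_mask`, `random_noise`, `mask_noise`), the `(top, left, side)` square format, the uniform noise range [-1, 1) and the (H, W, C) layout are all hypothetical, since the source does not state square sizes, positions or the noise distribution. Only the multiplication of noise by mask is shown; whatever follows "and..." in step 2 is not reproduced.

```python
import numpy as np


def make_binary_mask(height, width, squares):
    """Return an (H, W) mask: 0 inside each square region, 1 elsewhere.

    squares: iterable of (top, left, side) tuples in pixel coordinates
    (hypothetical format). One square covers the single-target case,
    two squares the two-target case.
    """
    mask = np.ones((height, width), dtype=np.float32)
    for top, left, side in squares:
        mask[top:top + side, left:left + side] = 0.0
    return mask


def random_noise(height, width, channels=3, low=-1.0, high=1.0, seed=None):
    """Randomly initialized noise of shape (H, W, C); the uniform range is an assumption."""
    rng = np.random.default_rng(seed)
    return rng.uniform(low, high, size=(height, width, channels)).astype(np.float32)


def mask_noise(noise, mask):
    """Element-wise product of noise (H, W, C) and binary mask (H, W).

    The mask is broadcast over the channel axis, so the noise becomes 0 inside
    the square regions (mask == 0) and is unchanged elsewhere (mask == 1).
    """
    if noise.shape[:2] != mask.shape:
        raise ValueError("mask must match the spatial size of the noise")
    return noise * mask[..., np.newaxis]


if __name__ == "__main__":
    # Hypothetical 224x224 input (a common VGG19 input size) with two target squares.
    mask = make_binary_mask(224, 224, [(30, 30, 64), (120, 120, 64)])
    masked = mask_noise(random_noise(224, 224, seed=0), mask)
    print(masked.shape)
```

Broadcasting a single (H, W) mask over all channels keeps one mask per image regardless of the number of color channels, matching the description of the mask as a per-position 0/1 map.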
