Model training method, apparatus, device, and storage medium

A model training technology, applied in the field of deep learning

Embodiment Construction

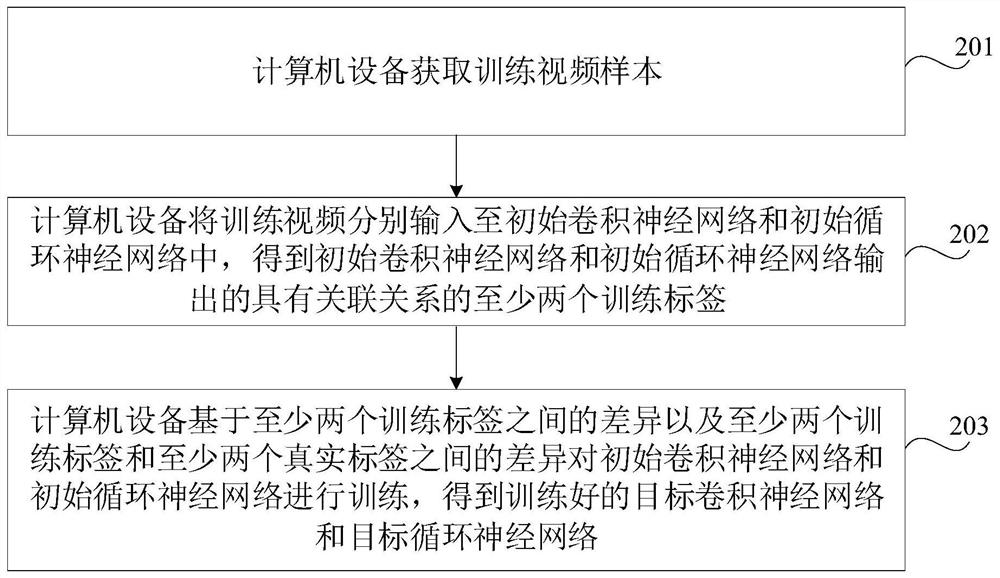

[0022] To make the purpose, technical solution, and advantages of the present application clearer, the implementations of the present application are described in further detail below with reference to the accompanying drawings.

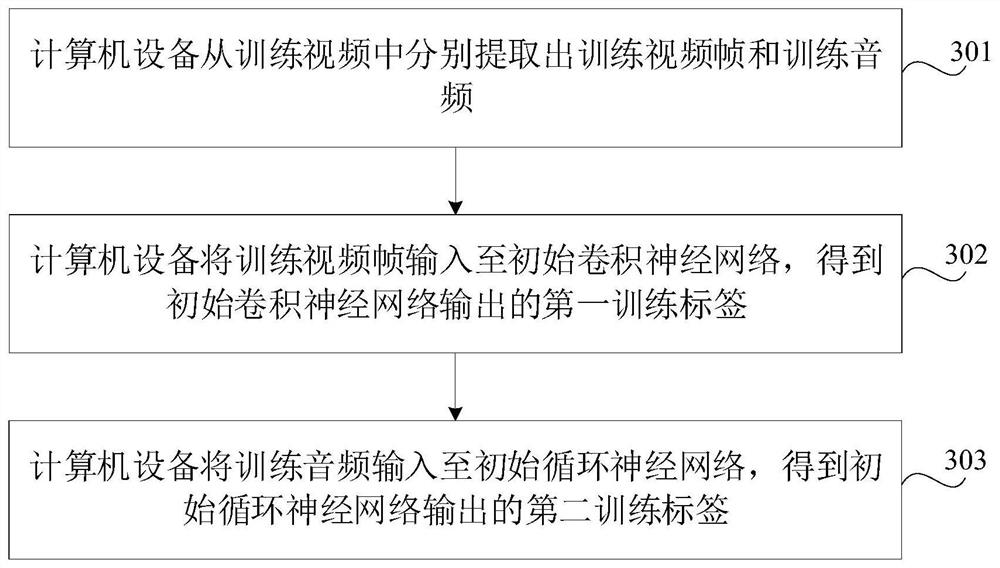

[0023] In practical applications, the features of video content that is not sequential can usually be recognized with a convolutional neural network. For example, the video frames in a video are not sequential in nature, so their features are usually recognized with a convolutional neural network. The features of sequential content in a video can usually be recognized with a recurrent neural network. For example, the audio contained in a video is sequential, so its features are usually recognized with a recurrent neural network.
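The distinction drawn in paragraph [0023] can be illustrated with a minimal sketch. It is not the method of the application: the helper names `conv2d_valid` and `rnn_final_state`, the weights, and the toy data are all hypothetical. The convolution looks at a single frame in isolation, while the recurrent cell carries a state across the sequence, so its result depends on the order of the samples.

```python
import math


def conv2d_valid(frame, kernel):
    """Slide `kernel` over `frame` (lists of lists) with no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(frame) - kh + 1
    out_w = len(frame[0]) - kw + 1
    return [
        [
            sum(frame[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def rnn_final_state(sequence, w_in=0.5, w_rec=0.9):
    """One-unit recurrent cell: h_t = tanh(w_in * x_t + w_rec * h_{t-1})."""
    h = 0.0
    for x in sequence:
        h = math.tanh(w_in * x + w_rec * h)
    return h


# Toy 3x3 "video frame" and 2x2 kernel (hypothetical values).
frame = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]
frame_features = conv2d_valid(frame, kernel)

# Toy "audio" sequence (hypothetical values); reversing the order
# of the samples changes the final recurrent state.
audio = [0.1, 0.4, -0.2, 0.8]
forward_state = rnn_final_state(audio)
reversed_state = rnn_final_state(audio[::-1])
```

Here `frame_features` is computed from one frame only, whereas `forward_state` and `reversed_state` differ because the recurrent cell is sensitive to sample order. A real system would use trained multi-channel layers from a deep learning framework rather than these hand-set weights.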

[0024] In related technologies, according to the features to be identified, the convolutional neural network can be selected to iden...
