Faster-RCNN target detection method based on FPGA

A target detection technology, applied in the field of intelligent recognition, that addresses the problem of slow recognition speed by accelerating faster-RCNN target detection.

Examples

Embodiment 1

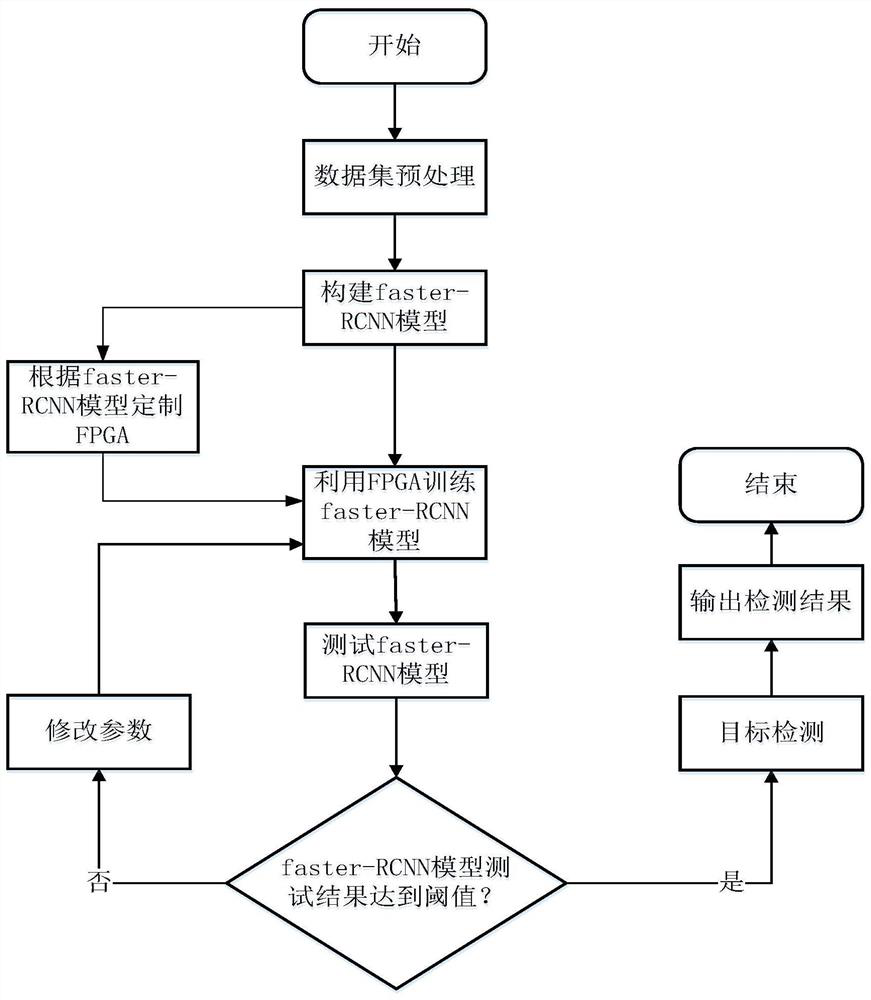

[0036] As shown in Figure 1, the present invention is an FPGA-based faster-RCNN object detection method, which comprises the following steps:

[0037] Step 1: Obtain an existing data set for target detection and preprocess the data set;

[0038] Step 2: Build a faster-RCNN model;

[0039] Step 3: Load the dataset from step 1 into the faster-RCNN model, and customize the FPGA according to the faster-RCNN model;

[0040] Step 4: Use the customized FPGA to train the faster-RCNN model in step 3;

[0041] Step 5: Set an average precision (AP) threshold and test the trained faster-RCNN model. If the test result is below the AP threshold, modify the parameters and repeat step 4, testing the training results again until the test result reaches the threshold;

[0042] Step 6: Input the picture to be detected, and use the trained faster-RCNN model for target recognition.
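
The retrain-and-test loop of steps 4 and 5 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patented implementation: `train_fn`, `eval_ap_fn`, and `adjust_fn` are hypothetical callables standing in for FPGA-based training, AP evaluation, and parameter modification, and the `max_rounds` cap is an added safeguard that the source does not mention.

```python
from typing import Any, Callable, Dict, Tuple


def train_until_ap_threshold(
    train_fn: Callable[[Dict[str, Any]], Any],
    eval_ap_fn: Callable[[Any], float],
    adjust_fn: Callable[[Dict[str, Any], float], Dict[str, Any]],
    params: Dict[str, Any],
    ap_threshold: float,
    max_rounds: int = 10,
) -> Tuple[Any, float, int]:
    """Repeat training (step 4) and testing (step 5) until AP reaches the threshold.

    All three callables are hypothetical placeholders. max_rounds is an
    assumption added here so the loop always terminates.
    """
    for round_idx in range(1, max_rounds + 1):
        model = train_fn(params)      # step 4: train with the current parameters
        ap = eval_ap_fn(model)        # step 5: test the training result
        if ap >= ap_threshold:        # threshold reached: stop retraining
            return model, ap, round_idx
        params = adjust_fn(params, ap)  # below threshold: modify the parameters
    raise RuntimeError(f"AP threshold {ap_threshold} not reached in {max_rounds} rounds")
```

With toy stand-ins, for example a `train_fn` that copies the parameters and an `eval_ap_fn` that derives a score from them, the function returns the first model whose score meets the threshold together with the number of rounds it took.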

[0043] In...

Embodiment 2

[0046] This embodiment is a further description of the present invention.

[0047] The steps for building the faster-RCNN model in step 2 are:

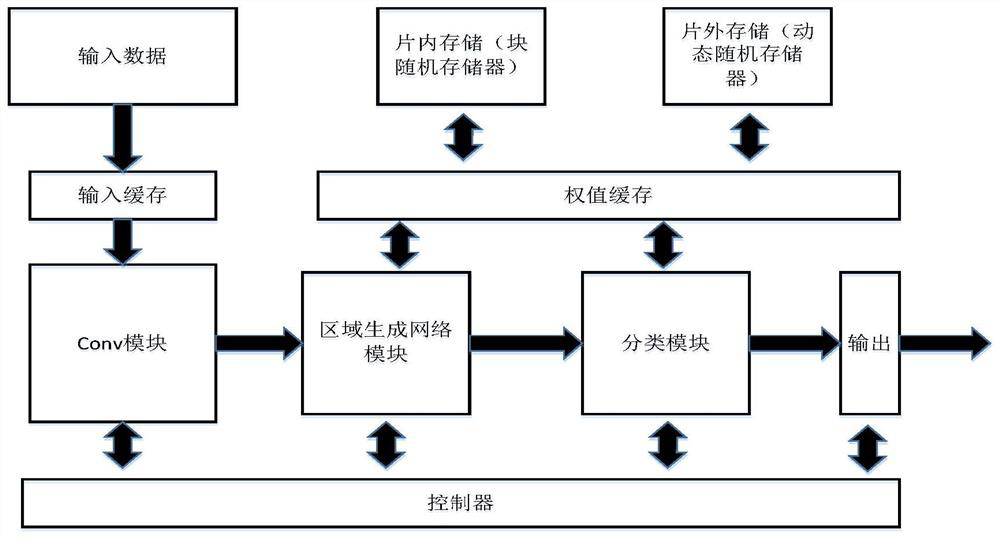

[0048] Step 21: Build the Conv layers, comprising convolution, ReLU, and pooling layers, which extract feature maps from the image;

[0049] Step 22: Build the region proposal network (RPN) layer and use it to generate detection boxes, that is, to make an initial extraction of the target candidate regions in the picture;

[0050] Step 23: Build the region-of-interest (RoI) pooling layer, which takes the feature maps from step 21 and the target candidate regions from step 22 and combines them to extract candidate feature maps;

[0051] Step 24: Build the classification layer, which uses bounding-box regression to obtain the final precise position of each detection box and determines the target category from the candidate feature maps.
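
To illustrate step 23, the following is a minimal pure-Python sketch of RoI max pooling on a single-channel feature map. It assumes the candidate region is already expressed in feature-map coordinates with inclusive corners and that max pooling over a fixed output grid is used; the function name `roi_max_pool` and the bin-splitting rule are illustrative choices, not details taken from the patent.

```python
from typing import List, Sequence, Tuple


def _ceil_div(n: int, d: int) -> int:
    """Integer ceiling division for non-negative n and positive d."""
    return -(-n // d)


def roi_max_pool(
    feature_map: Sequence[Sequence[float]],
    roi: Tuple[int, int, int, int],
    out_h: int,
    out_w: int,
) -> List[List[float]]:
    """Max-pool one candidate region into a fixed out_h x out_w grid.

    roi is (x1, y1, x2, y2) with inclusive corners in feature-map
    coordinates (an assumption of this sketch).
    """
    x1, y1, x2, y2 = roi
    roi_h, roi_w = y2 - y1 + 1, x2 - x1 + 1
    if roi_h < 1 or roi_w < 1 or out_h < 1 or out_w < 1:
        raise ValueError("RoI and output grid must be non-empty")

    pooled = []
    for i in range(out_h):
        # Floor the bin start and ceil the bin end so every bin is non-empty.
        r0 = y1 + (i * roi_h) // out_h
        r1 = y1 + _ceil_div((i + 1) * roi_h, out_h)
        row = []
        for j in range(out_w):
            c0 = x1 + (j * roi_w) // out_w
            c1 = x1 + _ceil_div((j + 1) * roi_w, out_w)
            row.append(max(feature_map[r][c]
                           for r in range(r0, r1)
                           for c in range(c0, c1)))
        pooled.append(row)
    return pooled
```

Whatever the size of the candidate region, the output grid has a fixed shape, which is what lets the later classification layer accept regions of different sizes.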

[0052] In this embodiment, the faster-RCNN model includes Conv layers, a region proposal network (RPN) layer,...
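
The bounding-box regression of step 24 can be illustrated by decoding predicted offsets into a refined box. The sketch below assumes the center-size offset parameterization commonly used in Faster R-CNN implementations; the patent text does not specify one, and `decode_box` is a hypothetical helper name.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def decode_box(proposal: Box, deltas: Tuple[float, float, float, float]) -> Box:
    """Apply regression offsets (dx, dy, dw, dh) to a candidate box.

    Assumed parameterization: dx and dy shift the box center in units of
    the proposal's width and height; dw and dh scale width and height in
    log space.
    """
    x1, y1, x2, y2 = proposal
    w, h = x2 - x1, y2 - y1
    cx, cy = x1 + 0.5 * w, y1 + 0.5 * h

    dx, dy, dw, dh = deltas
    new_cx = cx + dx * w          # shift the center horizontally
    new_cy = cy + dy * h          # shift the center vertically
    new_w = w * math.exp(dw)      # rescale the width
    new_h = h * math.exp(dh)      # rescale the height

    return (new_cx - 0.5 * new_w, new_cy - 0.5 * new_h,
            new_cx + 0.5 * new_w, new_cy + 0.5 * new_h)
```

All-zero offsets leave the candidate box unchanged, so the regression output can be read as a correction relative to the initial proposal.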
