Adaptive Fast Incremental Read-ahead Method for Wide Area Network File System

A file system and wide-area network technology in the field of adaptive fast incremental read-ahead, applied to the challenges of wide-area high-performance computing. It addresses shortcomings of existing approaches, such as low efficiency, handling only serial file reads, and neglecting read speed. The intended effects are reduced performance loss, increased speed, and a reduced number of times ...

Embodiment Construction

[0062] The present invention will be described in further detail below in conjunction with the accompanying drawings.

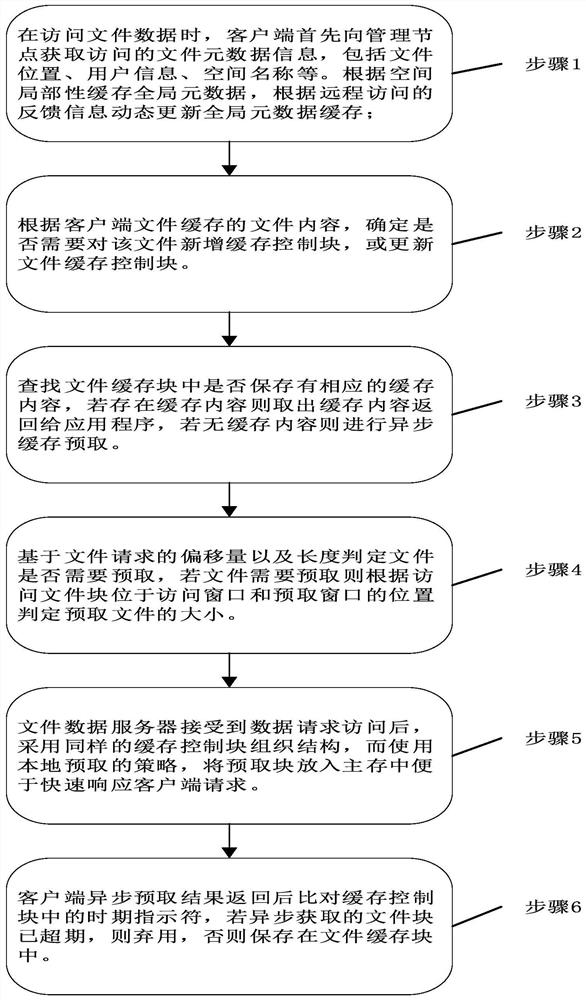

[0063] Figure 1 shows the implementation flowchart of the present invention: an adaptive incremental fast read-ahead method for wide-area network file systems, comprising the following steps:

[0064] 1) When accessing file data, the client first obtains the metadata of the accessed file from the management node, including file location, user information, and space name. The client caches global metadata according to spatial locality and dynamically updates the global metadata cache according to feedback from remote accesses;

[0065] 2) According to the file content in the client file cache, determine whether to add a cache control block for the file or to update the file's existing cache control block;

[0066] 3) Check whether the corresponding cache content is saved in the file cache block; if it is, take out the cache content and ...
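Steps 1) to 3) can be illustrated with a minimal Python sketch. It is not the patented implementation. The class and method names (`Client`, `CacheControlBlock`, `on_remote_feedback`, and so on) are hypothetical, and the two callables passed to `Client` stand in for remote calls to the management node and the data node. The excerpt is cut off before it says what happens after a cache lookup, so the miss path here (fetch remotely, then cache) is an assumption. Spatial-locality grouping of metadata is left out for brevity.

```python
from dataclasses import dataclass, field


@dataclass
class FileMetadata:
    # The three fields named in step 1; the string types are assumptions.
    location: str
    user: str
    space: str


@dataclass
class CacheControlBlock:
    # Hypothetical per-file bookkeeping: cached data keyed by block index.
    blocks: dict = field(default_factory=dict)
    hits: int = 0
    misses: int = 0


class Client:
    def __init__(self, fetch_metadata, fetch_block):
        # Placeholders for remote calls; not a real file system API.
        self._fetch_metadata = fetch_metadata
        self._fetch_block = fetch_block
        self.metadata_cache = {}
        self.control_blocks = {}

    def get_metadata(self, path):
        # Step 1: use the local metadata cache before asking the management node.
        if path not in self.metadata_cache:
            self.metadata_cache[path] = self._fetch_metadata(path)
        return self.metadata_cache[path]

    def on_remote_feedback(self, path, metadata):
        # Step 1, continued: refresh or drop an entry based on remote feedback.
        if metadata is None:
            self.metadata_cache.pop(path, None)
        else:
            self.metadata_cache[path] = metadata

    def read_block(self, path, index):
        meta = self.get_metadata(path)
        # Step 2: add a control block for a new file, or reuse the existing one.
        ccb = self.control_blocks.setdefault(path, CacheControlBlock())
        # Step 3: return cached content when the block is present.
        if index in ccb.blocks:
            ccb.hits += 1
            return ccb.blocks[index]
        # Assumed miss path: fetch from the remote location and cache the result.
        ccb.misses += 1
        data = self._fetch_block(meta.location, index)
        ccb.blocks[index] = data
        return data
```

With this sketch, reading the same block of the same file twice should contact the management node and the data node once each; the second read is served from the file's cache control block.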
