Hardware circuit design and method of data loading device for accelerating calculation of deep convolutional neural network and combining with main memory

This invention relates to main memory and deep convolution technology and applies to biological neural network models, neural architectures, physical implementations, and similar areas. It addresses wasted accelerator resources in data reuse, complex data segmentation and arrangement methods, and excessive coupling to the central processing unit. It can reduce space complexity, improve the utilization of computing resources and memory bandwidth, and simplify connection complexity.

Embodiment Construction

[0034] The present invention will be described in further detail below with reference to the accompanying drawings and examples.

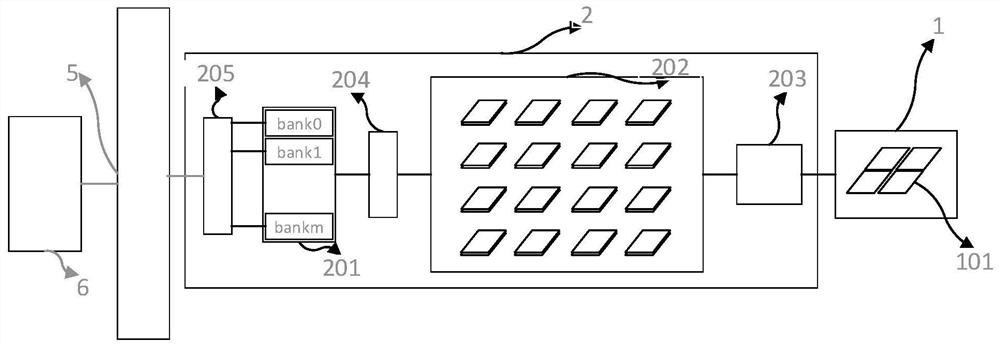

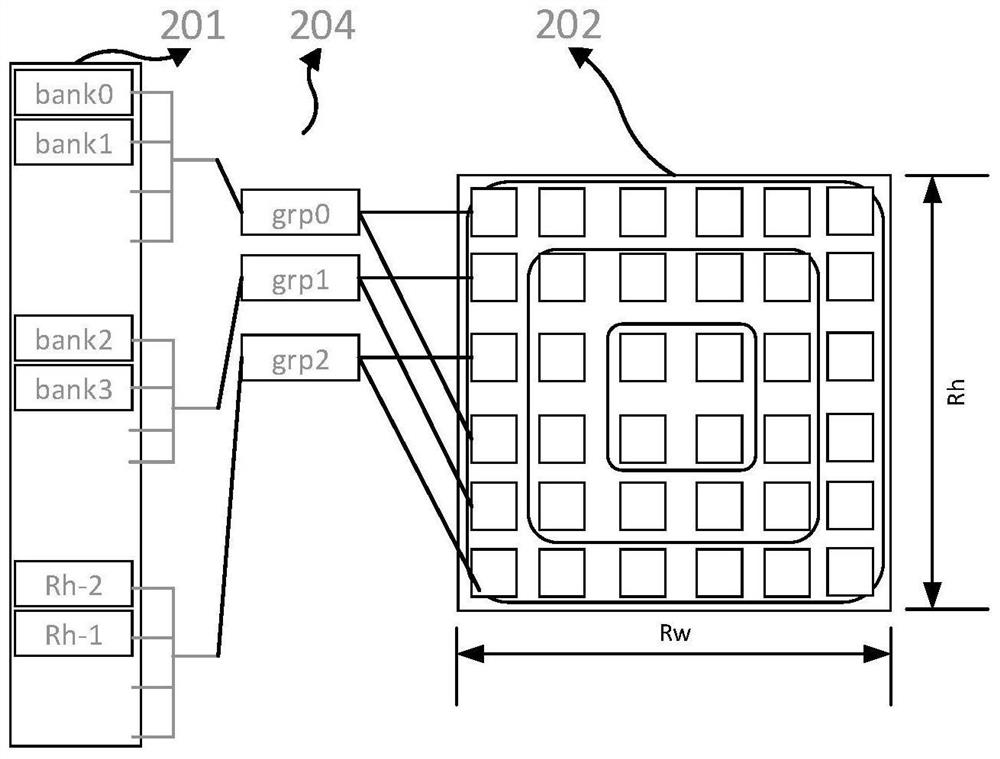

[0035] Figure 1 is a structural diagram of the data loading device combined with a main memory according to the present invention. The data loading device 2 includes:

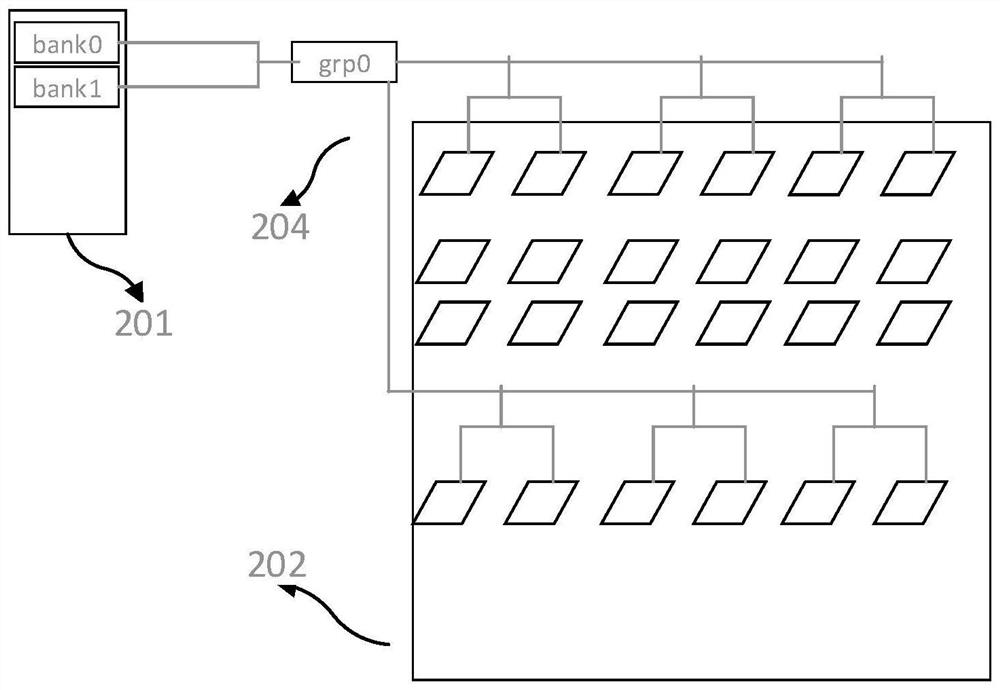

[0036] The tensor-type input cache random access controller 205 fuses, arranges, and converts the format of input data from main memory 6 and/or other memories, and then distributes the data to the partitioned areas of the input cache unit 201. Its working mode can be reconfigured by software;

[0037] The divisible input cache unit 201 is the local cache of the data loading device of the present invention. It consists of multiple storage pages whose design and storage scheme correspond to the dimensions of the input data and of the parallel input register array 202, and it supports the data format changes caused by software reconfiguration;

[0038] The tensor data loading de...
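Paragraph [0036] describes the tensor-type input cache random access controller 205 only at the functional level. The following is a minimal Python sketch of one possible reading, not the patented circuit. It assumes that "fusion" means concatenating tensors from several memories along the channel axis, that "data format conversion" means float to signed 8-bit fixed point, and that distribution sends channel `c` to partition `c % num_partitions`. All names (`fuse_channels`, `to_fixed_point`, `distribute`, `num_partitions`, `frac_bits`) are hypothetical; the two parameters stand in for the software-reconfigurable working mode.

```python
from typing import List

# Hypothetical tensor model: nested lists indexed [channel][row][col].
Tensor = List[List[List[float]]]


def fuse_channels(*sources: Tensor) -> Tensor:
    """Assumed 'fusion': concatenate tensors (same H and W) along the channel axis."""
    fused: Tensor = []
    for src in sources:
        fused.extend(src)
    return fused


def to_fixed_point(x: float, frac_bits: int = 4) -> int:
    """Assumed 'format conversion': signed 8-bit fixed point with saturation."""
    q = round(x * (1 << frac_bits))
    return max(-128, min(127, q))


def distribute(tensor: Tensor, num_partitions: int, frac_bits: int = 4) -> List[List[int]]:
    """Assumed distribution: channel c goes to partition c % num_partitions,
    stored row-major after conversion."""
    partitions: List[List[int]] = [[] for _ in range(num_partitions)]
    for c, channel in enumerate(tensor):
        target = partitions[c % num_partitions]
        for row in channel:
            target.extend(to_fixed_point(v, frac_bits) for v in row)
    return partitions


# Usage: two channels from "main memory 6" and one from "another memory".
main_mem = [[[0.5, 1.0], [1.5, 2.0]], [[-0.25, 0.0], [0.25, 0.75]]]
other_mem = [[[10.0, -10.0], [3.0, 0.125]]]
parts = distribute(fuse_channels(main_mem, other_mem), num_partitions=2)
print(parts)  # [[8, 16, 24, 32, 127, -128, 48, 2], [-4, 0, 4, 12]]
```

Changing `num_partitions` or `frac_bits` re-targets the same input to a different cache layout or numeric format without touching the data path, which is one way to model a mode "reconfigured by software".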
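Paragraph [0037] says the storage pages of the divisible input cache unit 201 are arranged to match the parallel input register array 202. A common way to achieve that, shown here as a minimal Python sketch under stated assumptions rather than as the patented design, is low-order interleaving: the number of pages is assumed to equal the width of register array 202, and linear element `i` is assumed to live at page `i % num_pages`, offset `i // num_pages`. An aligned parallel read then touches every page exactly once, so all registers can be filled in one access. The class `PagedInputCache` and its methods are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PagedInputCache:
    """Hypothetical model of divisible input cache unit 201.

    Assumption: num_pages equals the width of parallel input register array 202,
    and elements are low-order interleaved across pages.
    """
    num_pages: int
    page_depth: int
    pages: List[List[int]] = field(init=False)

    def __post_init__(self) -> None:
        # One independent storage page per register lane.
        self.pages = [[0] * self.page_depth for _ in range(self.num_pages)]

    def locate(self, index: int) -> Tuple[int, int]:
        """Map a linear element index to (page, offset)."""
        return index % self.num_pages, index // self.num_pages

    def write(self, index: int, value: int) -> None:
        page, offset = self.locate(index)
        self.pages[page][offset] = value

    def parallel_read(self, start: int) -> List[int]:
        """Fill all register lanes from one aligned row; each page is read once."""
        if start % self.num_pages != 0:
            raise ValueError("start must be a multiple of num_pages")
        offset = start // self.num_pages
        return [self.pages[p][offset] for p in range(self.num_pages)]


# Usage: 4 pages (one per assumed register lane), 12 elements.
cache = PagedInputCache(num_pages=4, page_depth=3)
for i, v in enumerate(range(100, 112)):
    cache.write(i, v)
print(cache.parallel_read(4))  # [104, 105, 106, 107]
```

Reconfiguring for a different data format would correspond, in this sketch, to constructing the cache with a different `num_pages`/`page_depth` pair so that the interleaving again matches the register array width.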
