Method for minimizing the computation offloading delay of deep neural networks for mobile users

The invention concerns deep neural networks and mobile users, and is applied in the field of minimizing the computation offloading delay of intelligent applications. It addresses the problem of an increased computation offloading failure rate and achieves the effect of delay minimization.

Embodiment Construction

[0041] The present invention will be further described below in conjunction with the accompanying drawings.

[0042] Referring to Figures 1 to 5, a method for minimizing the deep neural network computation offloading delay for mobile users comprises the following steps:

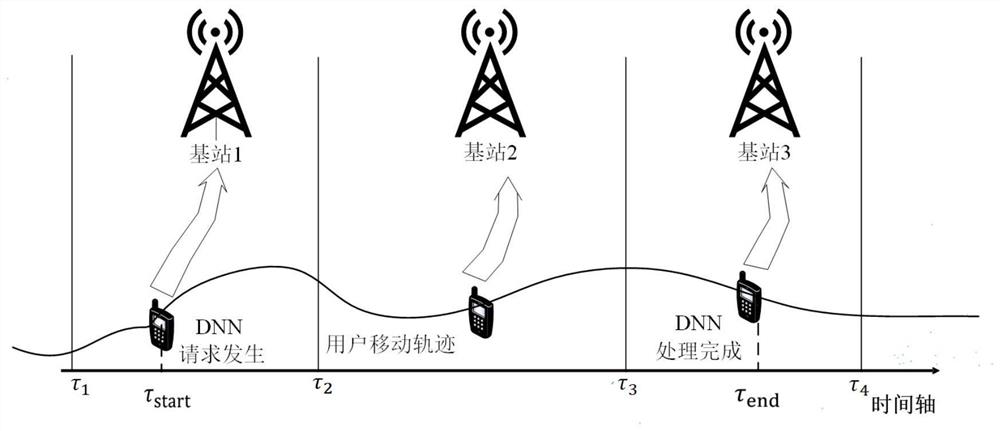

[0043] Step 1: Divide the execution time required by the deep neural network (DNN) into multiple time periods of unequal length. The division criterion is whether the base station to which the user is connected changes while the user is moving. Each base station is deployed with a cloud server. Let the moment at which the DNN request is sent be τ_start and the moment at which the task is completed be τ_end. Within this interval, the time the user stays in the communication area of each base station constitutes one time period. As shown in Figure 1, the first time period runs from τ_start to τ_2, the second time period runs from τ_2 to τ_3, and the third time period runs from τ_3 to τ_end;
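The division in Step 1 can be illustrated with a minimal Python sketch. It is not part of the patent text: the function name `split_into_periods`, the representation of base station changes as `(time, station)` pairs, and the station labels are all assumptions made for illustration. The sketch assumes the base station serving the user at τ_start is known and that each later change of base station is available with its timestamp.

```python
from typing import List, Tuple


def split_into_periods(
    tau_start: float,
    tau_end: float,
    first_station: str,
    handovers: List[Tuple[float, str]],
) -> List[Tuple[float, float, str]]:
    """Split [tau_start, tau_end] into periods, one per connected base station.

    handovers is a hypothetical list of (time, new_station) pairs describing
    when the user's serving base station changes. Returns a list of
    (period_start, period_end, station) triples.
    """
    if tau_end <= tau_start:
        raise ValueError("tau_end must be later than tau_start")

    periods = []
    current_start, current_station = tau_start, first_station
    for t, station in sorted(handovers):
        # Only changes strictly inside the execution window create a boundary.
        if not (tau_start < t < tau_end):
            continue
        # A "handover" to the same station does not start a new period.
        if station == current_station:
            continue
        periods.append((current_start, t, current_station))
        current_start, current_station = t, station

    # The last period always ends when the task completes.
    periods.append((current_start, tau_end, current_station))
    return periods


# Illustrative values only: tau_2 = 3.0 and tau_3 = 7.5 are made-up boundaries.
example = split_into_periods(0.0, 10.0, "BS1", [(3.0, "BS2"), (7.5, "BS3")])
```

With these made-up values the three resulting periods correspond to the three periods described for Figure 1, and their lengths differ because they depend only on how long the user stays within each base station's communication area.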

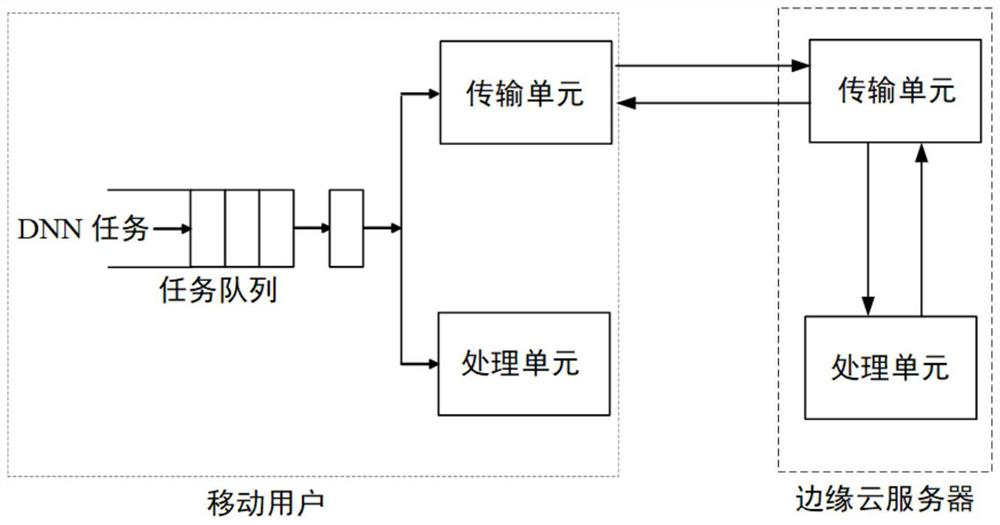

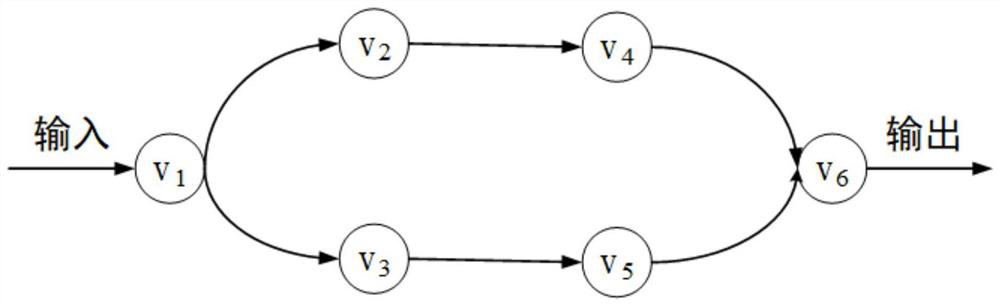

[0044] Step 2: Model the DN...
