Robot vision semantic navigation method, device and system

A robot visual semantic navigation method, together with a corresponding device, system and computer storage medium, in the field of robot vision and navigation. It can solve problems such as the inability to navigate objects.

Examples

Embodiment 1

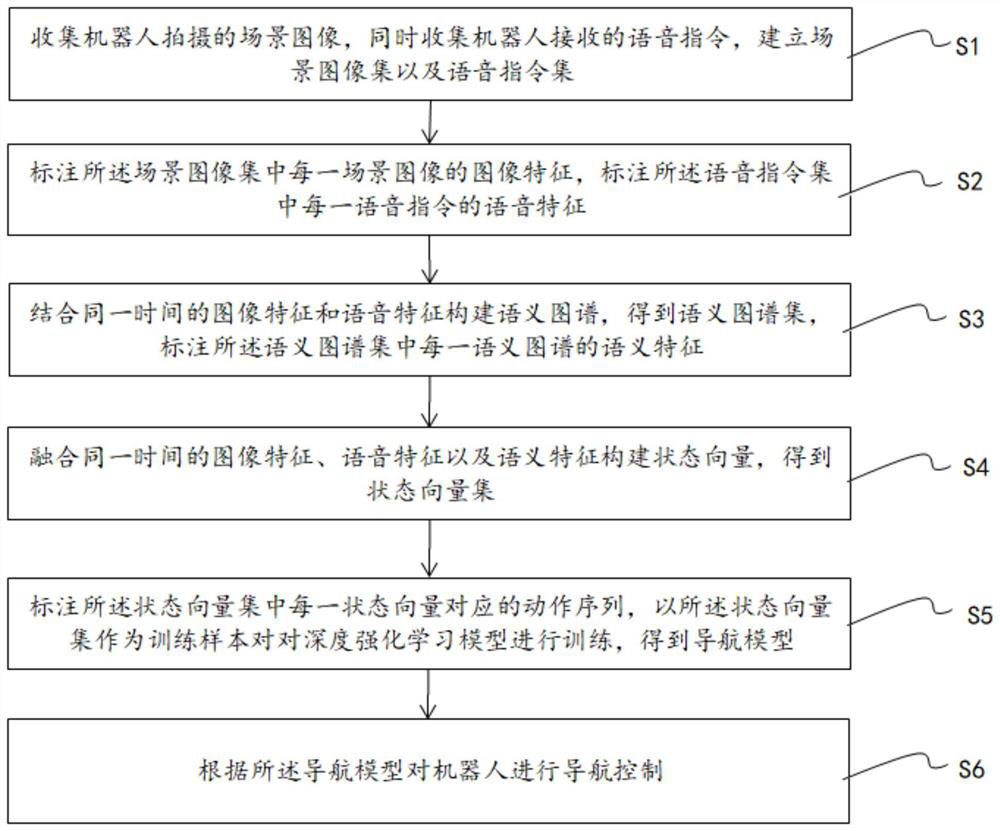

[0025] As shown in Figure 1, Embodiment 1 of the present invention provides a robot visual semantic navigation method comprising the following steps:

[0026] S1. Collect the scene images captured by the robot and, at the same time, the voice commands received by the robot, and establish a scene image set and a voice command set;

[0027] S2. Mark the image features of each scene image in the scene image set, and mark the voice features of each voice command in the voice command set;

[0028] S3. Combine the image features and voice features from the same time instant to construct a semantic map, obtaining a semantic map set, and mark the semantic features of each semantic map in the semantic map set;

[0029] S4. Fuse the image features, voice features and semantic features from the same time instant to construct a state vector, obtaining a state vector set;

[0030] S5. Mark the action sequence corresponding to each state vector in the state vector set, and use the state vector set as a...
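Steps S4 and S5 can be illustrated with a minimal sketch. The source does not say how the features are fused, so plain concatenation is an assumption here, and the names `Sample`, `build_state_vector` and `label_state_vectors` are hypothetical. The image, voice and semantic features are taken as given inputs, since their extraction is not described in this excerpt.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class Sample:
    """Features marked for one time instant (hypothetical container)."""
    timestamp: int
    image_features: Sequence[float]
    voice_features: Sequence[float]
    semantic_features: Sequence[float]


def build_state_vector(sample: Sample) -> List[float]:
    # S4 (assumed fusion): concatenate the three feature groups
    # that belong to the same time instant.
    return [
        *sample.image_features,
        *sample.voice_features,
        *sample.semantic_features,
    ]


def label_state_vectors(
    samples: Sequence[Sample],
    actions_by_time: Dict[int, List[str]],
) -> List[Tuple[List[float], List[str]]]:
    # S5: pair each state vector with its marked action sequence.
    # Samples without a marked action sequence are skipped.
    pairs = []
    for sample in samples:
        if sample.timestamp in actions_by_time:
            pairs.append(
                (build_state_vector(sample), actions_by_time[sample.timestamp])
            )
    return pairs
```

Keying both the features and the action sequences by a shared timestamp keeps the "same time instant" requirement explicit. A real system would likely use a learned fusion rather than concatenation.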

Embodiment 2

[0071] Embodiment 2 of the present invention provides a robot visual semantic navigation device, including a processor and a memory on which a computer program is stored. When the computer program is executed by the processor, the robot visual semantic navigation method provided by Embodiment 1 is realized.

[0072] The robot visual semantic navigation device provided by this embodiment of the present invention is used to implement the robot visual semantic navigation method. The device therefore has the same technical effects as the method, which are not repeated here.

Embodiment 3

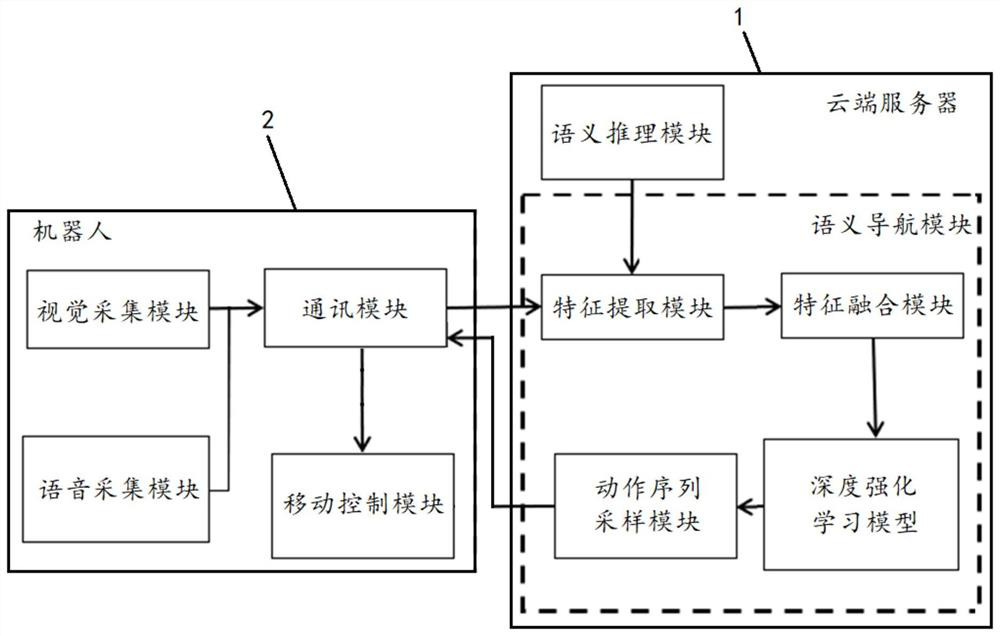

[0074] As shown in Figure 2, Embodiment 3 of the present invention provides a robot visual semantic navigation system, which includes the robot visual semantic navigation device 1 provided in Embodiment 2 and a robot 2;

[0075] The robot 2 comprises a visual collection module, a voice collection module, a communication module and a movement control module;

[0076] The visual collection module is used to collect scene images;

[0077] The voice collection module is used to collect voice commands;

[0078] The communication module is used to send the scene images and voice commands to the robot visual semantic navigation device 1, and to receive the navigation control instructions sent by the robot visual semantic navigation device 1;

[0079] The movement control module is used for performing navigation control on the robot joints according to the navigation control instructions.
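The interaction between the four modules of robot 2 and navigation device 1 can be sketched as follows. This is only an illustration under stated assumptions: the class names `Observation`, `NavigationDevice` and `Robot`, the in-process call standing in for the communication module, and the string-valued instructions are all hypothetical and not taken from the patent.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Observation:
    """One scene image plus one voice command (hypothetical message)."""
    scene_image: bytes
    voice_command: str


class NavigationDevice:
    """Stand-in for device 1: maps an observation to control instructions."""

    def __init__(self, policy: Callable[[Observation], List[str]]):
        self._policy = policy  # assumed placeholder for the navigation method

    def handle(self, observation: Observation) -> List[str]:
        return self._policy(observation)


class Robot:
    """Stand-in for robot 2 with its four modules."""

    def __init__(
        self,
        device: NavigationDevice,
        capture_image: Callable[[], bytes],
        capture_voice: Callable[[], str],
    ):
        self._device = device
        self._capture_image = capture_image  # visual collection module
        self._capture_voice = capture_voice  # voice collection module
        self.executed: List[str] = []        # log kept by movement control

    def step(self) -> List[str]:
        observation = Observation(self._capture_image(), self._capture_voice())
        # Communication module: send the observation, receive instructions.
        instructions = self._device.handle(observation)
        # Movement control module: apply each instruction in order.
        for instruction in instructions:
            self.executed.append(instruction)
        return instructions
```

A possible usage, with a toy policy in place of the real navigation method:

```python
device = NavigationDevice(
    lambda obs: ["forward"] if "kitchen" in obs.voice_command else ["stop"]
)
robot = Robot(device, lambda: b"\x00", lambda: "go to the kitchen")
robot.step()
```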

[0080] In this embodiment, the robot visual semantic navigation device 1 can be ...
