A Sound Generation Method Based on Underwater Target and Environmental Information Features

A sound generation method based on underwater target and environmental information features, applied in speech synthesis, neural learning methods, speech analysis, and related fields. It addresses the limited applicability of TTS (text-to-speech) sound generation models and their poor performance on underwater acoustic signals, thereby improving the generation effect and feature accuracy.

Examples

Specific Embodiment 1

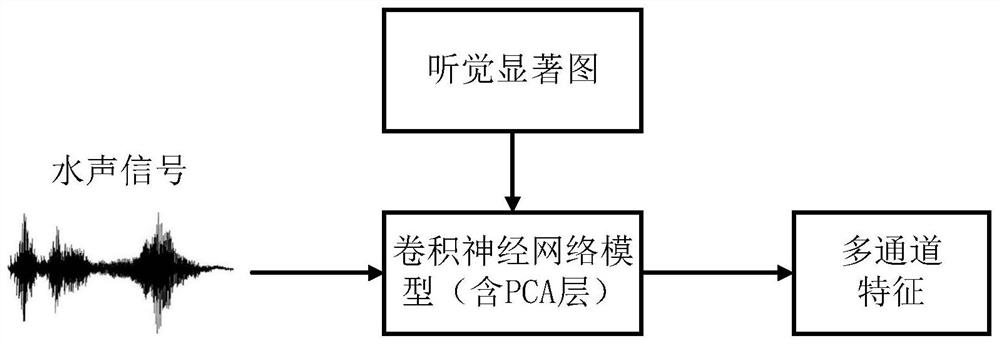

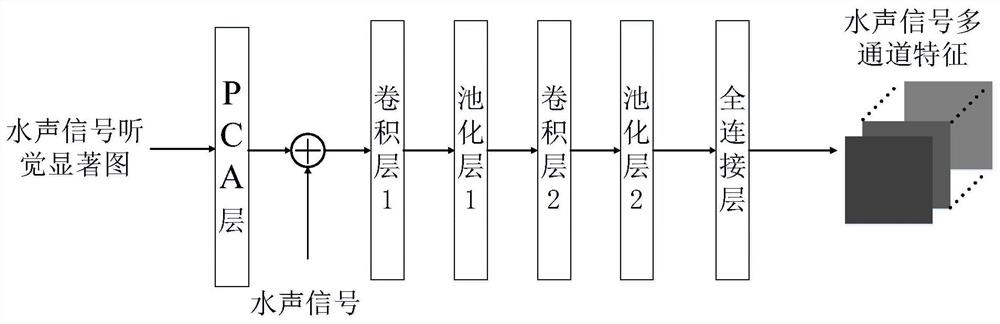

[0044] Specific Embodiment 1: The sound generation method based on underwater target and environmental information features described in this embodiment comprises the following steps:

[0045] Step 1. For the underwater target S1, collect sound signal samples of the underwater target (each sample contains both the underwater target signal and noise), process the collected samples in parallel by frequency channel, and construct an auditory saliency map based on frequency-channel processing;

[0046] Step 2. Frame the collected sound signal samples and the constructed auditory saliency map in the time domain to obtain multiple groups of sound signals and auditory saliency maps, where the sound signal and the auditory saliency map in each group have the same duration;

[0047] The sound signal samples and the auditory saliency map are divided into frames from the beginning ...
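Step 2 only requires that each group pair a segment of the sound signal with a segment of the saliency map of equal duration. A minimal Python sketch, assuming non-overlapping frames, a hypothetical frame duration `frame_dur`, and a saliency map sampled at its own rate `fs_saliency` (none of which this excerpt specifies), could look like this:

```python
import numpy as np


def frame_signal_and_saliency(signal, saliency, fs_signal, fs_saliency, frame_dur):
    """Cut a sound signal and its saliency curve into groups of equal duration.

    Assumptions (hypothetical, not from the patent): 1-D inputs sharing a start
    time, non-overlapping frames of frame_dur seconds, and a trailing partial
    frame that is discarded.
    """
    n_sig = int(round(frame_dur * fs_signal))    # signal samples per frame
    n_sal = int(round(frame_dur * fs_saliency))  # saliency-map samples per frame
    # Use the same number of frames for both so every group is complete.
    n_frames = min(len(signal) // n_sig, len(saliency) // n_sal)
    sig_frames = np.asarray(signal[: n_frames * n_sig], dtype=float).reshape(n_frames, n_sig)
    sal_frames = np.asarray(saliency[: n_frames * n_sal], dtype=float).reshape(n_frames, n_sal)
    return sig_frames, sal_frames
```

With a 16 kHz recording and a saliency curve at 100 Hz, `frame_dur=0.5` yields groups of 8000 signal samples paired with 50 saliency samples, both covering the same half second.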

Specific Embodiment 2

[0076] Specific Embodiment 2 (see Figure 1): This embodiment differs from Specific Embodiment 1 in that, in Step 1, the collected sound signal samples are processed in parallel by frequency channel and an auditory saliency map based on frequency-channel processing is constructed as follows:

[0077] The collected sound signal samples are convolved with a band-pass filter bank composed of 64 Gammatone filters to obtain the sound signal responses of 64 frequency channels;
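A 64-channel Gammatone filter bank can be sketched with NumPy alone. The ERB-spaced center frequencies, the 4th-order impulse response with bandwidth factor 1.019, the 50 Hz lower bound, the upper bound of 0.45·fs, and the 0.1 s impulse-response length are common textbook choices assumed here, not parameters taken from the patent:

```python
import numpy as np


def erb_space(f_low, f_high, n):
    """Return n center frequencies (Hz) equally spaced on the ERB-rate scale."""
    def to_erb_rate(f):
        return 21.4 * np.log10(1.0 + 0.00437 * f)

    def from_erb_rate(e):
        return (10.0 ** (e / 21.4) - 1.0) / 0.00437

    return from_erb_rate(np.linspace(to_erb_rate(f_low), to_erb_rate(f_high), n))


def gammatone_ir(fc, fs, dur=0.1, order=4, b=1.019):
    """Impulse response of one Gammatone filter centered at fc (Hz), sampled at fs (Hz)."""
    t = np.arange(int(dur * fs)) / fs
    erb = 24.7 * (0.00437 * fc + 1.0)  # equivalent rectangular bandwidth in Hz
    g = t ** (order - 1) * np.exp(-2 * np.pi * b * erb * t) * np.cos(2 * np.pi * fc * t)
    # Scale so that the filter's gain at its own center frequency is 1.
    gain = np.abs(np.sum(g * np.exp(-2j * np.pi * fc * t)))
    return g / gain


def gammatone_filterbank(x, fs, n_channels=64, f_low=50.0, f_high=None):
    """Filter x with n_channels Gammatone filters; returns (responses, center_freqs)."""
    if f_high is None:
        f_high = 0.45 * fs  # stay safely below the Nyquist frequency (assumption)
    fcs = erb_space(f_low, f_high, n_channels)
    x = np.asarray(x, dtype=float)
    # Causal filtering: keep the first len(x) samples of each full convolution.
    responses = np.stack(
        [np.convolve(x, gammatone_ir(fc, fs), mode="full")[: len(x)] for fc in fcs]
    )
    return responses, fcs
```

`gammatone_filterbank(x, fs)` returns a `(64, len(x))` array of channel responses together with the center frequencies, so a pure tone concentrates its energy in the channel whose center frequency lies closest to the tone.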

[0078] The sound signal response of each frequency channel is then convolved in any direction with 8 one-dimensional Gaussian smoothing filters, and the convolution results are down-sampled to obtain representations $F_i$, $i = 1, 2, \ldots, 8$, of each channel's sound signal response at 8 scales. $F_i$ is then used to compute the auditory saliency of each frequency channel's sound signal response ...
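This excerpt does not spell out how the 8 Gaussian filters and the down-sampling are arranged. One plausible reading, sketched below purely as an assumption, is a dyadic Gaussian pyramid along the time axis, in which $F_i$ is obtained by smoothing $F_{i-1}$ with a 1-D Gaussian (hypothetical `sigma`, in samples) and keeping every second sample:

```python
import numpy as np


def gaussian_kernel_1d(sigma):
    """Normalized 1-D Gaussian kernel truncated at +/- 3 sigma (sigma in samples)."""
    radius = max(1, int(np.ceil(3 * sigma)))
    t = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (t / sigma) ** 2)
    return k / k.sum()


def multiscale_representation(response, n_scales=8, sigma=1.0):
    """Return [F_1, ..., F_n]: each level smooths the previous one and keeps every 2nd sample.

    `response` may be 1-D (one channel) or 2-D (channels x time); smoothing is
    applied along the last (time) axis. The dyadic layout is an assumption.
    """
    kernel = gaussian_kernel_1d(sigma)
    current = np.asarray(response, dtype=float)
    scales = []
    for _ in range(n_scales):
        if current.shape[-1] < kernel.size:
            raise ValueError("signal too short for the requested number of scales")
        smoothed = np.apply_along_axis(np.convolve, -1, current, kernel, mode="same")
        current = smoothed[..., ::2]  # down-sample by 2
        scales.append(current)
    return scales
```

Applied to the `(64, T)` array of channel responses, this yields eight arrays whose time axis halves at each scale, from which per-scale saliency can then be computed.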

Specific Embodiment 3

[0088] Specific Embodiment 3: This embodiment differs from Specific Embodiment 2 in that the normalized auditory saliency of each frequency channel's sound signal response at the different scales is integrated across scales to obtain an auditory saliency map based on frequency-channel processing. The specific process is as follows:

[0089]

[0090] In the formula, $\mathrm{Map}_j'$ represents the auditory saliency of the $j$-th frequency channel's sound signal response after cross-scale integration; $\mathrm{Map}_j$ represents the normalized auditory saliency of the $j$-th frequency channel's sound signal response at the different scales; DoG represents a one-dimensional difference-of-Gaussians filter with a length of 50 ms; $\otimes$ represents convolution; $M_{\mathrm{nor},j}$ represents the auditory saliency of the $j$-th frequency channel's sound signal response at the different scales before normalization; $j = 1, 2, \ldots, 64$.
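Because the formula in [0089] is not reproduced here, the sketch below only illustrates the ingredients named in [0090]. It builds a 1-D DoG kernel spanning 50 ms and uses it in the iterative within-feature normalization of the Itti-Koch saliency model, then adds the scales together after resampling them to a common length. This is a swapped-in technique, not the patent's formula; the Gaussian widths, the constants `c_ex`, `c_inh`, `c_inh_const`, the iteration count, and the per-scale map rate are all assumptions:

```python
import numpy as np


def _centered_conv(x, k):
    """Zero-padded convolution returning len(x) samples, centered on an odd-length k."""
    full = np.convolve(x, k, mode="full")
    start = k.size // 2
    return full[start : start + x.size]


def dog_kernel_1d(fs_map, length_s=0.05, sigma_ex=0.004, sigma_inh=0.0125, c_ex=0.5, c_inh=1.5):
    """1-D difference-of-Gaussians kernel spanning length_s seconds at map rate fs_map (Hz).

    All widths and gains are hypothetical, Itti-Koch-style values.
    """
    n = max(3, int(round(length_s * fs_map)) | 1)  # odd number of taps
    t = (np.arange(n) - n // 2) / fs_map           # tap offsets in seconds

    def gauss(sigma):
        g = np.exp(-0.5 * (t / sigma) ** 2)
        return g / g.sum()                          # unit-sum lobe

    return c_ex ** 2 * gauss(sigma_ex) - c_inh ** 2 * gauss(sigma_inh)


def iterative_normalize(m, kernel, n_iter=5, c_inh_const=0.02):
    """Itti-Koch-style normalization: M <- max(M + M conv DoG - C_inh, 0), repeated n_iter times."""
    m = np.asarray(m, dtype=float)
    span = m.max() - m.min()
    m = (m - m.min()) / span if span > 0 else np.zeros_like(m)  # rescale to [0, 1]
    for _ in range(n_iter):
        m = np.maximum(m + _centered_conv(m, kernel) - c_inh_const, 0.0)
    return m


def cross_scale_sum(maps, out_len):
    """Resample every scale's normalized map to out_len samples and add them point by point."""
    grid = np.linspace(0.0, 1.0, out_len)
    return sum(np.interp(grid, np.linspace(0.0, 1.0, len(m)), m) for m in maps)
```

Since the 50 ms DoG length is fixed in time, the sketch rebuilds the kernel for each scale from that scale's own sampling rate (for example `dog_kernel_1d(fs_map / 2 ** i)` for a dyadic pyramid), so coarse scales get kernels with only a few taps.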

[0091] Linear integration is then performed on $\mathrm{Map}_j'$ to obtain the auditory saliency map A...
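The weights of the linear integration are not given in this excerpt. A minimal sketch, assuming that $A$ is a single time curve formed as a weighted sum of the 64 per-channel curves $\mathrm{Map}_j'$, with equal weights by default (a hypothetical choice), is:

```python
import numpy as np


def integrate_channels(channel_maps, weights=None):
    """Linearly combine per-channel saliency curves Map_j' (shape (64, T)) into A (shape (T,)).

    Equal weights are an assumption; pass `weights` to use any other linear combination.
    """
    maps = np.asarray(channel_maps, dtype=float)
    if weights is None:
        weights = np.full(maps.shape[0], 1.0 / maps.shape[0])  # equal weights
    weights = np.asarray(weights, dtype=float)
    if weights.shape != (maps.shape[0],):
        raise ValueError("need exactly one weight per frequency channel")
    return weights @ maps  # weighted sum over the channel axis
```

Keeping the weights as a parameter makes it easy to swap in a frequency-dependent weighting if the full specification calls for one.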
