Network model capable of jointly realizing semantic segmentation and depth estimation, and training method

A technology relating to semantic segmentation and network models, applied in fields such as biological neural network models, character and pattern recognition, and computing. It addresses the problems that a single-task model cannot perform semantic segmentation and depth estimation simultaneously, gives unsatisfactory results, and requires a large amount of computation.


Embodiment Construction

[0061] The present invention is further described below in conjunction with the accompanying drawings and embodiments:

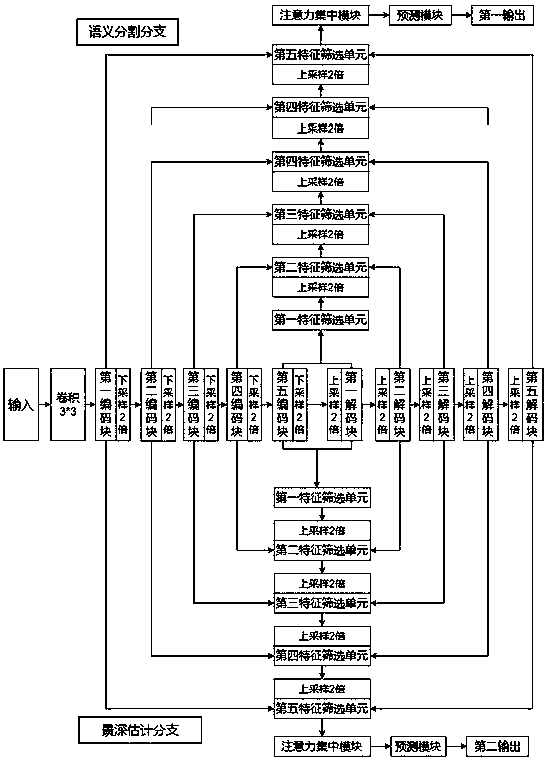

[0062] In one aspect, this application provides a network model that can jointly implement semantic segmentation and depth estimation. As shown in Figure 1, before an image is fed into the model, preliminary features are extracted from it by a 3*3 standard convolution to obtain the input image. The network model in this embodiment includes:

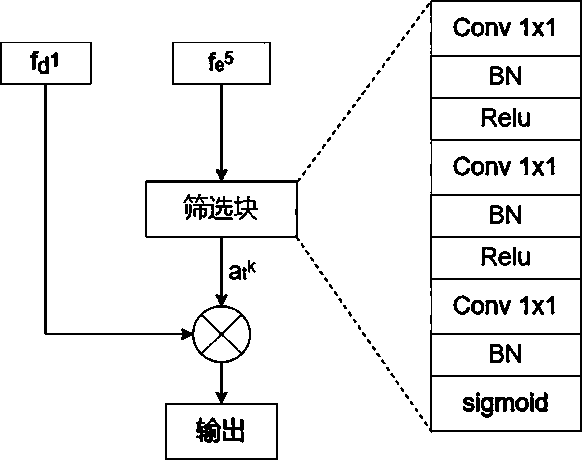

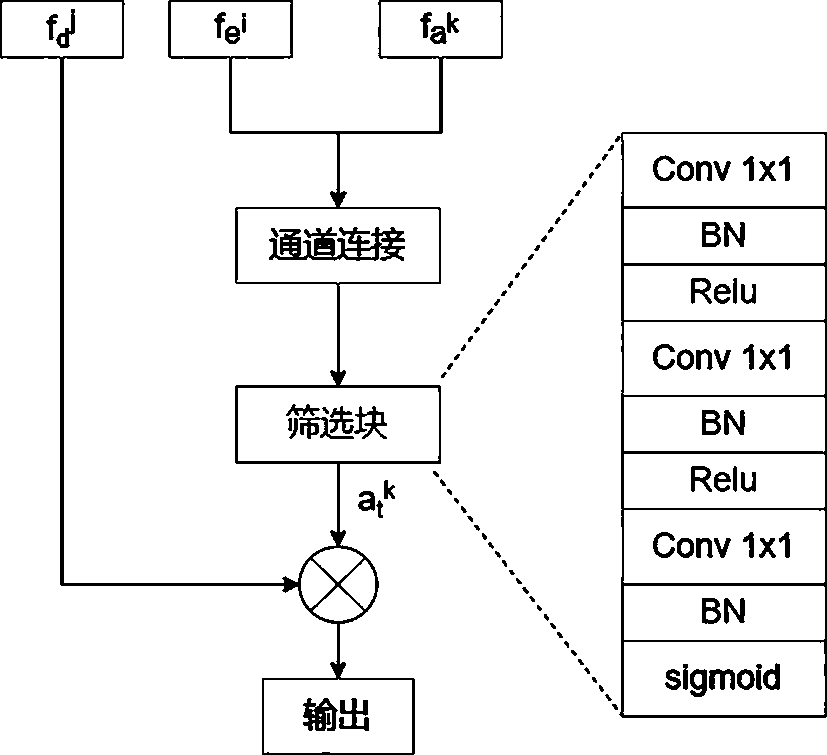

[0063] The feature sharing module is configured to perform feature extraction on the input image through a convolutional neural network to obtain shared features. Specifically, the feature sharing module adopts an encoder-decoder structure comprising an encoding unit and a decoding unit, with the output of the encoding unit serving as the input of the decoding unit. The encoding unit performs feature encoding and down-sampling on the input image, and the decoding unit performs upsampling a...
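The data flow described in [0062] and [0063] can be illustrated with a minimal pure-Python sketch. It is not the patented implementation: the excerpt is truncated before the layers are specified, so the 2x2 average pooling, the nearest-neighbour up-sampling, and the two task heads (`segmentation_head`, `depth_head`) are hypothetical stand-ins chosen only to show how one set of shared features can feed both tasks. All function names are assumptions introduced for this example.

```python
from typing import List

Grid = List[List[float]]


def conv3x3(image: Grid, kernel: Grid) -> Grid:
    """3*3 convolution, stride 1, zero padding (cross-correlation form,
    as is conventional in deep learning). Output has the input's size."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += image[yy][xx] * kernel[dy + 1][dx + 1]
            out[y][x] = acc
    return out


def encode(features: Grid) -> Grid:
    """Encoding-unit stand-in: halve the resolution with 2x2 average
    pooling (an assumption; the source only says 'down-sampling')."""
    h, w = len(features) // 2, len(features[0]) // 2
    return [
        [
            (features[2 * y][2 * x] + features[2 * y][2 * x + 1]
             + features[2 * y + 1][2 * x] + features[2 * y + 1][2 * x + 1]) / 4.0
            for x in range(w)
        ]
        for y in range(h)
    ]


def decode(features: Grid) -> Grid:
    """Decoding-unit stand-in: double the resolution by repeating each
    value (nearest-neighbour up-sampling, also an assumption)."""
    out: Grid = []
    for row in features:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def shared_features(raw: Grid, kernel: Grid) -> Grid:
    """3*3 stem convolution, then encoder output fed into the decoder."""
    return decode(encode(conv3x3(raw, kernel)))


def segmentation_head(shared: Grid, threshold: float) -> List[List[int]]:
    """Hypothetical per-pixel two-class labelling from shared features."""
    return [[1 if v > threshold else 0 for v in row] for row in shared]


def depth_head(shared: Grid, scale: float) -> Grid:
    """Hypothetical per-pixel depth regression from the same features."""
    return [[scale * v for v in row] for row in shared]


# Toy 4x4 input; the identity kernel leaves the image unchanged.
raw = [[1.0, 1.0, 5.0, 5.0],
       [1.0, 1.0, 5.0, 5.0],
       [2.0, 2.0, 8.0, 8.0],
       [2.0, 2.0, 8.0, 8.0]]
identity = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]

shared = shared_features(raw, identity)
seg = segmentation_head(shared, threshold=3.0)
depth = depth_head(shared, scale=0.5)
```

Both heads read the same `shared` grid, so the convolutional feature extraction runs once rather than once per task. That is the computational saving a joint model has over two separate single-task models.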

