Multi-modal feature fusion text-guided image restoration method

A feature-fusion and image-guidance technology, applied in neural learning methods, character and pattern recognition, biological neural network models, etc.

Embodiment Construction

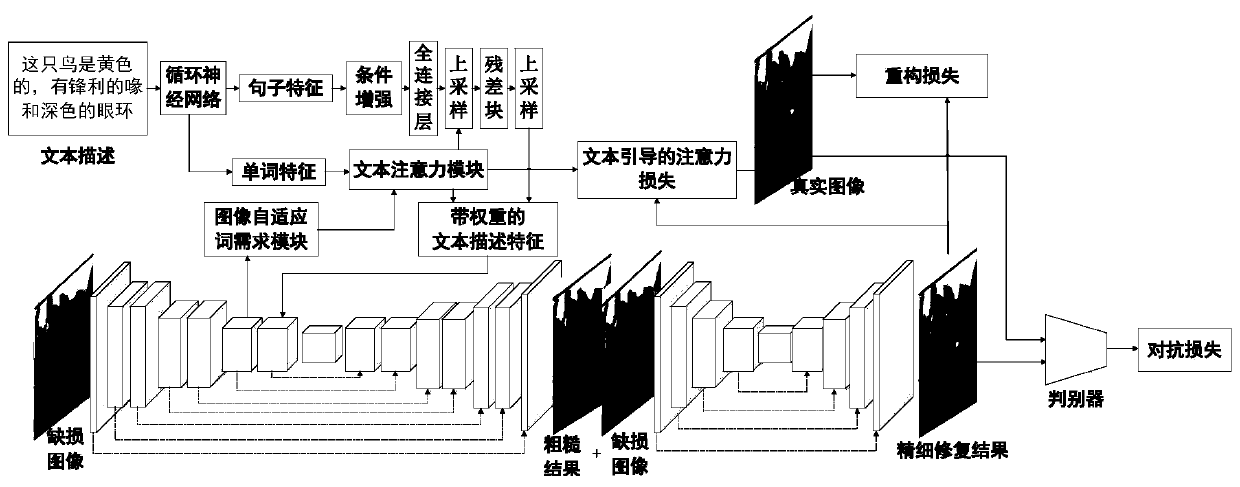

[0045] For an image in which an object is missing from the central area, mark the missing area as the area to be repaired; the network shown in Figure 1 can then be used to perform image inpainting.

[0046] The specific process is as follows.

[0047] (1) Mark the defect area in the image to be repaired

[0048] Consider an image with serious loss of object information, such as the bird image in Figure 1, which is missing its central region. First construct an all-zero matrix M with the same size as the input image X, then set the entries of M corresponding to the pixel positions of the area to be repaired to 1. That is, the gray area in the center of the defect image in Figure 1 is 1, and all other positions are 0.
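The mask construction in step (1) can be sketched in a few lines of NumPy. This is a minimal illustration, not the patent's implementation: the function names `build_center_mask` and `apply_mask`, the 256×256 image size, and the 128×128 hole size are all assumptions chosen for the example, and blanking the masked pixels to zero is only one possible way to form the defect image.

```python
import numpy as np


def build_center_mask(height, width, hole_height, hole_width):
    """Return an (height, width) mask M: 1 inside the central area to repair, 0 elsewhere."""
    if hole_height > height or hole_width > width:
        raise ValueError("the area to be repaired must fit inside the image")
    mask = np.zeros((height, width), dtype=np.uint8)  # all-zero matrix M
    top = (height - hole_height) // 2
    left = (width - hole_width) // 2
    mask[top:top + hole_height, left:left + hole_width] = 1  # area to be repaired
    return mask


def apply_mask(image, mask):
    """Blank out the masked pixels of an (H, W, C) image (assumed convention)."""
    return image * (1 - mask)[..., None]


# Hypothetical input: a random 256x256 RGB image standing in for X.
X = np.random.default_rng(0).integers(0, 256, size=(256, 256, 3), dtype=np.uint8)
M = build_center_mask(256, 256, 128, 128)
X_defect = apply_mask(X, M)
```

Here `M` has the same height and width as `X`, so it can be passed to the network alongside the defect image as an extra channel or used to restrict a loss to the repaired area; which of these the patented network does is not stated in this excerpt.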

[0049] (2) Extract text features from the text description T corresponding to the image

[0050] The text description T is fed into a pre-trained recurrent neural network to obtain preliminary sentence features and word embedding features. Sentence features go through a conditi...
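The first sentence of step (2) can be illustrated with a toy recurrent encoder. This is a sketch under stated assumptions only: the excerpt does not name the recurrent architecture, so a plain tanh RNN with random weights stands in for the pre-trained network, the per-step hidden states are taken as the word features, and the final hidden state is taken as the preliminary sentence feature. The function name `encode_text`, the token ids, and all dimensions are hypothetical.

```python
import numpy as np


def encode_text(token_ids, embedding, w_in, w_rec, bias):
    """Run a plain tanh RNN over the tokens.

    Returns (sentence_feature, word_features), where word_features holds one
    hidden state per token and sentence_feature is the last hidden state.
    """
    if len(token_ids) == 0:
        raise ValueError("the text description must contain at least one token")
    hidden = np.zeros(w_rec.shape[0])
    states = []
    for token in token_ids:
        # Standard recurrent update: combine the current word vector with the previous state.
        hidden = np.tanh(embedding[token] @ w_in + hidden @ w_rec + bias)
        states.append(hidden)
    word_features = np.stack(states)      # shape: (num_tokens, hidden_size)
    sentence_feature = word_features[-1]  # shape: (hidden_size,)
    return sentence_feature, word_features


# Hypothetical sizes and random weights in place of a pre-trained encoder.
rng = np.random.default_rng(0)
vocab_size, embed_size, hidden_size = 10, 8, 16
embedding = rng.normal(size=(vocab_size, embed_size))
w_in = rng.normal(size=(embed_size, hidden_size)) * 0.1
w_rec = rng.normal(size=(hidden_size, hidden_size)) * 0.1
bias = np.zeros(hidden_size)

T = [3, 1, 4, 1, 5]  # token ids standing in for the text description T
sentence_feature, word_features = encode_text(T, embedding, w_in, w_rec, bias)
```

In practice the weights would be loaded from the pre-trained text encoder rather than sampled, and the further processing of the sentence feature described in the truncated sentence above is not covered by this sketch.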
