Neural network model compression method, system, device, and medium

A neural network model compression method, applied in the field of model compression based on meta-learning and soft pruning, that addresses the problem that a compression strategy selected in advance cannot be adjusted as the situation changes.

Embodiment Construction

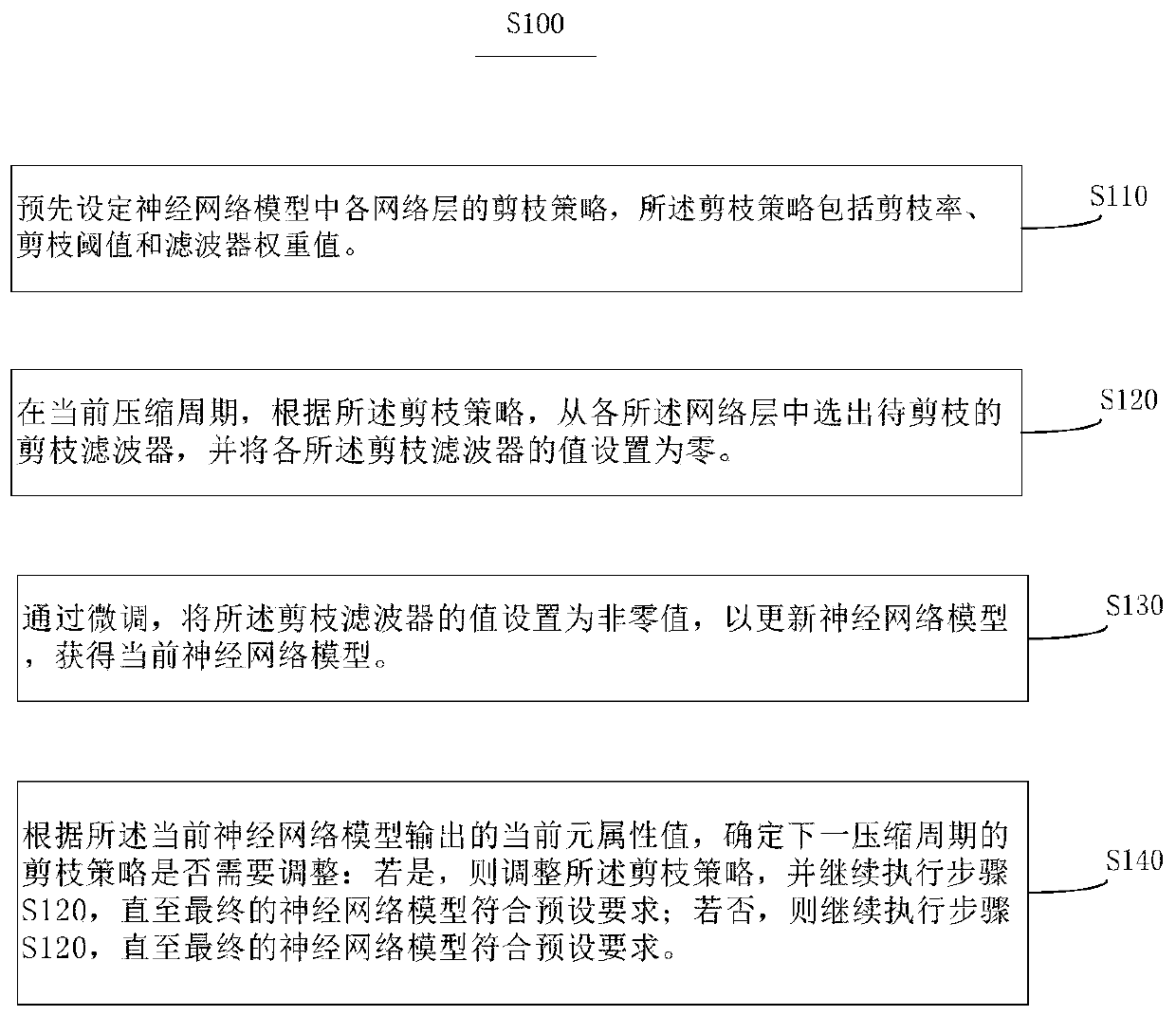

[0053] In order to enable those skilled in the art to better understand the technical solutions of the present invention, the present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

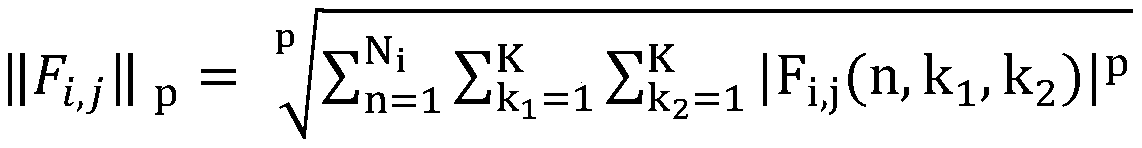

[0054] Filter pruning requires a compression strategy to be selected in advance based on experience, such as a strategy based on weight magnitude or a strategy based on the similarity between filters. Once selected, the strategy remains fixed throughout the compression and tuning process and cannot be adjusted as conditions change, for example when the probability distribution of the filter parameters changes or when the deep learning model architecture changes. The present invention provides a neural network model compression method based on meta-learning, which proposes the concept and scheme of meta-learning pruning on the basis of soft filter pruning, so as to ...
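To make the two hand-picked strategies named above concrete, the following is a minimal sketch of conventional soft filter pruning with a fixed, pre-selected criterion. It is not the method of the invention: the function names, the use of the L2 norm as the weight-magnitude score, and the use of summed Euclidean distance as the similarity score are all assumptions made for illustration, since the text does not specify the scoring formulas.

```python
import math

def l2_norm_scores(filters):
    """Assumed weight-magnitude criterion: score each filter by its L2 norm."""
    return [math.sqrt(sum(w * w for w in f)) for f in filters]

def similarity_scores(filters):
    """Assumed similarity criterion: total Euclidean distance to all filters.
    A low score means the filter lies close to the others (more redundant)."""
    return [sum(math.dist(f, g) for g in filters) for f in filters]

def soft_prune(filters, score_fn, prune_ratio):
    """Zero the lowest-scoring filters instead of removing them.

    The zeroed filters stay in the layer, so later training steps can still
    update them. Returns (new_filters, sorted list of pruned indices).
    """
    n_prune = int(len(filters) * prune_ratio)
    scores = score_fn(filters)
    order = sorted(range(len(filters)), key=lambda i: scores[i])
    pruned = set(order[:n_prune])
    new_filters = [
        [0.0] * len(f) if i in pruned else list(f)
        for i, f in enumerate(filters)
    ]
    return new_filters, sorted(pruned)

# Hypothetical layer with four flattened 2-weight filters.
filters = [[0.1, 0.0], [3.0, 4.0], [1.0, 1.0], [1.1, 0.9]]

# The criterion is chosen once, up front, and passed in as score_fn.
by_norm, pruned_by_norm = soft_prune(filters, l2_norm_scores, 0.25)
by_sim, pruned_by_sim = soft_prune(filters, similarity_scores, 0.25)
print(pruned_by_norm, pruned_by_sim)
```

On this toy layer the two criteria zero different filters: the norm criterion picks the smallest-magnitude filter, while the similarity criterion picks one of the two near-duplicate filters. The `score_fn` argument marks the point where a conventional pipeline fixes its strategy in advance.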
