Motor imagery classification method based on convolutional neural network

A motor imagery classification method based on a convolutional neural network, applied in the information field. It addresses the problem that the features of EEG signals cannot be fully extracted, which degrades classification results. The method improves recognition accuracy and is portable.

Embodiment Construction

[0037] Embodiments of the present invention are described in detail below in conjunction with the accompanying drawings:

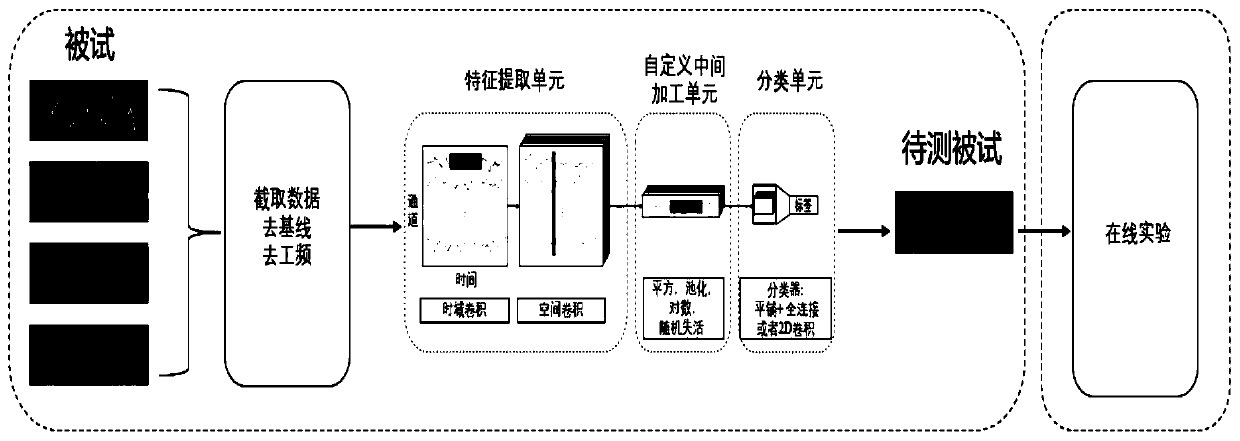

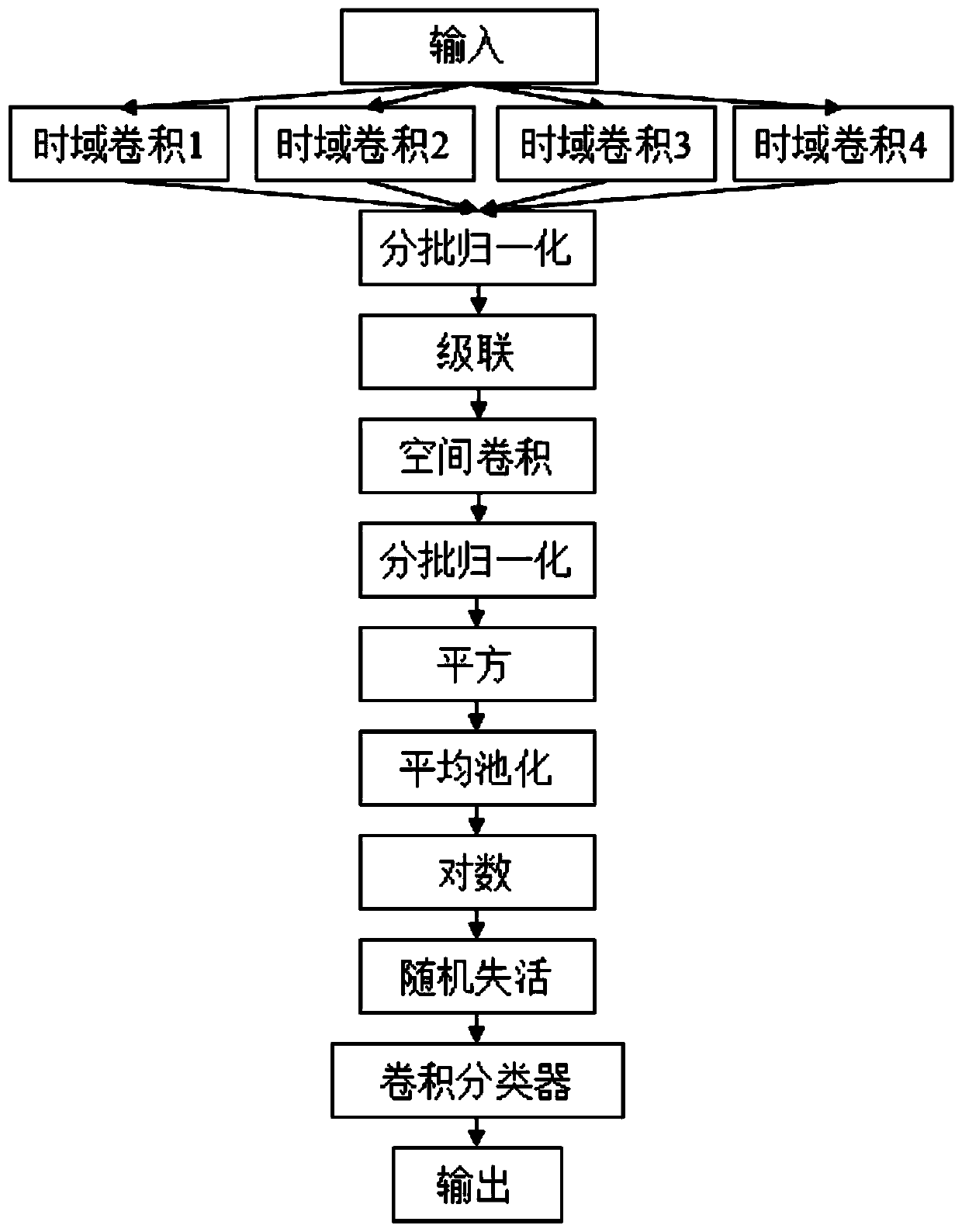

[0038] Referring to Figure 1, this embodiment is divided into two parts: the first part generates the final convolutional neural network, and the second part uses that network for online experiments. The specific implementation steps are as follows:

[0039] 1. Generate the final convolutional neural network

[0040] Step 1: collect motor imagery EEG data.

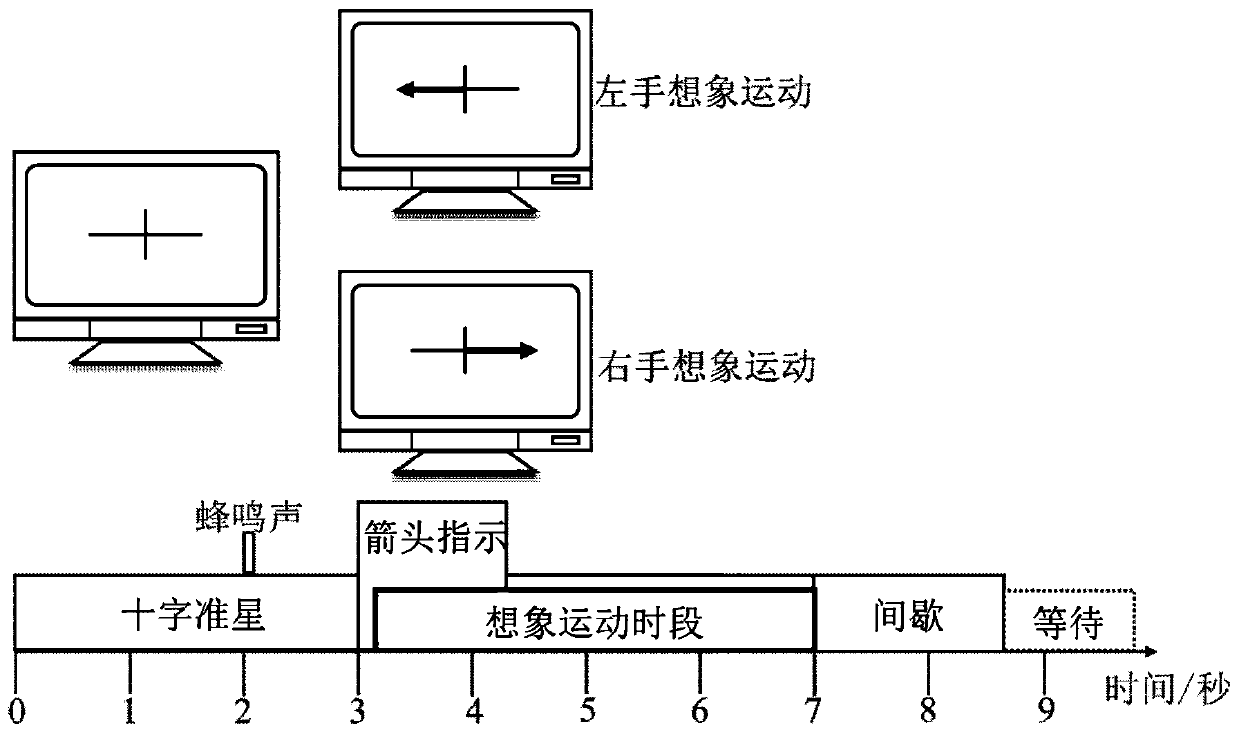

[0041] Referring to Figure 2, this step is implemented as follows:

[0042] (1a) Experimental paradigm:

[0043] The subjects performed the experiment according to the motor imagery experimental paradigm. Each trial passed through four states in chronological order: the preparation state, the motor imagery state, the intermittent (rest) state, and the waiting state, where:

[0044] In the preparation state, a crosshair first appears on the screen to...
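The four-state trial sequence described in [0043] can be sketched as a simple schedule generator. This is only an illustrative sketch: the names `TrialState` and `trial_schedule` are hypothetical, and the state durations are not given in the text above, so they are left as caller-supplied parameters rather than assumed.

```python
from enum import Enum
from typing import Dict, Iterator, Tuple


class TrialState(Enum):
    """The four states of one trial, in the order listed in [0043]."""
    PREPARATION = "preparation"
    MOTOR_IMAGERY = "motor imagery"
    REST = "intermittent (rest)"
    WAITING = "waiting"


def trial_schedule(
    durations: Dict[TrialState, float],
) -> Iterator[Tuple[TrialState, float, float]]:
    """Yield (state, start_s, end_s) for one trial.

    Durations (in seconds) are supplied by the caller; the source text
    shown here does not specify them.
    """
    t = 0.0
    for state in TrialState:  # Enum iteration follows definition order
        d = durations[state]
        yield state, t, t + d
        t += d
```

Calling `list(trial_schedule(durations))` with a dictionary that maps each `TrialState` to a duration returns the time window of each state, which could then be used to cut the motor imagery segment out of a continuous EEG recording.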
