Monocular image-oriented three-dimensional object detection method based on three-dimensional reconstruction

A monocular three-dimensional object detection technique based on three-dimensional reconstruction, applied in the fields of image processing and computer vision. It improves detection performance, scales well, and accomplishes three-dimensional detection tasks.


Detailed Description of the Embodiments

[0050] Specific embodiments of the present invention are further described below with reference to the accompanying drawings and technical solutions.

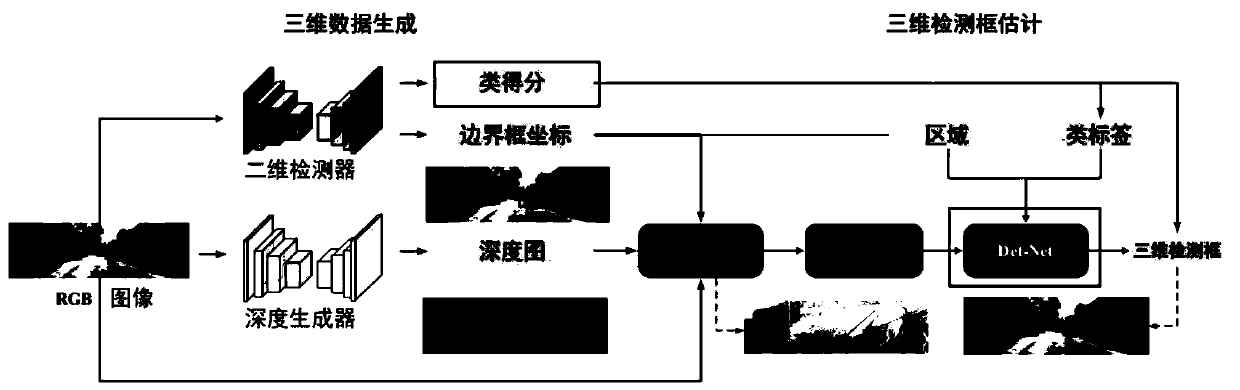

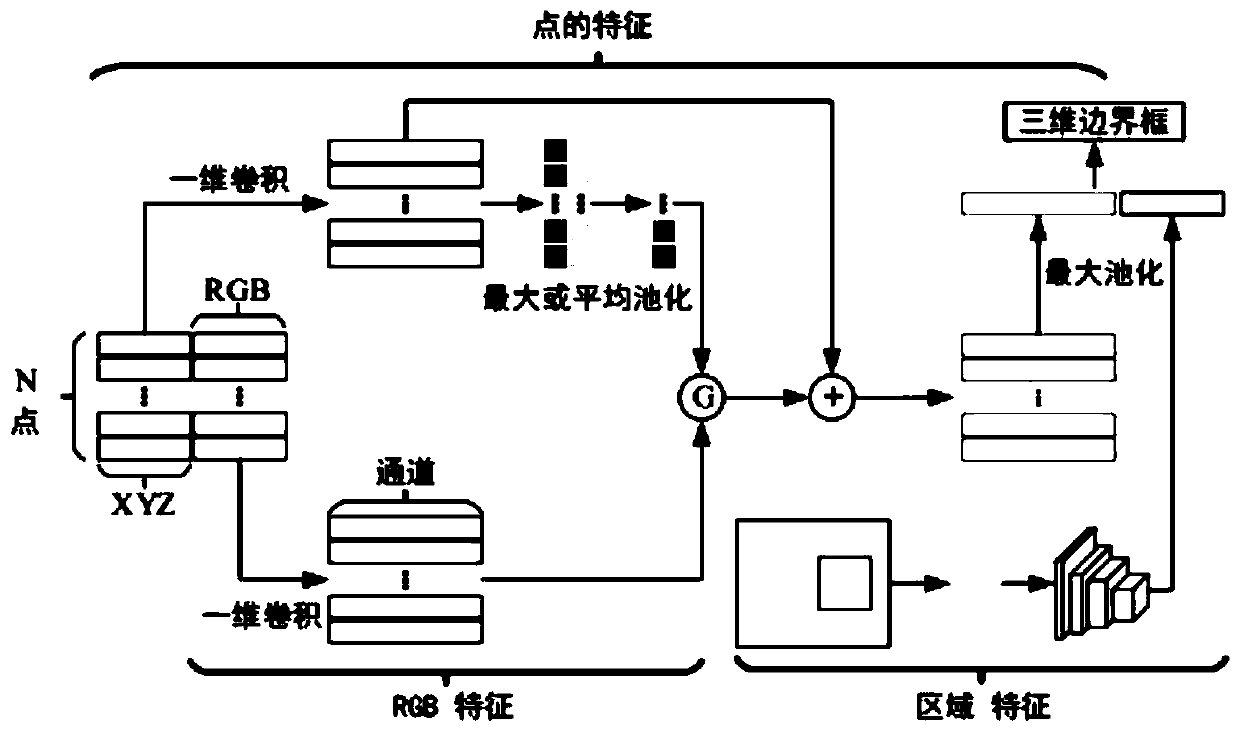

[0051] The present invention takes images acquired by a monocular camera as its input data. On this basis, it uses a two-dimensional detector together with a sparse depth map inferred by the CNN depth prediction and feature method to recover depth information and build three-dimensional point cloud data. The overall implementation process is shown in Figure 1, and the method includes the following steps:

[0052] 1) First, two CNNs are applied to the RGB image to obtain the approximate position and the depth information of the object.

[0053] 1-1) Two-dimensional detector: a CNN-based two-dimensional detector detects and identifies the objects in the RGB image, and outputs the class score (Class Score) of each detected object category and the coordinates of the two-dim...
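The idea of combining a two-dimensional detection with a sparse depth map to build point cloud data can be illustrated with a minimal sketch. It is not the patented implementation: it assumes a standard pinhole camera model, which the text above does not state, and the function name `backproject_box`, the intrinsics `fx`, `fy`, `cx`, `cy`, the box layout `(x_min, y_min, x_max, y_max)`, and the convention that a depth of 0 marks a missing value are all hypothetical choices made for illustration.

```python
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]
Box2D = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), max exclusive


def backproject_box(
    depth: Sequence[Sequence[float]],
    box: Box2D,
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point3D]:
    """Back-project the pixels inside a 2D box into camera-frame 3D points.

    Assumes a pinhole camera (an assumption of this sketch, not taken from
    the source) and treats depth values <= 0 as missing, which is how the
    sparsity of the depth map is modelled here.
    """
    x_min, y_min, x_max, y_max = box
    points: List[Point3D] = []
    for v in range(y_min, y_max):          # image rows
        for u in range(x_min, x_max):      # image columns
            z = depth[v][u]
            if z <= 0.0:                   # no depth estimate at this pixel
                continue
            x = (u - cx) * z / fx          # pinhole model: X = (u - cx) * Z / fx
            y = (v - cy) * z / fy          # pinhole model: Y = (v - cy) * Z / fy
            points.append((x, y, z))
    return points


# Toy 4x4 depth map with one missing value inside the detected box.
depth_map = [[2.0] * 4 for _ in range(4)]
depth_map[2][2] = 0.0
cloud = backproject_box(depth_map, (1, 1, 3, 3), fx=2.0, fy=2.0, cx=1.0, cy=1.0)
```

Restricting the back-projection to the detected box keeps the point cloud limited to pixels that plausibly belong to the object, which is one simple way to use the two network outputs together.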
