Automatic pathological image labeling method based on reinforcement learning and deep neural network

A method applying deep neural network and pathological image technology to the automatic labeling of pathological images based on reinforcement learning and deep neural networks. It addresses the problems of manual labeling, which is time-consuming and laborious and prone to subjectivity and fatigue-induced errors. Its effect is to improve labeling accuracy and to reduce the cumbersome, time-consuming labeling workload.


Detailed Description of the Embodiments

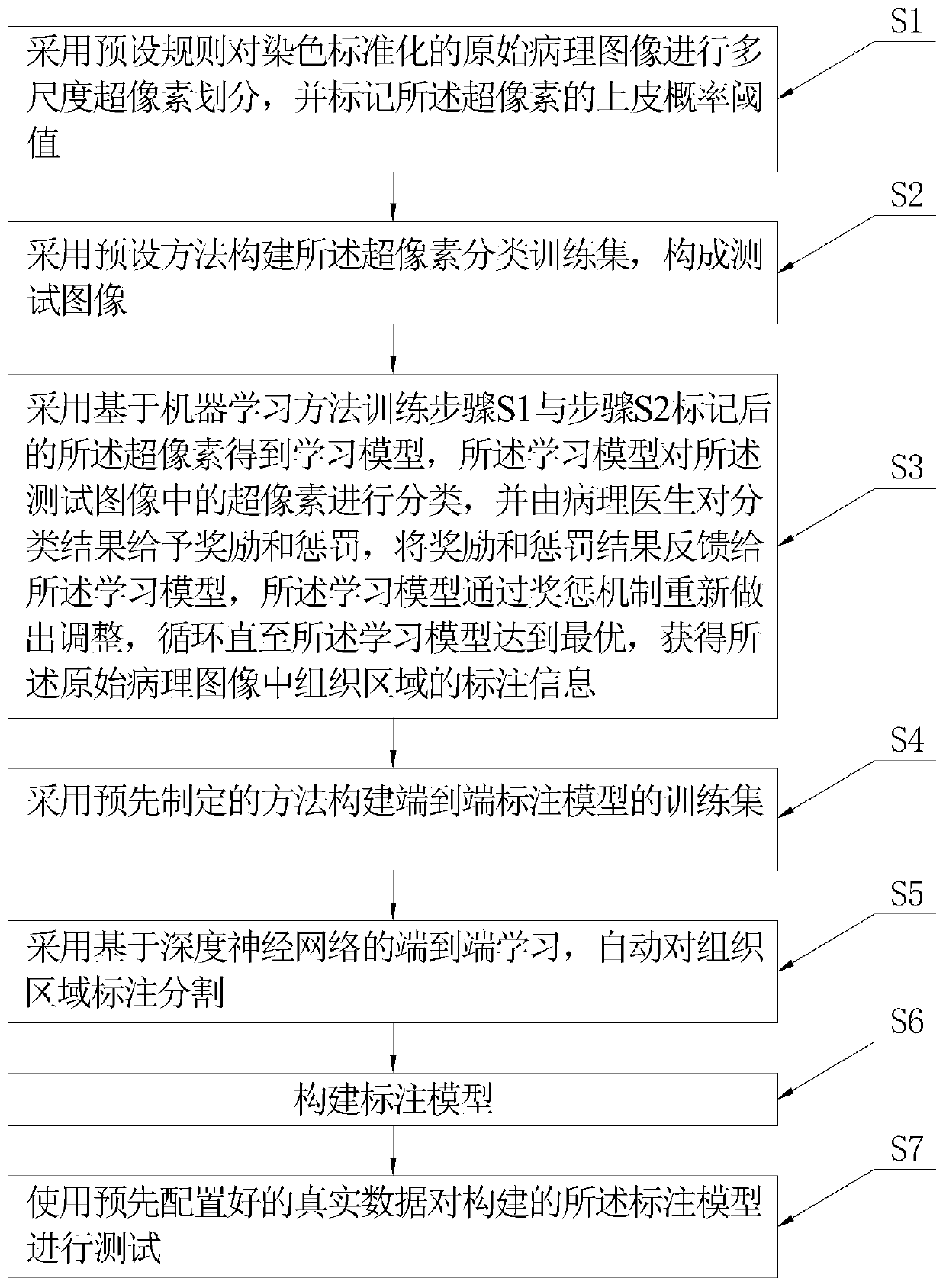

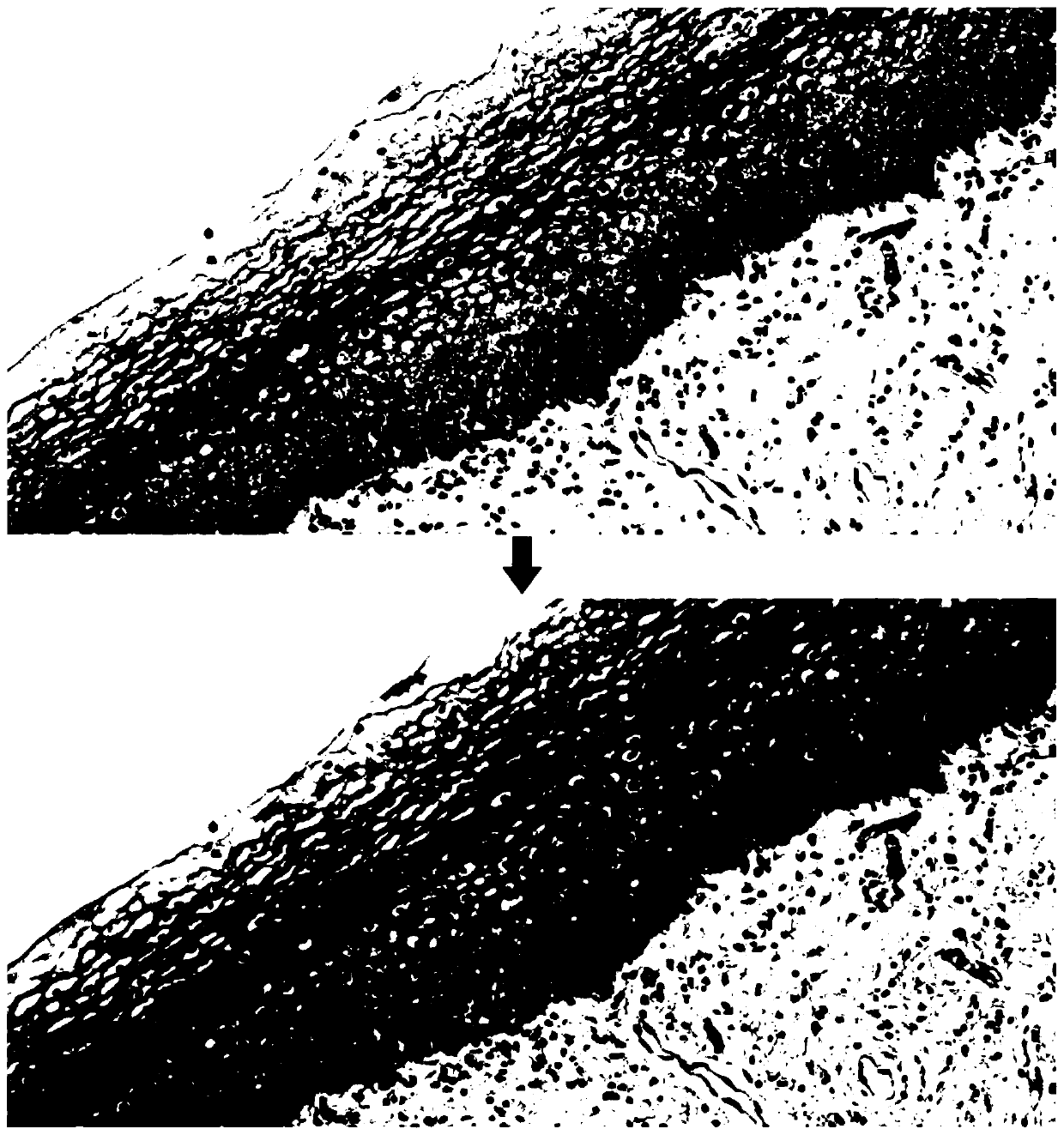

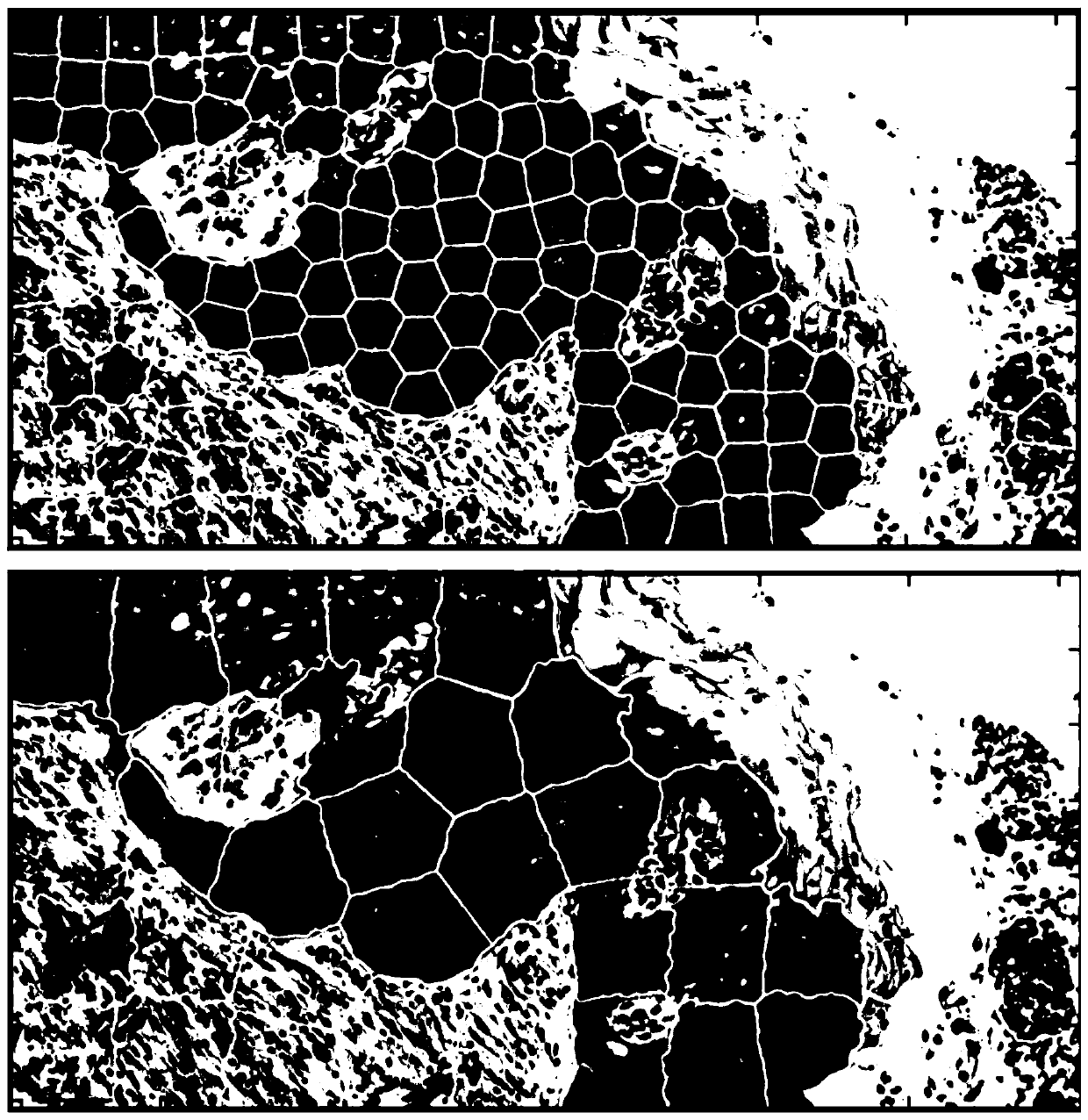

[0080] To further illustrate the various embodiments, the present invention provides accompanying drawings, which form part of its disclosure. The drawings mainly serve to illustrate the embodiments. Together with the relevant descriptions in the specification, they explain the operating principles of the embodiments. With reference to them, those of ordinary skill in the art should be able to understand other possible implementations and advantages of the present invention. The components in the figures are not drawn to scale, and similar reference symbols are generally used to denote similar components.

[0081] According to an embodiment of the present invention, an automatic labeling method for pathological images based on reinforcement learning and a deep neural network is provided.

[0082] The present invention is now further described with reference to the accompanying drawings and specific embodiments. The pathological image anno...
