Multi-class Chinese text classification method fusing global and local features

A text classification technology using local features, applied to text classification in natural language processing, which can solve the problem of being unable to obtain context information.

Examples

Embodiment 1

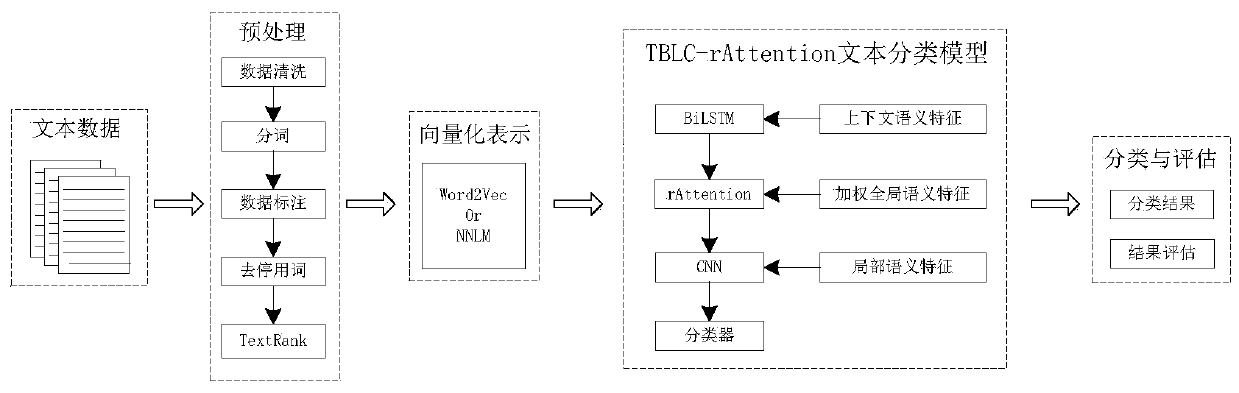

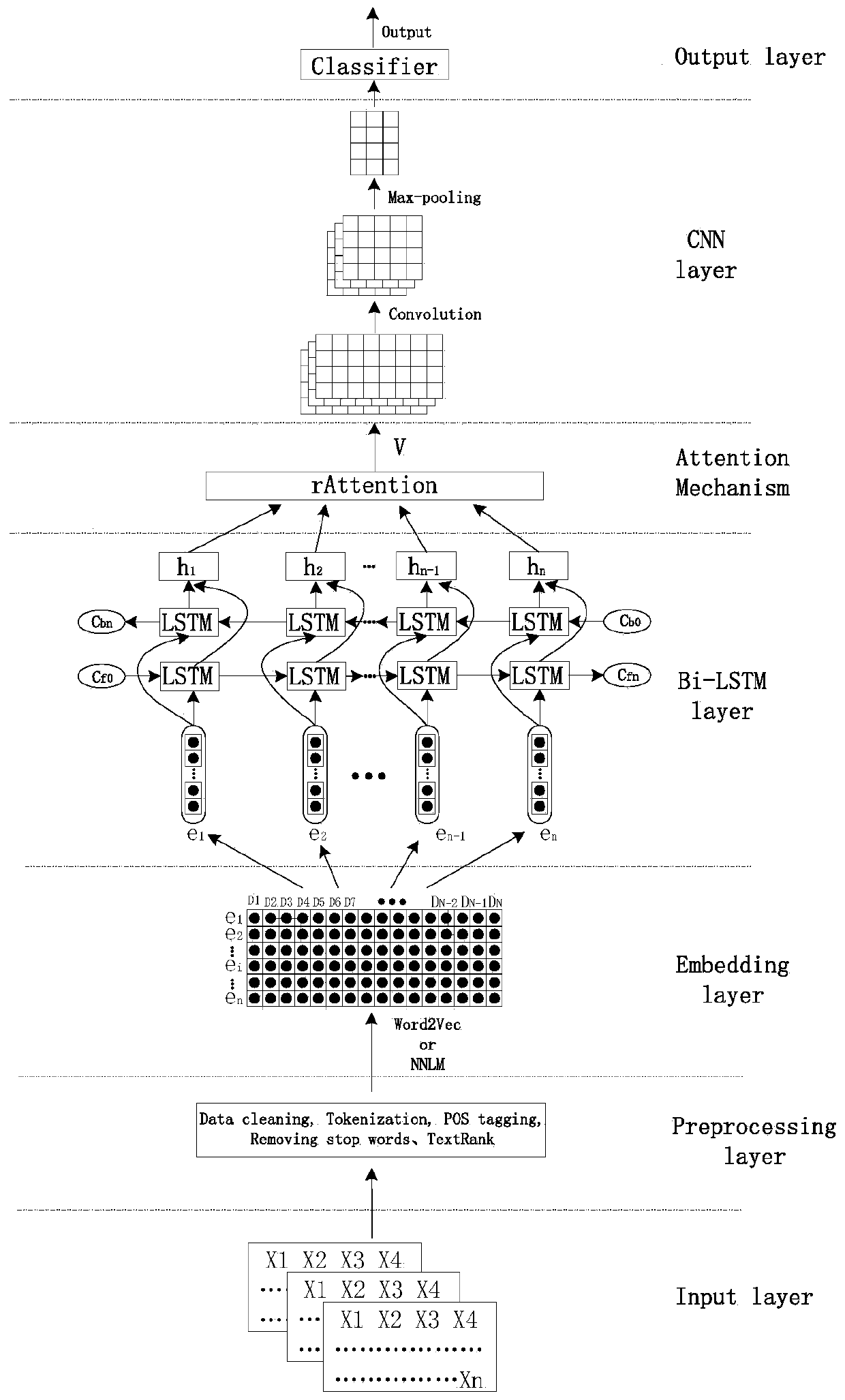

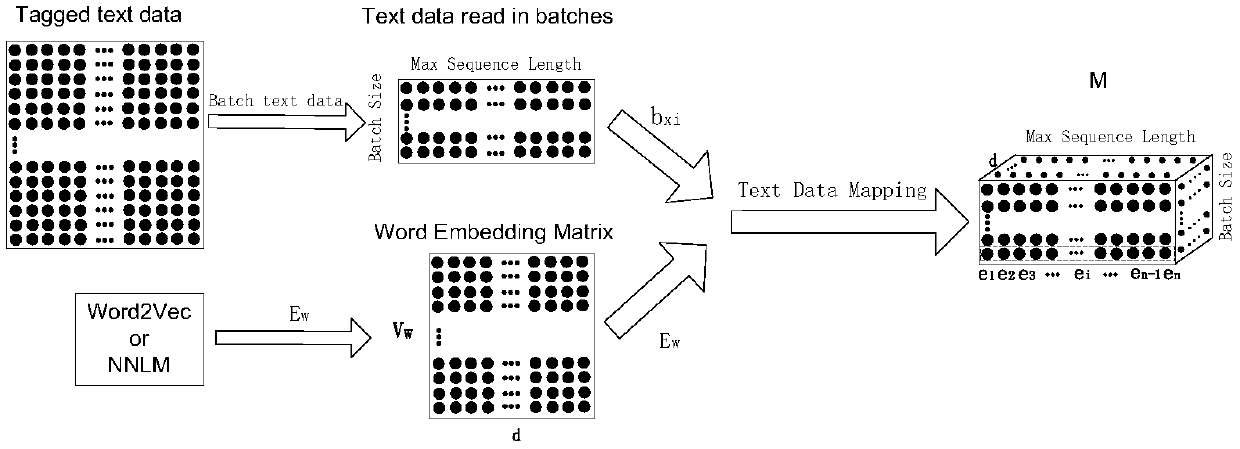

[0084] The present invention can be applied to text classification tasks on the Internet, such as public opinion analysis on e-commerce websites and text classification on news websites. According to one embodiment of the present invention, a multi-category Chinese text classification method that fuses global and local features is provided. In short, the method preprocesses the text data and converts it to a vector representation, trains the text classification model proposed by the present invention on the vectorized data, and uses the trained model to predict text categories. The overall process is shown in Figure 1 and includes the following steps:

[0085] Step S1, acquiring text data and performing preprocessing on the data.

[0086] The corpus used in this experiment was collected with a web crawler, which crawled comment data about the sales of ** cold medicine on a large-scale d...
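The preprocessing and vectorization stage described in this embodiment can be illustrated with a minimal sketch. It is not the patent's procedure: character-level tokenization, the reserved `PAD`/`UNK` indices, the helper names `preprocess`, `build_vocab`, and `vectorize`, and the two sample comments are all assumptions made for illustration. The source may well use word segmentation and pretrained embeddings instead.

```python
import re

PAD, UNK = 0, 1  # reserved indices (assumed convention, not from the source)

def preprocess(text):
    """Keep CJK characters, ASCII letters and digits; drop punctuation and spaces."""
    return re.sub(r"[^\u4e00-\u9fffA-Za-z0-9]", "", text)

def build_vocab(texts):
    """Map each character seen in the corpus to an integer index starting at 2."""
    vocab = {}
    for t in texts:
        for ch in preprocess(t):
            vocab.setdefault(ch, len(vocab) + 2)
    return vocab

def vectorize(text, vocab, max_len):
    """Convert text to a fixed-length index list: truncate, then right-pad with PAD."""
    ids = [vocab.get(ch, UNK) for ch in preprocess(text)][:max_len]
    return ids + [PAD] * (max_len - len(ids))

# Made-up sample comments, standing in for the crawled review data.
corpus = ["效果很好,推荐!", "没有效果。"]
vocab = build_vocab(corpus)
vectors = [vectorize(t, vocab, max_len=8) for t in corpus]
```

The resulting fixed-length index sequences are the kind of input an embedding layer would typically consume before the classification model is trained.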

Embodiment 2

[0117] The model proposed by the present invention is also applicable to long-text, multi-category Chinese text classification tasks. The long-text data comes from the THUCT Chinese text data set released by the Natural Language Processing Laboratory of Tsinghua University. The data set contains a large number of texts in 14 categories: finance, lottery, real estate, stock, home furnishing, education, technology, society, fashion, current affairs, sports, horoscope, games, and entertainment. The basic information on how the data set is divided is shown in Table 7. Figure 8 shows the sentence length distribution of the experimental corpus. The comparison results of the five reproduced classification models and the TBLC-rAttention model are shown in Table 8 and Table 9. Table 8 shows the overall comparison results of each model in the long-text multi-classification task; Table 9 shows the accuracy comparison results of e...
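To make the two kinds of comparison concrete, the sketch below computes an overall accuracy and a per-category breakdown over the 14 category names listed above. It is only an illustration: the function names are hypothetical, the toy labels are invented and are not results from the patent, and the per-category figure here is the fraction of each category's texts that were predicted correctly, which may differ from the metric the tables actually report.

```python
from collections import Counter

# Category names as listed in the text above.
CATEGORIES = [
    "finance", "lottery", "real estate", "stock", "home furnishing",
    "education", "technology", "society", "fashion", "current affairs",
    "sports", "horoscope", "games", "entertainment",
]

def overall_accuracy(y_true, y_pred):
    """Fraction of all texts whose predicted category matches the true one."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def per_category_accuracy(y_true, y_pred):
    """For each true category present, the fraction of its texts predicted correctly."""
    total, correct = Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        correct[t] += int(t == p)
    return {c: correct[c] / total[c] for c in total}

# Invented toy labels, for illustration only.
y_true = ["sports", "sports", "stock", "games"]
y_pred = ["sports", "games", "stock", "games"]

overall = overall_accuracy(y_true, y_pred)
by_category = per_category_accuracy(y_true, y_pred)
```

Reporting both views is useful with many categories, since a high overall score can hide a category that the model handles poorly.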
