Resource scheduling method, apparatus and device, and readable storage medium

A resource scheduling technology for computing resources, applied in resource allocation, multiprogramming devices, program control design, etc., that addresses problems such as reduced task execution efficiency of edge servers and the inability to allocate computing resources accurately.


Examples

Embodiment 1

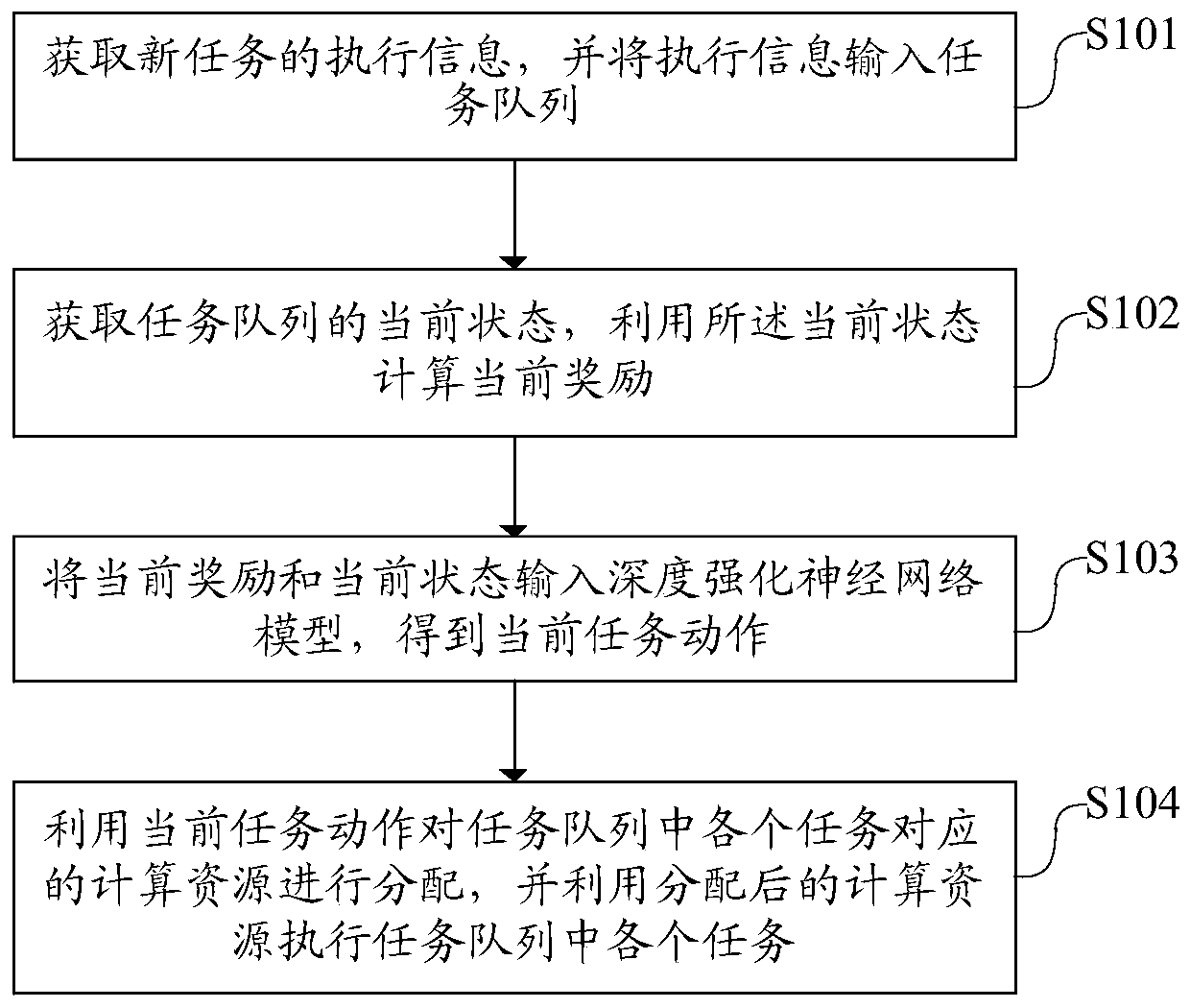

[0053] Please refer to Figure 1, a flowchart of a resource scheduling method provided by an embodiment of the present invention. The method includes:

[0054] S101: Acquire execution information of a new task, and input the execution information into a task queue.

[0055] Specifically, when the user uses an artificial intelligence application on a terminal, the terminal sends task information of the new task to the edge server. This embodiment does not limit the type of terminal; for example, it may be a smartphone or a tablet computer. Nor does this embodiment limit the specific artificial intelligence application, that is, the task type of the new task; for example, it may be image recognition, text recognition, or voice recognition. The execution time of different types ...
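Step S101 can be illustrated with a minimal Python sketch. It is not the patent's implementation: the `ExecutionInfo` record, its field names, the use of an estimated execution time, and the `TaskQueue` class are all assumptions made for illustration.

```python
from collections import deque
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ExecutionInfo:
    """Hypothetical execution-information record; field names are assumptions."""
    task_id: int
    task_type: str            # e.g. "image_recognition"
    est_exec_time_ms: float   # assumed per-task execution-time estimate


class TaskQueue:
    """FIFO queue holding the execution information of pending tasks."""

    def __init__(self) -> None:
        self._items = deque()  # holds ExecutionInfo objects in arrival order

    def push(self, info: ExecutionInfo) -> None:
        """S101: input the execution information of a new task into the queue."""
        self._items.append(info)

    def state(self) -> List[Tuple[str, float]]:
        """Snapshot of the queue contents, in arrival order."""
        return [(i.task_type, i.est_exec_time_ms) for i in self._items]

    def __len__(self) -> int:
        return len(self._items)


# Two tasks arriving from terminals (values are made up for the example).
queue = TaskQueue()
queue.push(ExecutionInfo(1, "image_recognition", 120.0))
queue.push(ExecutionInfo(2, "voice_recognition", 45.0))
```

A snapshot such as `queue.state()` is one plausible form for the "current state of the task queue" that the later modules consume; the actual state encoding is not given in this excerpt.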

Embodiment 2

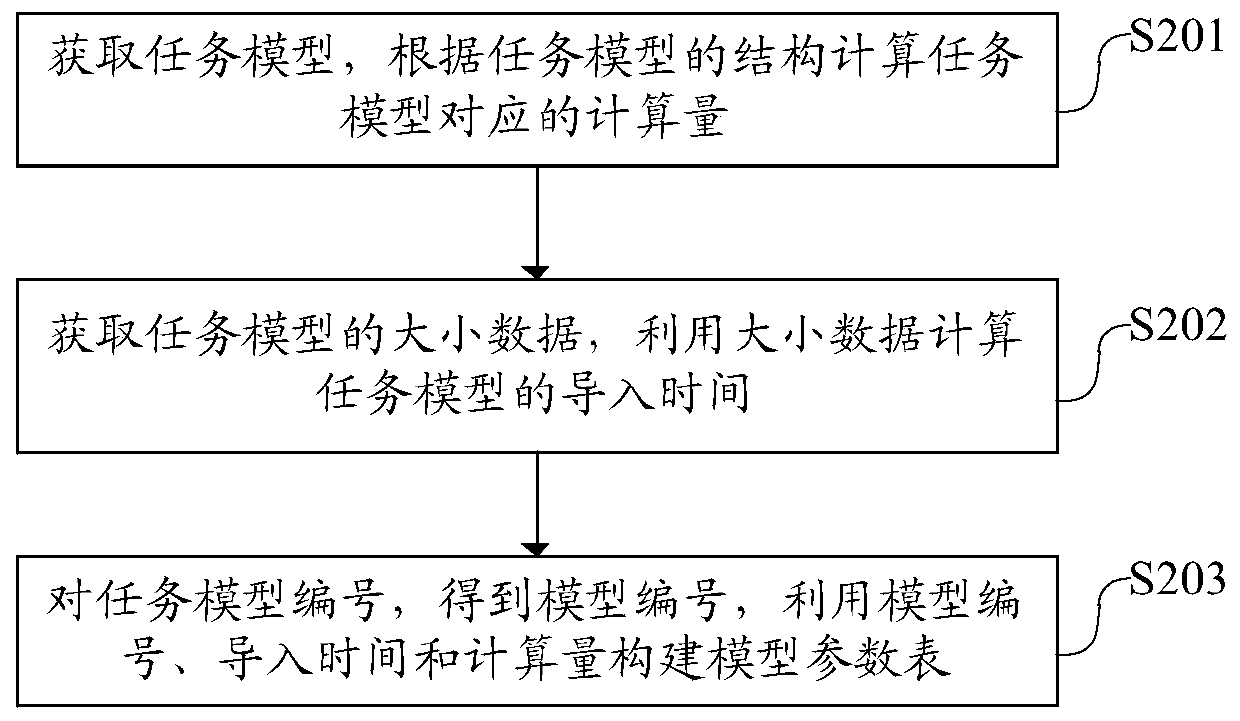

[0074] Before the method in Embodiment 1 is used to allocate the computing resources of the edge server, a model parameter table needs to be established in advance, so that the execution information of the new task can be obtained from the model parameter table. Please refer to Figure 2, a flowchart of another resource scheduling method provided by an embodiment of the present invention.

[0075] S201: Acquire a task model, and calculate a computation amount corresponding to the task model according to the structure of the task model.

[0076] Obtain the different task models used to perform different tasks, decompose each task model by neural network layer, and obtain the neural network layer information of each layer. This embodiment does not limit the method for acquiring the neural network layer information. For example, a network layer parameter table may be preset, and the network layer information of different neural network layers ...
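The layer-by-layer decomposition in S201 can be sketched as follows. The excerpt does not give the patent's formula for the computation amount, so this sketch assumes the commonly used multiply-accumulate (MAC) counts for convolutional and fully connected layers; the `Conv2D` and `Dense` records and the toy model are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass(frozen=True)
class Conv2D:
    """Hypothetical layer record for a 2D convolution (square kernel)."""
    in_channels: int
    out_channels: int
    kernel_size: int
    out_height: int
    out_width: int


@dataclass(frozen=True)
class Dense:
    """Hypothetical layer record for a fully connected layer."""
    in_features: int
    out_features: int


Layer = Union[Conv2D, Dense]


def layer_macs(layer: Layer) -> int:
    """Multiply-accumulate count of one layer (bias terms ignored)."""
    if isinstance(layer, Conv2D):
        # Each output element needs in_channels * k * k multiply-accumulates.
        return (layer.out_height * layer.out_width * layer.out_channels
                * layer.in_channels * layer.kernel_size ** 2)
    if isinstance(layer, Dense):
        return layer.in_features * layer.out_features
    raise TypeError(f"unsupported layer type: {type(layer).__name__}")


def model_macs(layers: List[Layer]) -> int:
    """Computation amount of a model as the sum over its layers."""
    return sum(layer_macs(layer) for layer in layers)


# Toy model: one 3x3 convolution on a 32x32 output map, then a classifier.
toy_model = [Conv2D(3, 8, 3, 32, 32), Dense(8 * 32 * 32, 10)]
print(model_macs(toy_model))  # 221184 + 81920 = 303104
```

Per-model totals computed this way could populate a table keyed by task model, in the spirit of the model parameter table mentioned in [0074]; the table's actual contents are not specified in this excerpt.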

Embodiment 3

[0083] The following describes the resource scheduling apparatus provided by the embodiments of the present invention. The descriptions of the resource scheduling apparatus below and of the resource scheduling method above may be read with reference to each other.

[0084] Please refer to Figure 5, a schematic structural diagram of a resource scheduling apparatus provided by an embodiment of the present invention, including:

[0085] The execution information obtaining module 100 is used to obtain the execution information of the new task, and input the execution information into the task queue;

[0086] The current reward calculation module 200 is used to obtain the current state of the task queue, and use the current state to calculate the current reward;

[0087] The task action acquisition module 300 is used to input the current reward and the current state into the deep reinforcement neural network model to obtain the current task action;

[0088] The compu...
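The data flow through modules 100, 200 and 300 can be sketched in Python as below. This is only an outline under stated assumptions: the state encoding (a tuple of estimated execution times), the reward (negative total pending work), the meaning of the action (an index into the queue), and the `shortest_first` policy standing in for the deep reinforcement neural network model are all placeholders, not taken from the patent.

```python
from typing import Callable, List, Tuple

State = Tuple[float, ...]  # assumed: estimated execution times of queued tasks
Policy = Callable[[float, State], int]


def current_state(queue: List[float]) -> State:
    """Module 200, first half: read the current state of the task queue."""
    return tuple(queue)


def current_reward(state: State) -> float:
    """Module 200, second half: placeholder reward (negative pending work)."""
    return -sum(state)


def shortest_first(reward: float, state: State) -> int:
    """Stand-in for the deep reinforcement model: pick the shortest task."""
    return min(range(len(state)), key=state.__getitem__)


def schedule_step(queue: List[float], new_task_time: float,
                  policy: Policy) -> int:
    """One pass through modules 100 -> 200 -> 300; returns the task action."""
    queue.append(new_task_time)        # module 100: enqueue execution info
    state = current_state(queue)       # module 200: current state
    reward = current_reward(state)     # module 200: current reward
    return policy(reward, state)       # module 300: current task action


pending = [30.0, 10.0]
action = schedule_step(pending, 20.0, shortest_first)
```

Passing the policy as a callable keeps the wiring independent of the model, so a trained network could replace `shortest_first` without changing `schedule_step`.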
