Video behavior recognition method based on a spatio-temporal generative adversarial network

A recognition method and network technology, applied in the field of computer vision and pattern recognition

Embodiment Construction

[0017] The present invention will be further described below through specific embodiments.

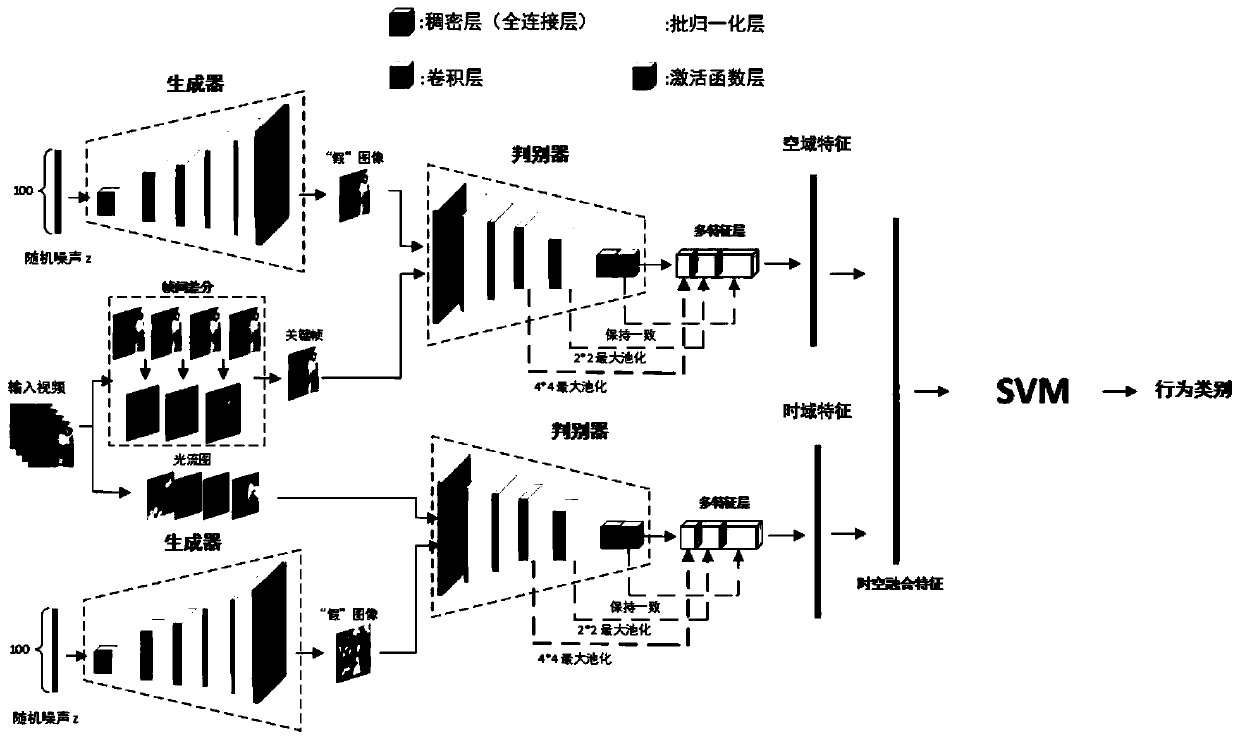

[0018] Most behavior recognition methods in the prior art still require labeled data sets and are limited by the scale of existing databases. To solve this problem, the present invention provides a video behavior recognition method based on a spatio-temporal generative adversarial network. As shown in Figure 1, the method comprises a feature extraction process and a recognition process. The specific steps are as follows:

[0019] Feature extraction process:

[0020] 1) Extract keyframes and optical flow maps from the video sequence. The keyframes serve as the input of the spatial generative adversarial network, and the optical flow maps serve as the input of the temporal generative adversarial network.
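The description does not name the optical flow algorithm used to build the flow maps for the temporal stream. The following is a minimal sketch that assumes the classical Horn-Schunck method, swapped in purely for illustration. It computes a dense flow field (u, v) between two grayscale frames and stacks it into a two-channel map. The function names `horn_schunck` and `flow_map` and the parameters `alpha` and `n_iter` are hypothetical and do not come from the patent.

```python
import numpy as np


def _neighbor_average(f: np.ndarray) -> np.ndarray:
    """Average of the 4-connected neighbours, replicating values at the border."""
    p = np.pad(f, 1, mode="edge")
    return (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]) / 4.0


def horn_schunck(prev: np.ndarray, nxt: np.ndarray, alpha: float = 1.0,
                 n_iter: int = 100) -> tuple[np.ndarray, np.ndarray]:
    """Dense optical flow (u, v) between two grayscale frames (Horn-Schunck).

    Assumption: Horn-Schunck stands in for whatever flow method the
    invention actually uses; alpha weights the smoothness term.
    """
    i1 = prev.astype(np.float64)
    i2 = nxt.astype(np.float64)
    # Spatial gradients (np.gradient returns d/drow, d/dcol) and temporal difference.
    iy, ix = np.gradient(i1)
    it = i2 - i1
    u = np.zeros_like(i1)
    v = np.zeros_like(i1)
    denom = alpha ** 2 + ix ** 2 + iy ** 2
    for _ in range(n_iter):
        u_avg = _neighbor_average(u)
        v_avg = _neighbor_average(v)
        # Jacobi update minimising brightness-constancy error plus smoothness.
        common = (ix * u_avg + iy * v_avg + it) / denom
        u = u_avg - ix * common
        v = v_avg - iy * common
    return u, v


def flow_map(prev: np.ndarray, nxt: np.ndarray, **kwargs) -> np.ndarray:
    """Stack (u, v) into a two-channel flow map of shape (2, H, W)."""
    u, v = horn_schunck(prev, nxt, **kwargs)
    return np.stack([u, v], axis=0)
```

Under this sketch, each keyframe would go to the spatial stream unchanged, while each `flow_map` output (or a stack of consecutive ones) would go to the temporal stream. Production systems often replace Horn-Schunck with a faster or more robust estimator, and the choice here is only illustrative.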

[0021] Specifically, the present invention extracts the keyframes of the video sequence using an inter-frame difference method. The inter-frame differen...
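The details of the inter-frame difference method are cut off above. The following is a minimal sketch under a stated assumption: a frame is treated as a keyframe when its mean absolute grayscale difference from the previous frame exceeds a fixed threshold, and the first frame is always kept. Other common variants instead pick local maxima of the difference curve. The function names and the `threshold` parameter are hypothetical.

```python
import numpy as np


def frame_differences(frames: np.ndarray) -> np.ndarray:
    """Mean absolute difference between consecutive grayscale frames.

    frames has shape (T, H, W); entry i of the result (shape (T - 1,))
    measures the change from frame i to frame i + 1.
    """
    f = frames.astype(np.float64)
    return np.abs(f[1:] - f[:-1]).mean(axis=(1, 2))


def select_keyframes(frames: np.ndarray, threshold: float) -> list[int]:
    """Indices of keyframes under a simple thresholding assumption.

    Frame 0 is always kept; frame i + 1 is kept whenever the difference
    between frames i and i + 1 exceeds `threshold`.
    """
    diffs = frame_differences(frames)
    keep = [0]
    keep.extend(int(i) + 1 for i in np.flatnonzero(diffs > threshold))
    return keep
```

For example, with six black 4x4 frames whose last three are brightened to 100, `select_keyframes(frames, 10.0)` keeps frame 0 and frame 3, where the scene changes. The threshold is a tuning parameter that the excerpt does not specify.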