Bag-of-words generation method and device based on feature matching

A technique combining feature matching and bag-of-words generation, applied in the computer field, that addresses the problem of excessive time consumption and reduces the time required.

Examples

Embodiment 1

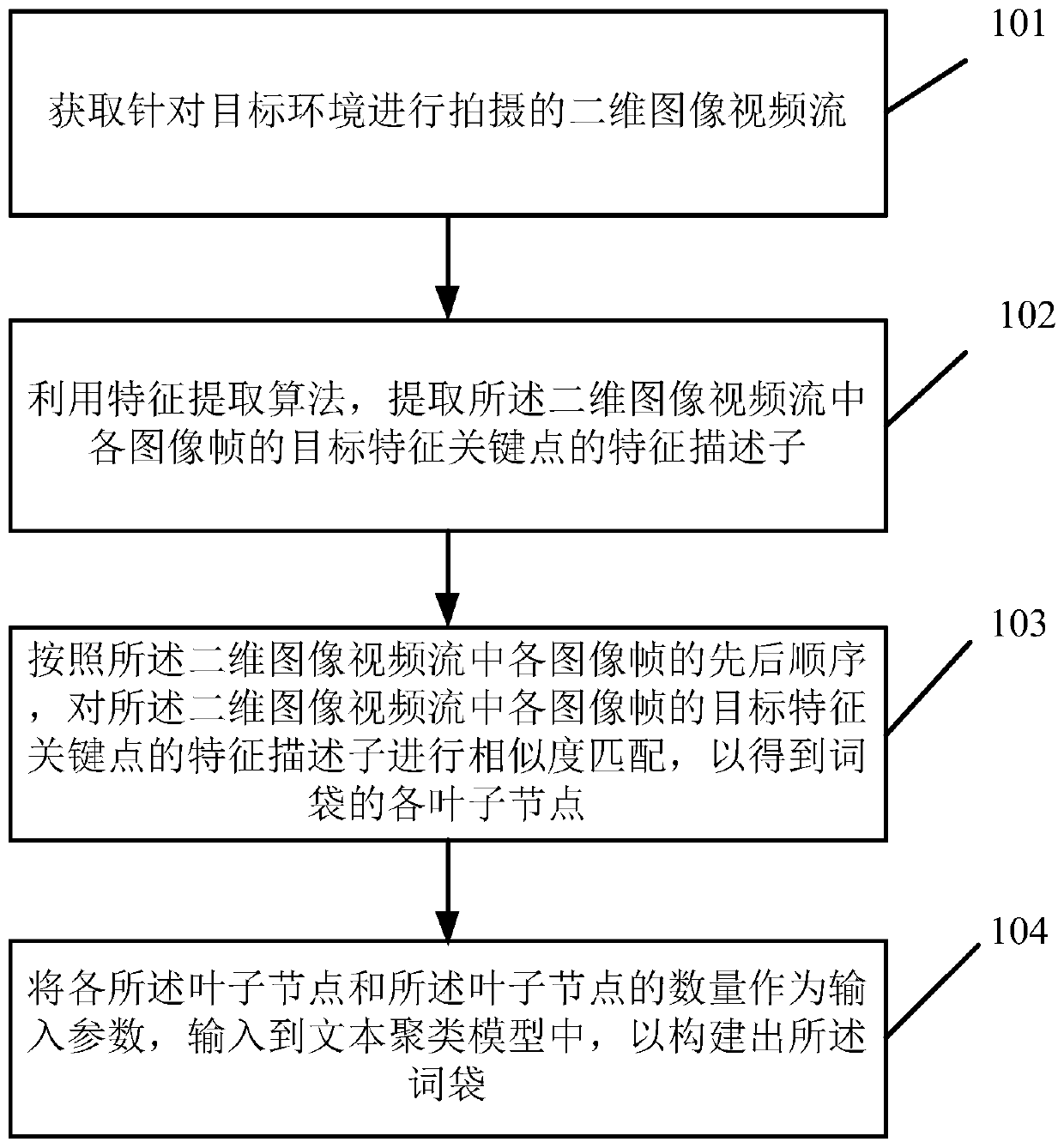

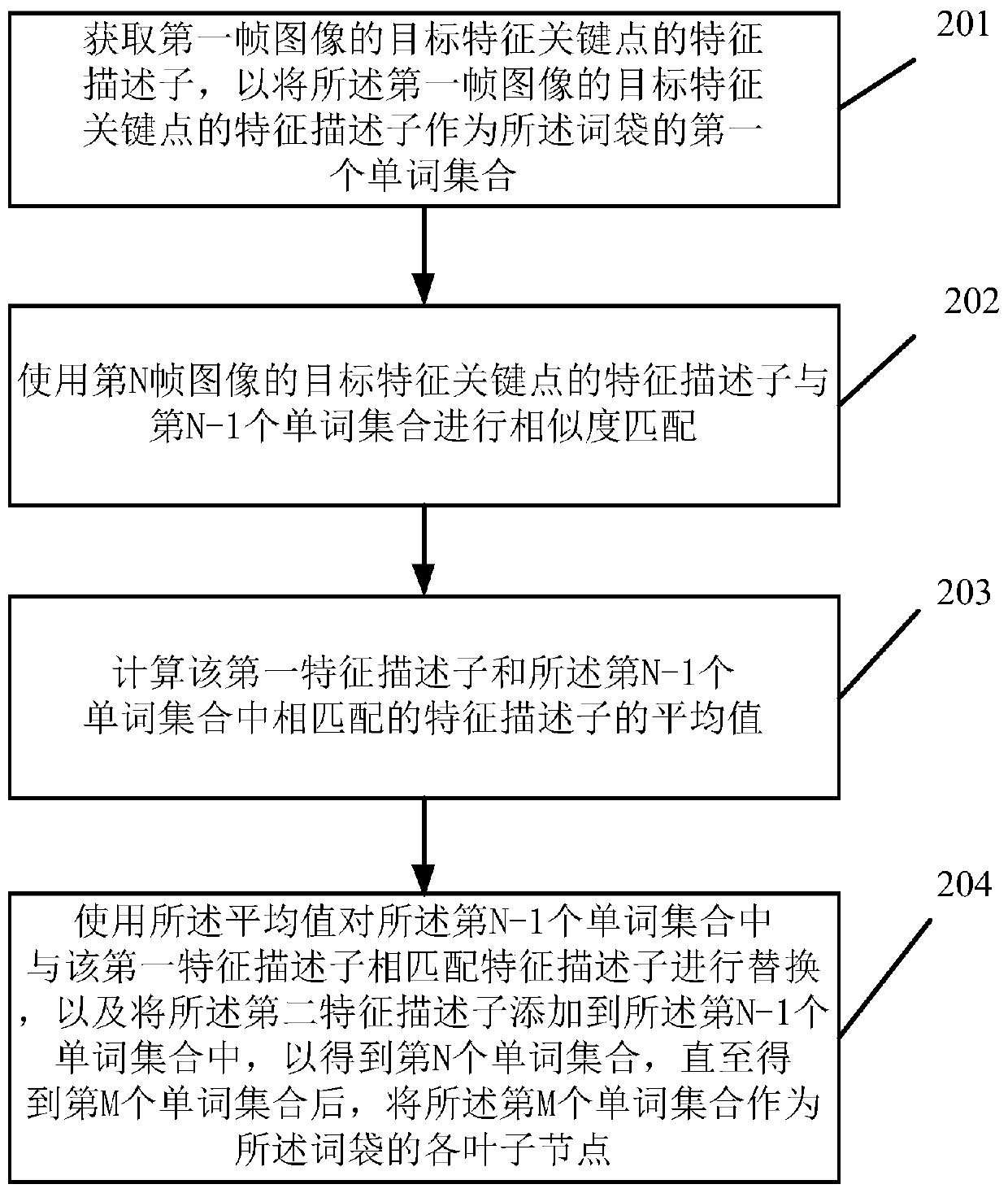

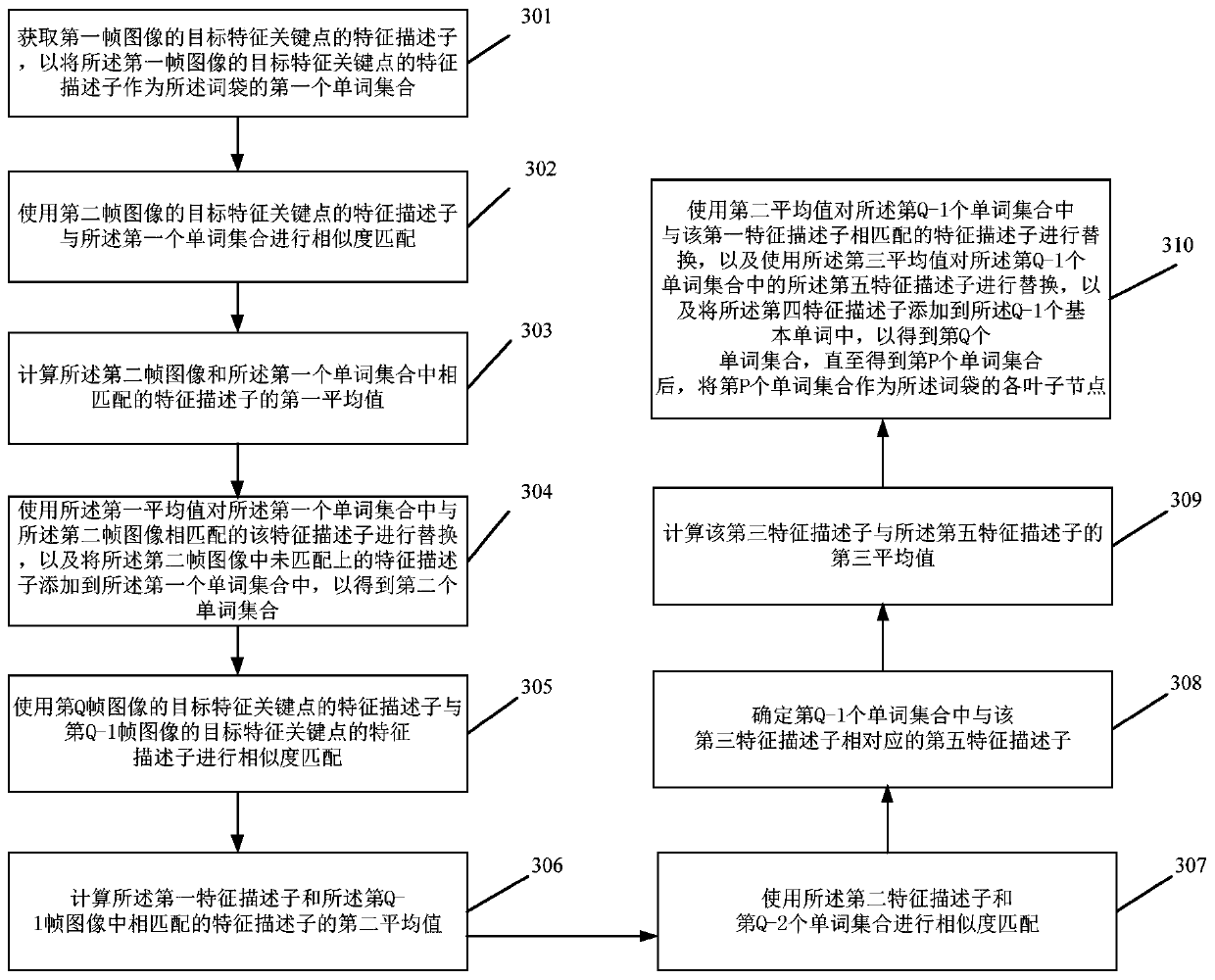

[0068] Figure 1 is a schematic flow diagram of a bag-of-words generation method based on feature matching provided in Embodiment 1 of the present application. As shown in Figure 1, the bag-of-words generation method based on feature matching includes the following steps:

[0069] Step 101. Obtain a two-dimensional image video stream captured of a target environment, where the target environment is the environment corresponding to the map constructed by the target device while performing simultaneous localization and mapping (SLAM).

[0070] Specifically, when an intelligent robot performs a task in an unknown environment, it needs to use SLAM technology to construct a map. Because errors accumulate while the map is being constructed, the resulting map is often not closed. At this point, a two-dimensional image video stream of the unknown environment must continue to be acquired so that the video stream can be used to correct the constructed map.

...
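The source does not name the feature extraction algorithm used to compute a descriptor for each target feature key point. As an illustration only, the sketch below computes a simplified BRIEF-style binary descriptor (uniformly sampled pixel pairs, no smoothing) from a small grayscale patch around a key point, and enforces the stated requirement that every frame yields at least one key point. The names `make_test_pairs`, `brief_descriptor`, `describe_frame`, and the patch/bit sizes are hypothetical and are not taken from the patent.

```python
import random
from typing import List, Sequence, Tuple

# Hypothetical representation: a key-point patch is a square grid of grayscale values.
Patch = Sequence[Sequence[int]]
Pixel = Tuple[int, int]


def make_test_pairs(patch_size: int, n_bits: int, seed: int = 0) -> List[Tuple[Pixel, Pixel]]:
    """Draw a fixed set of pixel-pair comparisons, reused for every key point.

    A fixed seed keeps descriptors comparable across frames.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(n_bits):
        a = (rng.randrange(patch_size), rng.randrange(patch_size))
        b = (rng.randrange(patch_size), rng.randrange(patch_size))
        pairs.append((a, b))
    return pairs


def brief_descriptor(patch: Patch, pairs: List[Tuple[Pixel, Pixel]]) -> int:
    """Pack one bit per pair into an int: bit i is 1 if pixel a_i is darker than pixel b_i."""
    bits = 0
    for i, ((ra, ca), (rb, cb)) in enumerate(pairs):
        if patch[ra][ca] < patch[rb][cb]:
            bits |= 1 << i
    return bits


def describe_frame(patches: List[Patch], pairs: List[Tuple[Pixel, Pixel]]) -> List[int]:
    """Describe every key-point patch of one frame; at least one patch is required."""
    if not patches:
        raise ValueError("each image frame must yield at least one target feature key point")
    return [brief_descriptor(p, pairs) for p in patches]
```

In practice a library detector/descriptor (for example OpenCV's ORB via `cv2.ORB_create()` and `detectAndCompute`) would normally replace this hand-written test; the point here is only that each key point ends up as a fixed-length binary code that can be compared cheaply.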

Embodiment 2

[0113] Figure 4 is a schematic structural diagram of a bag-of-words generation device based on feature matching provided in Embodiment 2 of the present application. As shown in Figure 4, the bag-of-words generation device based on feature matching comprises:

[0114] The acquisition unit 41 is configured to acquire a two-dimensional image video stream captured of a target environment, where the target environment is the environment corresponding to the map constructed by the target device during simultaneous localization and mapping (SLAM);

[0115] The extraction unit 42 is configured to use a feature extraction algorithm to extract a feature descriptor for each target feature key point in each image frame of the two-dimensional image video stream, where at least one target feature key point is extracted from each image frame;

[0116] The matching unit 43 is configured to perform similarity matching on the feature descri...
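The description of the matching unit is cut off, so the exact matching rule is not available here. A minimal sketch, assuming binary descriptors stored as Python ints, compares each descriptor with the previous frame's descriptors by Hamming distance and accepts the nearest one if it lies within a hypothetical threshold `max_dist`. It then assumes, as an illustration and not as the patent's confirmed method, that descriptors chained together by matches are merged into one "word" using a union-find structure. All function names here are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Key = Tuple[int, int]  # (frame index, descriptor index within that frame)


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def best_match(query: int, candidates: List[int], max_dist: int) -> Optional[int]:
    """Index of the nearest candidate, or None if none is within max_dist."""
    best_idx, best_d = None, max_dist + 1
    for j, c in enumerate(candidates):
        d = hamming(query, c)
        if d < best_d:
            best_idx, best_d = j, d
    return best_idx


def build_words(frames: List[List[int]], max_dist: int) -> Dict[Key, int]:
    """Assign a word id to every descriptor by merging matches between consecutive frames."""
    parent: Dict[Key, Key] = {}

    def find(x: Key) -> Key:
        # Path halving keeps the union-find trees shallow.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(x: Key, y: Key) -> None:
        rx, ry = find(x), find(y)
        if rx != ry:
            parent[ry] = rx

    for f, descs in enumerate(frames):
        for i in range(len(descs)):
            parent[(f, i)] = (f, i)

    for f in range(1, len(frames)):
        for i, d in enumerate(frames[f]):
            j = best_match(d, frames[f - 1], max_dist)
            if j is not None:
                union((f, i), (f - 1, j))

    # Relabel each group with a dense word id, in first-seen order.
    word_of_root: Dict[Key, int] = {}
    words: Dict[Key, int] = {}
    for key in list(parent):
        root = find(key)
        if root not in word_of_root:
            word_of_root[root] = len(word_of_root)
        words[key] = word_of_root[root]
    return words
```

Matching only against the previous frame keeps each step linear in the number of descriptors per frame, which avoids an iterative clustering pass over the whole descriptor set; whether the patent gains its time savings this way is not stated in the visible text.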

Embodiment 3

[0141] Figure 6 is a schematic structural diagram of an electronic device provided in Embodiment 3 of the present application. The device includes a processor 601, a storage medium 602, and a bus 603. The storage medium 602 includes the bag-of-words generation device based on feature matching shown in Figure 4 and stores machine-readable instructions executable by the processor 601. When the electronic device runs the above-mentioned bag-of-words generation method based on feature matching, the processor 601 communicates with the storage medium 602 through the bus 603 and executes the machine-readable instructions to perform the following steps:

[0142] Obtaining a two-dimensional image video stream captured of the target environment, where the target environment is the environment corresponding to the map constructed by the target device during simultaneous localization and mapping (SLAM);

[0143] Using a feature extraction algorithm...
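The visible text does not show how the generated bag of words is used to correct an unclosed SLAM map. In many visual SLAM systems (an assumption here, not a statement about this patent), each frame is summarized as a normalized histogram over visual words, and earlier frames whose histograms score highly against the current frame become loop-closure candidates. The sketch below uses plain term frequencies (no tf-idf weighting) and the L1-based score 1 - 0.5 * ||v1 - v2||_1 for L1-normalized vectors; `bow_vector`, `l1_score`, `loop_candidates`, `threshold`, and `skip_recent` are hypothetical names and parameters.

```python
from collections import Counter
from typing import Dict, List

BowVector = Dict[int, float]  # word id -> normalized frequency


def bow_vector(word_ids: List[int]) -> BowVector:
    """L1-normalized term-frequency histogram of the words observed in one frame."""
    counts = Counter(word_ids)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {w: c / total for w, c in counts.items()}


def l1_score(v1: BowVector, v2: BowVector) -> float:
    """Similarity in [0, 1]: 1.0 for identical histograms, 0.0 for disjoint word sets."""
    keys = set(v1) | set(v2)
    diff = sum(abs(v1.get(k, 0.0) - v2.get(k, 0.0)) for k in keys)
    return 1.0 - 0.5 * diff


def loop_candidates(history: List[BowVector], current: BowVector,
                    threshold: float, skip_recent: int) -> List[int]:
    """Indices of earlier frames scoring at least `threshold` against the current frame.

    The most recent `skip_recent` frames are ignored, since adjacent frames are
    trivially similar and do not indicate a revisit.
    """
    limit = max(0, len(history) - skip_recent)
    return [i for i in range(limit) if l1_score(history[i], current) >= threshold]
```

A candidate found this way would still need geometric verification before being used to close the map; that step is outside what this section describes.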
