Neural machine translation method based on sample guidance

A sample-guided machine translation technique, applied in the field of neural machine translation (NMT), that addresses problems such as inapplicability to NMT, the inability to make full use of translation memory, and incomplete solutions, and achieves the effect of avoiding interference.

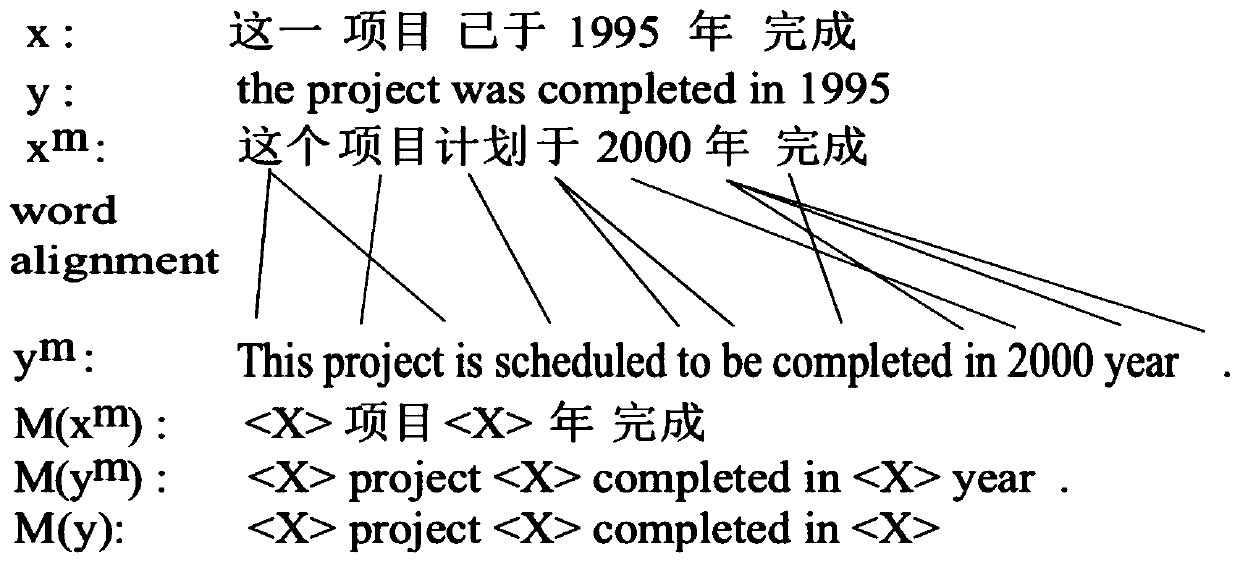


Embodiment Construction

[0036] The present invention is described further below with reference to the accompanying drawings and specific embodiments, so that those skilled in the art can better understand and implement it. The examples given are not intended to limit the present invention.

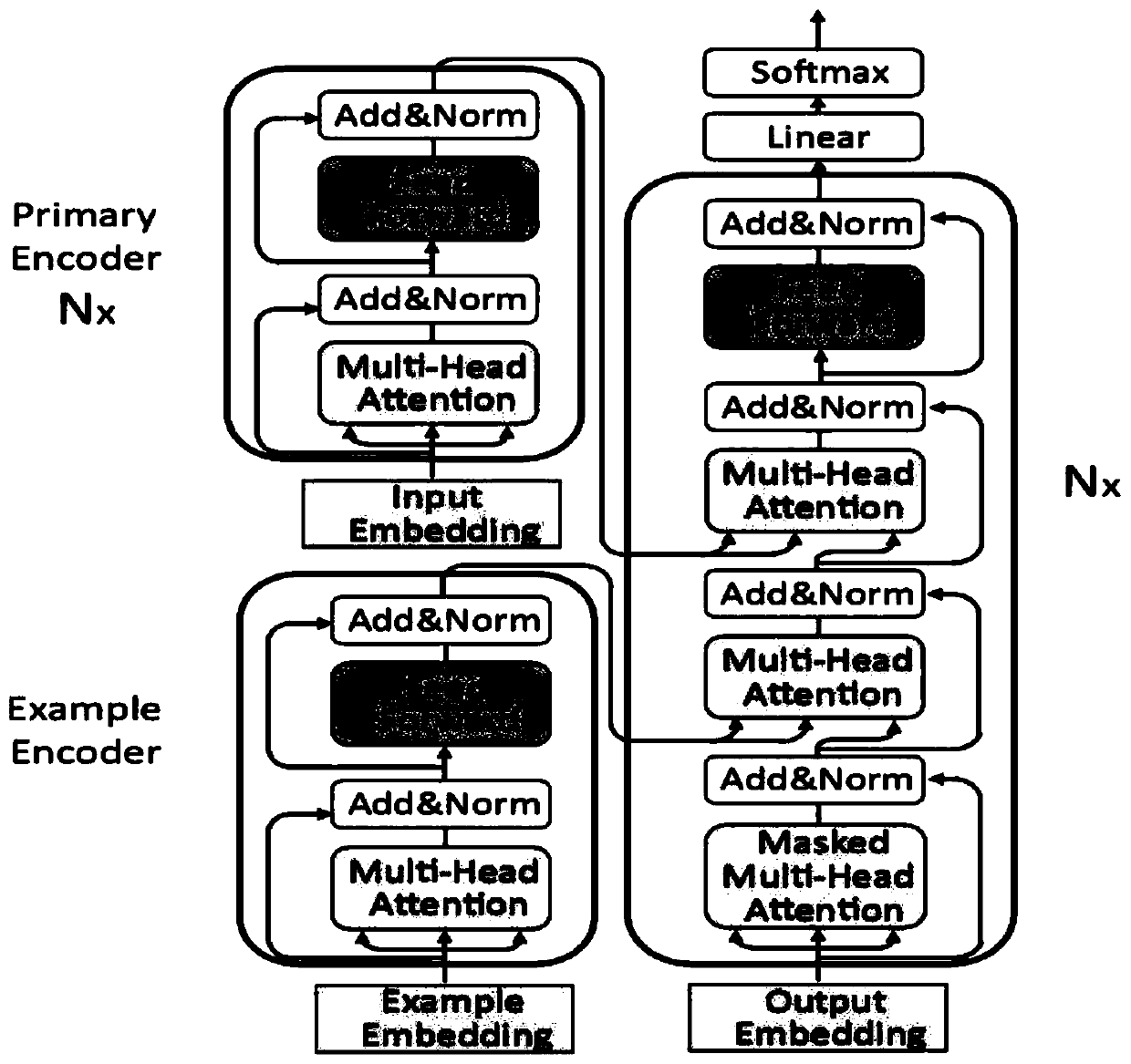

[0037] Background: NMT model based on the attention mechanism

[0038] Neural machine translation systems generally use the encoder-decoder framework to perform translation. Each word in the training corpus is assigned an initialized word vector, and the word vectors of all words together constitute a word vector dictionary. A word vector is generally a multi-dimensional vector in which each dimension is a real number. The number of dimensions is generally chosen according to experimental results. For example, for the word "we", its word vector may be .
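
The word vector dictionary described in [0038] can be illustrated with a minimal sketch. This is not the patent's implementation: the function name `build_word_vector_dictionary`, the toy corpus, the dimension of 8, the whitespace tokenization, and the uniform initialization range are all assumptions made for illustration only.

```python
import random

def build_word_vector_dictionary(corpus, dim=8, seed=0):
    """Map every distinct word in the corpus to a randomly initialized vector.

    `dim` stands in for the dimensionality that would be tuned experimentally;
    the uniform range [-0.1, 0.1] is an assumed, commonly used initialization.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    table = {}
    for sentence in corpus:
        for word in sentence.split():  # whitespace tokenization (assumption)
            if word not in table:
                # Each dimension of the word vector is a real number.
                table[word] = [rng.uniform(-0.1, 0.1) for _ in range(dim)]
    return table

# Hypothetical toy training corpus.
corpus = ["we like translation", "we study translation memory"]
word_vectors = build_word_vector_dictionary(corpus, dim=8)

print(len(word_vectors))        # number of distinct words in the corpus
print(len(word_vectors["we"]))  # dimensionality of one word vector
```

In a real NMT system these vectors would typically be stored as rows of an embedding matrix and updated during training along with the rest of the model; the plain dictionary here only shows the word-to-vector mapping.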

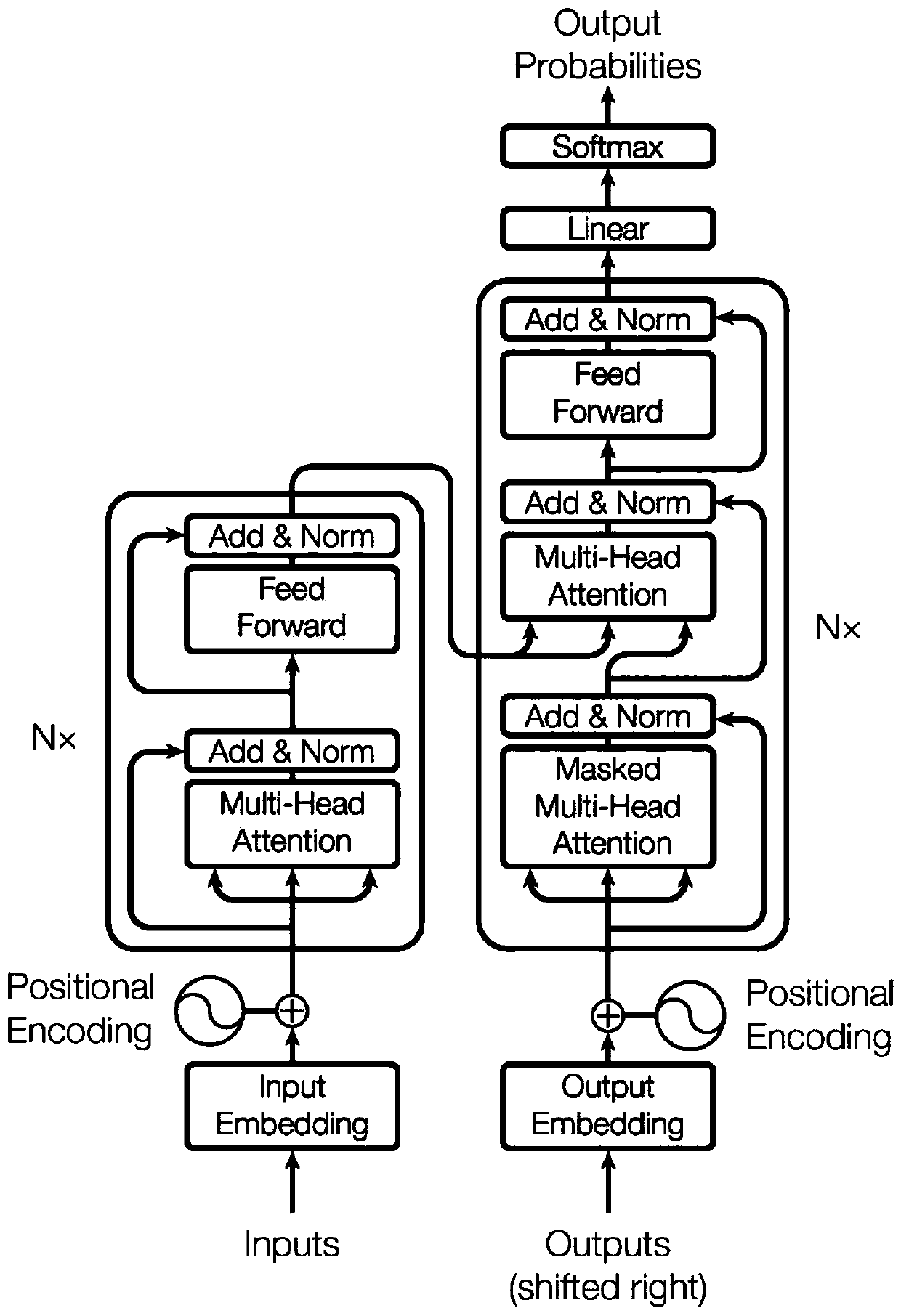

[0039] Transformer is a model proposed by Google in 2017; the structure is as...
