Dynamic gesture recognition method and system based on two-dimensional convolutional network

A dynamic gesture recognition technology based on two-dimensional convolution, applied in neural learning methods, character and pattern recognition, biological neural network models, etc. It addresses the problem of increasingly difficult recognition tasks, reduces feature redundancy and the amount of calculation, and improves recognition accuracy.

Examples

Embodiment 1

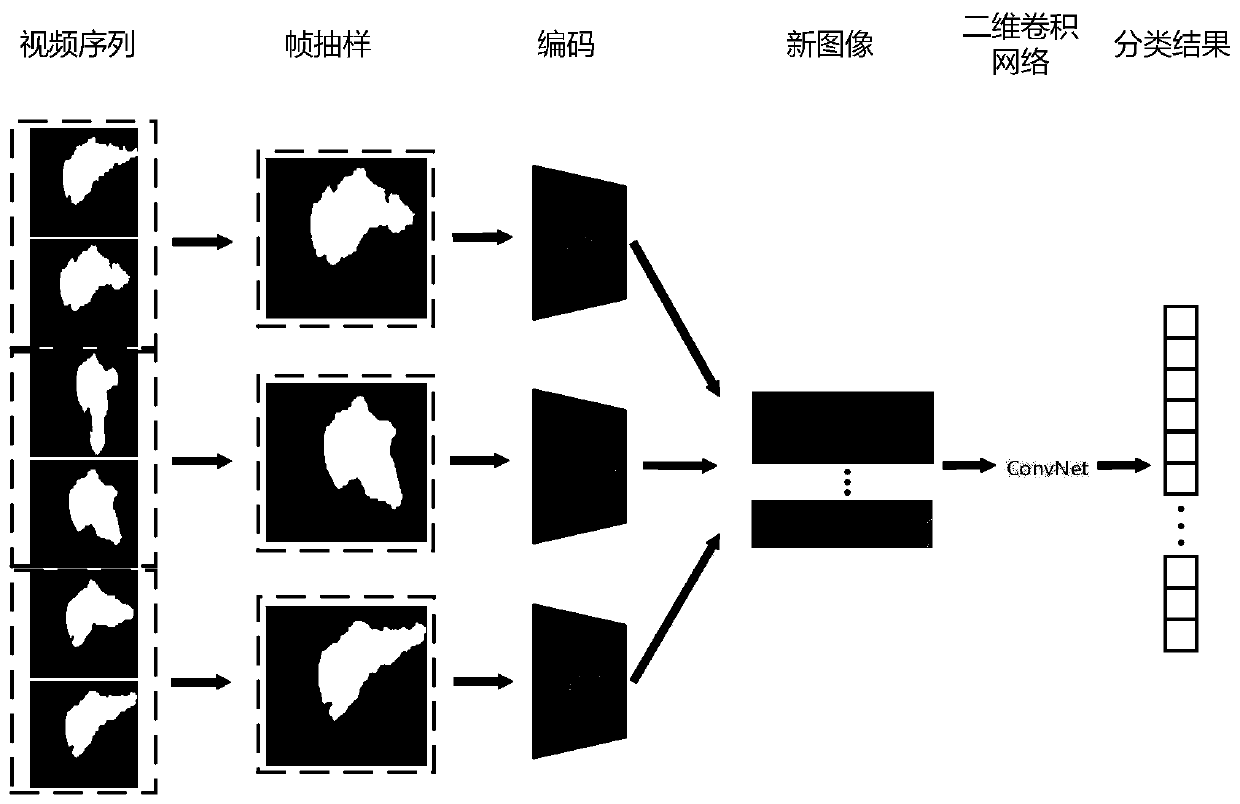

[0076] As shown in Figure 1 and Figure 3, let the input be a video sequence W.

[0077] S1: Frame sampling

[0078] Because video is continuous, adjacent frames differ only slightly. If the video sequence is not frame-sampled, the resulting action features are highly redundant, which increases the amount of calculation and reduces recognition accuracy.

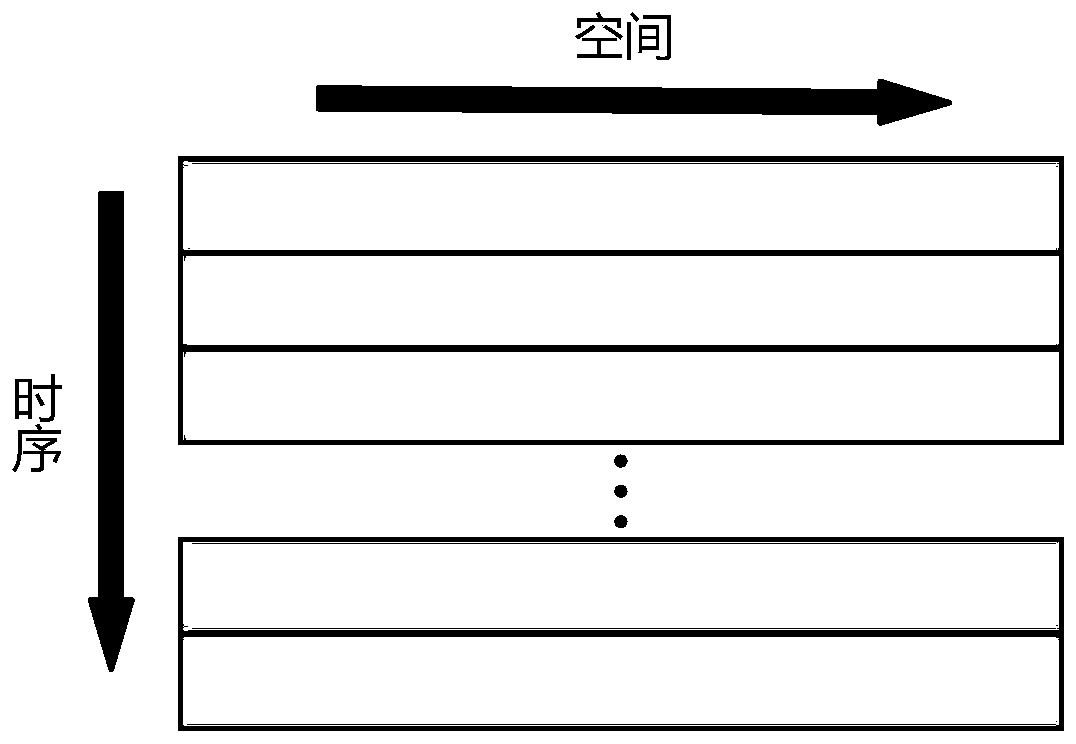

[0079] The input video sequence W is divided equally into K segments: {S_1, S_2, S_3, ..., S_K}, each containing the same number of frames. From each video segment S_k, k = 1, 2, ..., K, one frame is extracted in a certain way and denoted T_k, so that S_k and T_k are in one-to-one correspondence. Through frame sampling, the sampled image sequence {T_1, T_2, T_3, ..., T_K} represents the original video W. In this way, the amount of calculation is greatly reduced, and at the same time, the ability to mod...
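The segment-based sampling described in [0079] can be sketched in Python as follows. This is a minimal illustration, not the patent's implementation: the text only says a frame is extracted "in a certain way", so the two selection rules here (segment midpoint, or a random frame when a random generator is supplied) are assumptions, as are the function names `segment_bounds` and `sample_frame_indices` and the handling of frame counts that are not divisible by K.

```python
import random


def segment_bounds(num_frames, k):
    """Split frame indices 0..num_frames-1 into k contiguous segments.

    Segments are equal in length when num_frames is divisible by k;
    otherwise their lengths differ by at most one (an assumption, since
    the source only covers the equal-length case).
    """
    if k <= 0 or num_frames < k:
        raise ValueError("need at least one frame per segment")
    return [(i * num_frames // k, (i + 1) * num_frames // k) for i in range(k)]


def sample_frame_indices(num_frames, k, rng=None):
    """Return one frame index T_k per segment S_k.

    With rng=None the middle frame of each segment is chosen
    (deterministic); otherwise a frame is drawn uniformly at random
    from each segment. Both rules are illustrative assumptions.
    """
    indices = []
    for start, end in segment_bounds(num_frames, k):
        if rng is None:
            indices.append((start + end - 1) // 2)
        else:
            indices.append(rng.randrange(start, end))
    return indices


if __name__ == "__main__":
    # A 40-frame video reduced to K = 8 representative frames.
    print(sample_frame_indices(40, 8))
    print(sample_frame_indices(40, 8, rng=random.Random(0)))
```

Whichever selection rule is used, each sampled index stays inside its own segment, so the K sampled frames still span the whole video in temporal order.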

Embodiment 2

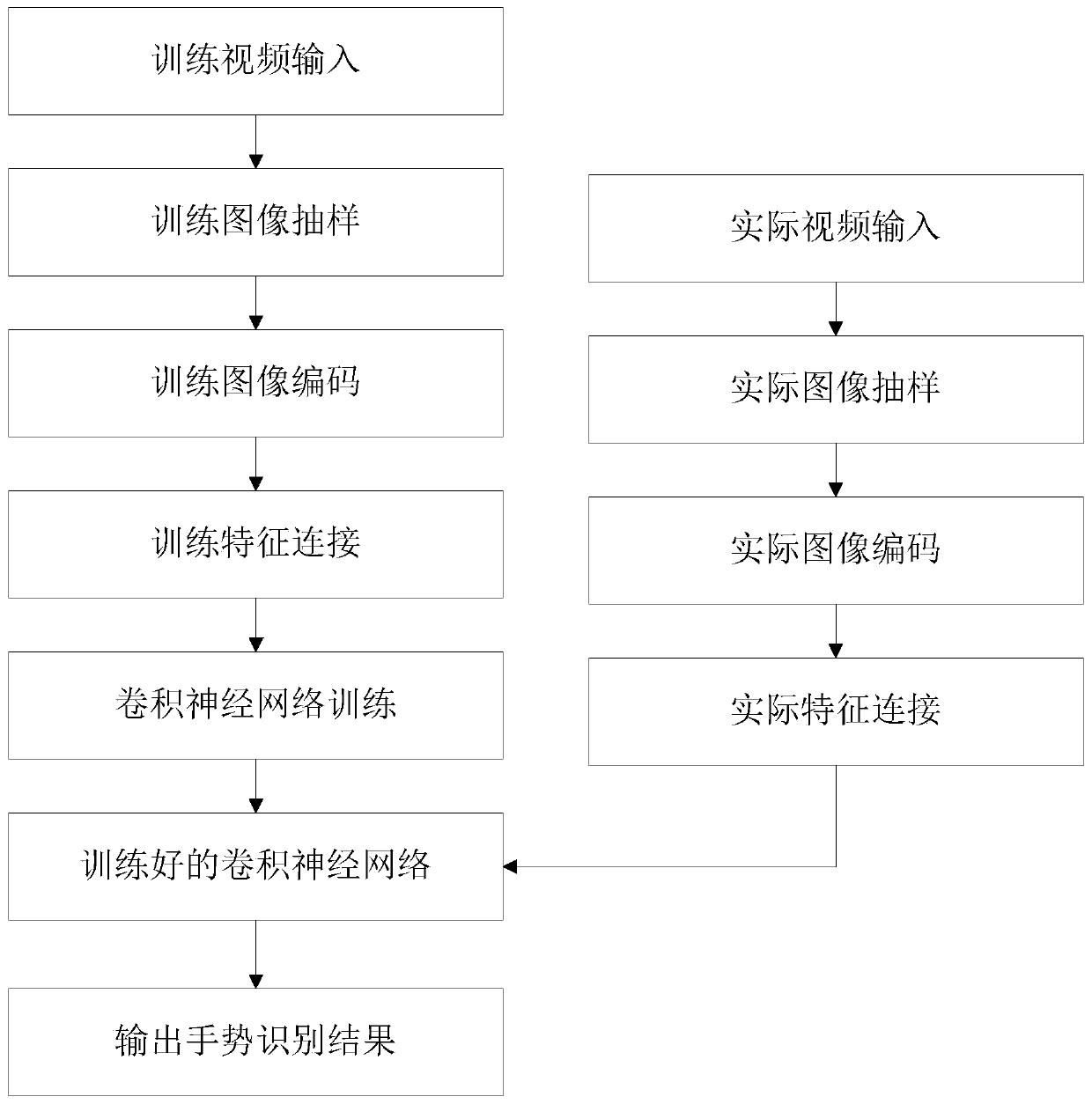

[0112] Embodiment 2, as shown in Figure 4:

[0113] A dynamic gesture recognition system based on a two-dimensional convolutional network, including:

[0114] A frame sampling module, which collects the actual dynamic gesture video, splits the video into frames, and performs frame sampling on the resulting actual images;

[0115] An image encoding module, which encodes the actual images after frame sampling to obtain the actual feature vector of each image;

[0116] A feature vector fusion module, which fuses the actual feature vectors to obtain the actual feature matrix;

[0117] A gesture recognition module, which inputs the actual feature matrix into the trained two-dimensional convolutional neural network and outputs the gesture recognition result.
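The data flow between the four modules in [0114] to [0117] can be sketched as follows. This is a hedged illustration only: the source does not specify the encoder, the fusion rule, or the network architecture, so `encode` and `classify` are hypothetical callables supplied by the caller, fusion is assumed to mean stacking the K feature vectors as rows of a K x D matrix, and the midpoint sampling rule is likewise an assumption.

```python
from typing import Callable, List, Sequence

Frame = Sequence[Sequence[float]]  # one image as rows of pixel values
Vector = List[float]
Matrix = List[List[float]]


def sample_frames(video: Sequence[Frame], k: int) -> List[Frame]:
    """Frame sampling module: keep the middle frame of each of k segments."""
    n = len(video)
    if k <= 0 or n < k:
        raise ValueError("need at least one frame per segment")
    return [video[(i * n // k + (i + 1) * n // k - 1) // 2] for i in range(k)]


def fuse(vectors: Sequence[Vector]) -> Matrix:
    """Feature vector fusion module: stack K length-D vectors into K x D.

    Row-wise stacking is an assumption; the source only states that the
    vectors are fused into a feature matrix.
    """
    if len({len(v) for v in vectors}) != 1:
        raise ValueError("feature vectors must be non-empty and equal in length")
    return [list(v) for v in vectors]


def recognize(
    video: Sequence[Frame],
    k: int,
    encode: Callable[[Frame], Vector],   # hypothetical image encoding module
    classify: Callable[[Matrix], str],   # stand-in for the trained 2D CNN
) -> str:
    """Run sampling, encoding, fusion, and recognition in sequence."""
    frames = sample_frames(video, k)
    vectors = [encode(frame) for frame in frames]
    return classify(fuse(vectors))


if __name__ == "__main__":
    # Toy data: six 2x2 frames whose pixels all equal the frame index.
    video = [[[float(i)] * 2 for _ in range(2)] for i in range(6)]
    row_means = lambda frame: [sum(row) / len(row) for row in frame]
    shape_label = lambda m: f"{len(m)}x{len(m[0])} feature matrix"
    print(recognize(video, 3, row_means, shape_label))
```

Passing the encoder and classifier in as parameters keeps the sketch independent of any particular deep learning library; in practice both would be replaced by trained models.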

[0118] Therefore, in this embodiment of the application, the source video stream is processed into a single image and sent to the two-dimensional convolutional network to obtain the classification result of the g...

Embodiment 3

[0120] The present disclosure also provides an electronic device, including a memory, a processor, and computer instructions stored in the memory and run on the processor. When the computer instructions are executed by the processor, each operation in the method is completed. For brevity, they are not repeated here.
