A Speech Synthesis Method Based on Speech Radar and Video

A technology of speech synthesis and speech, which is applied in the field of radar, can solve the problem of speech synthesis of radar signal and image information, and achieve the effects of natural pronunciation, wide application scenarios and strong anti-noise

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

preparation example Construction

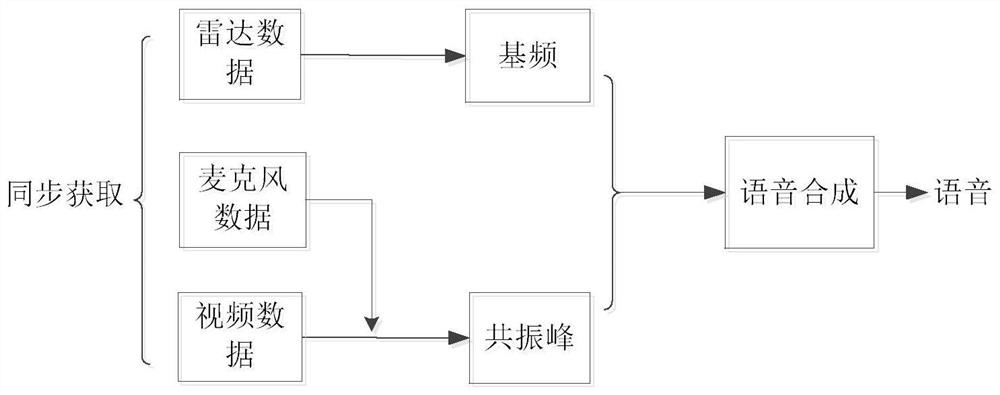

[0016] In conjunction with accompanying drawing, a kind of voice synthesis method based on voice radar and video of the present invention comprises the following steps:

[0017] Step 1. Use the radar echo signal to obtain the fundamental frequency information of the voice, specifically: the non-contact voice radar sends a continuous sine wave to the speaker, the receiving antenna receives the echo signal, and then preprocesses the received echo signal, Fundamental frequency and higher harmonic mode decomposition, time-frequency signal processing, so as to obtain the frequency of time-varying vocal fold vibration, that is, the fundamental frequency of the speech signal;

[0018] The radar echo signal is the vocal fold vibration signal of the speaker collected by the radar echo; the speaker's pronunciation is the sound of a certain character.

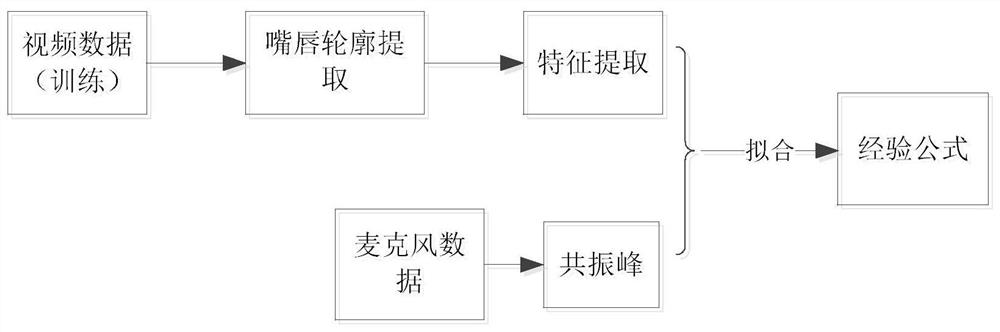

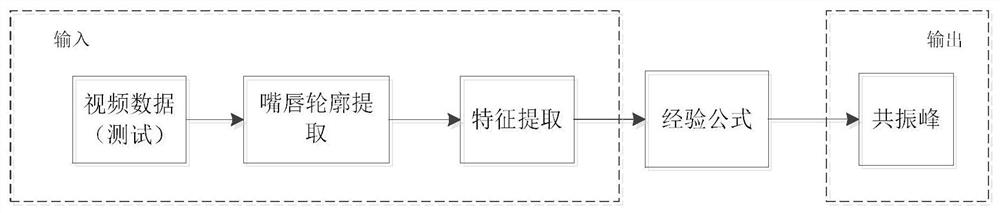

[0019] Step 2. Fit the time-varying motion feature extracted from the lip video information when the speaker is speaking and the time-va...

Embodiment

[0040] In this embodiment, an adult man sends an English character "A", and the speaker obtains the fundamental frequency information of the voice by the radar echo signal when sending "A". Antenna reception, preprocessing of the echo, decomposition of the fundamental frequency and high-order harmonic mode, and time-frequency signal processing, so as to obtain the time-varying frequency of vocal fold vibration, that is, the fundamental frequency of the speech signal.

[0041] The motion features extracted from lip video information and the formants extracted from the voice signal obtained synchronously by the microphone when other speakers pronounce "A" are fitted to obtain the empirical formula for the mapping relationship between lip motion features and three groups of formants; from the empirical formula, The video information of the speaker's lips to be synthesized is used as input for calculation, and the output is three sets of time-varying formants of the speaker's voice...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com