A video jitter removing method based on a fusion motion model

The method relates to motion models and video technology, with applications in television, color television, and color television components. It addresses the failure of existing video de-shake methods, including 3D reconstruction failure, and aims to improve the viewing experience.


Embodiment

[0025] The video de-shake method based on the fusion motion model is implemented according to the following steps:

[0026] 1. Obtain feature point matches and optical flow between adjacent video frames:

[0027] Convert each frame to grayscale and extract SIFT feature points. For each feature point, use the k-nearest-neighbor method to find the two feature points in the adjacent frame whose descriptors are closest to it in Euclidean space. Compute the difference between the descriptor distances of these two candidates to the original feature point. If the difference is greater than a threshold, the closest candidate is considered a correct match and the matching result is retained.

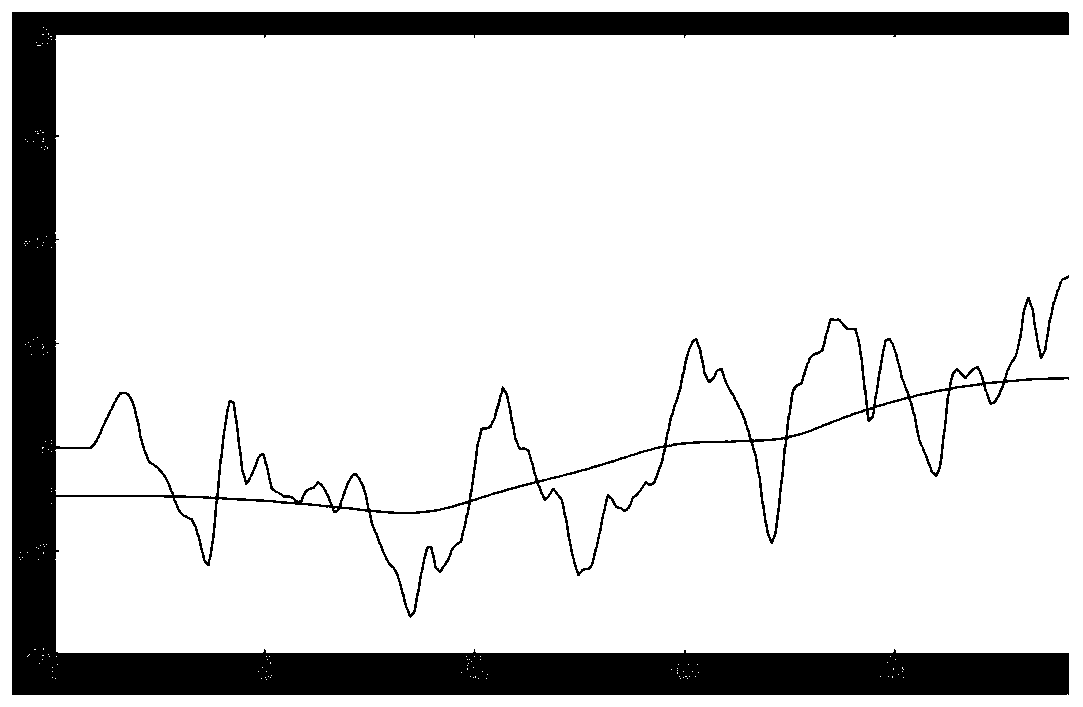

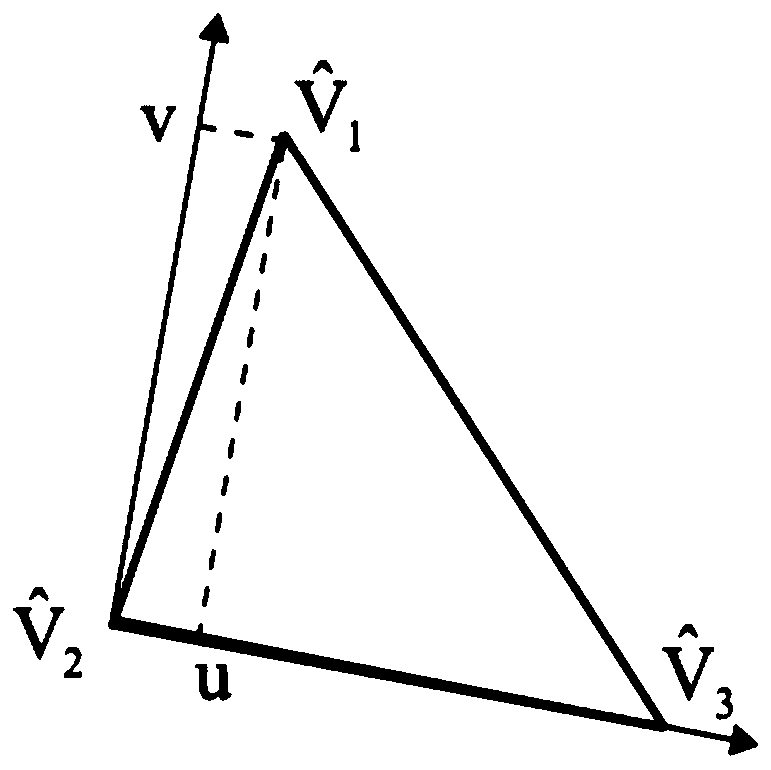
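The matching rule in [0027] can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: SIFT extraction is not reproduced (in practice one might use OpenCV's `cv2.SIFT_create` and `BFMatcher.knnMatch` with `k=2`), the descriptors below are toy 2-D vectors, and the function name `match_features` and the threshold value are hypothetical. The test keeps a match only when the second-nearest descriptor is farther than the nearest by more than the threshold, as the text describes; the exact criterion in the patent is given in its formula images.

```python
import math


def match_features(desc_prev, desc_curr, threshold):
    """Hypothetical helper: 2-nearest-neighbour matching with a distance-gap test.

    For each descriptor in desc_prev, find its two nearest descriptors in
    desc_curr (Euclidean distance). Keep the nearest one only if the
    second-best distance exceeds the best distance by more than `threshold`.
    Returns a list of (index_in_prev, index_in_curr) pairs.
    """
    if len(desc_curr) < 2:
        return []  # the two-candidate test needs at least two descriptors
    matches = []
    for i, d in enumerate(desc_prev):
        # Distances from this descriptor to every descriptor in the next frame,
        # sorted so the two nearest neighbours come first.
        dists = sorted((math.dist(d, e), j) for j, e in enumerate(desc_curr))
        (best, j_best), (second, _) = dists[0], dists[1]
        # A large gap between the two candidates means the match is unambiguous.
        if second - best > threshold:
            matches.append((i, j_best))
    return matches


# Toy descriptors (assumed values): the second point in desc_prev is equally
# close to two candidates, so its match is rejected as ambiguous.
desc_prev = [(0.0, 0.0), (5.0, 5.0)]
desc_curr = [(0.1, 0.0), (3.0, 0.0), (5.0, 4.0), (5.0, 6.0)]
print(match_features(desc_prev, desc_curr, 1.0))  # [(0, 0)]
```

A widely used alternative is Lowe's ratio test, which compares `best / second` against a ratio instead of a difference; the sketch follows the difference criterion stated in the text.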

[0028] The Lucas-Kanade optical flow algorithm is used to estimate the motion m = (u, v) of each pixel between adjacent video frames, where u is the displacement along the image x-axis and v is the displacement along the image y-axis.

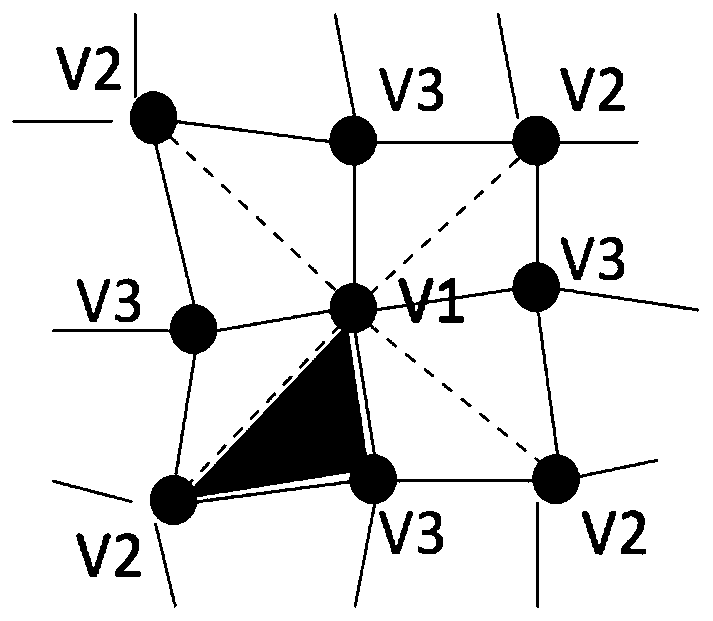
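The Lucas-Kanade step in [0028] can be sketched for a single window as below. This is a simplified, assumption-laden illustration rather than the patent's code: frames are plain lists of grayscale rows, gradients use central differences, there is no image pyramid or iterative refinement, and the names `lucas_kanade_window` and `make_frame` are hypothetical. Evaluating the window around every pixel gives the dense per-pixel field m = (u, v) the text refers to.

```python
def lucas_kanade_window(prev, curr, cx, cy, r=2):
    """Hypothetical helper: estimate m = (u, v) for the window centred at (cx, cy).

    prev, curr: grayscale frames as lists of rows (prev[y][x]).
    r: window half-size; the window is (2r+1) x (2r+1) pixels and must lie
    at least one pixel inside the image border.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - r, cy + r + 1):
        for x in range(cx - r, cx + r + 1):
            # Spatial gradients of the previous frame by central differences.
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0
            # Temporal derivative: intensity change between the two frames.
            it = curr[y][x] - prev[y][x]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    # Solve the 2x2 normal equations [sxx sxy; sxy syy] [u v]^T = -[sxt syt]^T.
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("window is textureless or suffers from the aperture problem")
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return u, v


def make_frame(size, cx, cy, du=0.0, dv=0.0):
    # Synthetic test image: a quadratic bowl centred at (cx, cy), translated by (du, dv).
    return [[(x - cx - du) ** 2 + (y - cy - dv) ** 2 for x in range(size)]
            for y in range(size)]


prev = make_frame(11, 5, 5)
curr = make_frame(11, 5, 5, du=0.3, dv=-0.2)
print(lucas_kanade_window(prev, curr, 5, 5))  # approximately (0.3, -0.2)
```

The determinant check matters in practice: in flat regions or along straight edges the 2x2 matrix is (nearly) singular, so the motion of that window cannot be determined from it alone.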

[0029] 2. Perform 3D reconstruction according t...
