Method and system for distributed deep learning parameter quantization communication optimization

The invention combines deep learning with distributed techniques and is applied in the field of deep learning. It addresses problems such as the limited training speed of distributed deep learning models, and achieves the effects of reducing the amount of communication data, increasing the training speed, and reducing the impact

Specific embodiments

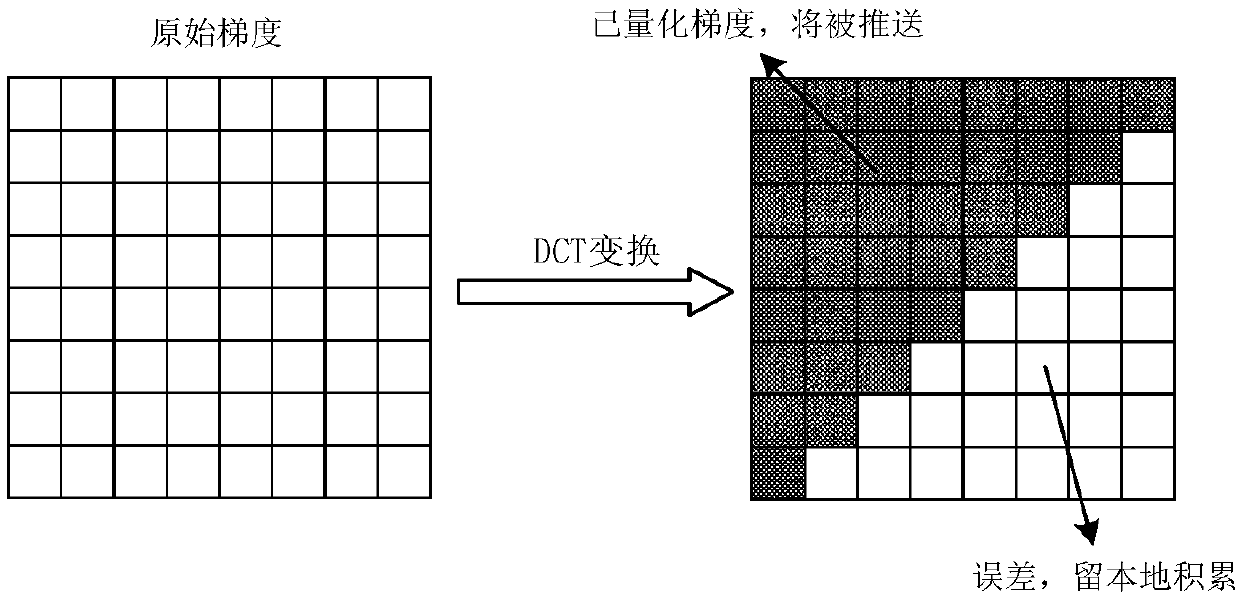

[0058] In this embodiment of the present invention, the gradient DCT transformation and quantized transmission method is shown in Figure 7. Its specific implementation is as follows:

[0059] S2.1: The gradient, Gradient, is known to contain Num floating-point numbers and is stored on the GPU once it has been calculated. Based on this count, denote the gradient as the set $G = \{\mathrm{Gradient}_i,\ 0 \le i < \mathrm{Num}\}$, where $\mathrm{Gradient}_i$ represents the i-th floating-point number in Gradient. Divide G into n*n square matrices $G_k = \{\mathrm{Gradient}_i,\ n \cdot n \cdot k \le i < n \cdot n \cdot (k+1)\}$ (0≤k<Num / (n*n)), where "/" means division with rounding and the subscript k of G is the index of the divided square matrix. The remaining Num % (n*n) floating-point numbers, where "%" means division taking the remainder, number fewer than n*n and cannot form a square matrix; they are recorded as G';
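The partitioning in S2.1 can be illustrated with a minimal Python sketch. It is not the patented implementation: the function name `partition_gradient` is hypothetical, the gradient is modelled as a plain list on the host rather than a GPU buffer, "/" is assumed to round down, and the row-major layout inside each block is an assumption, since the text does not state an ordering.

```python
from typing import List, Tuple

Block = List[List[float]]


def partition_gradient(gradient: List[float], n: int) -> Tuple[List[Block], List[float]]:
    """Split a flat gradient into n-by-n blocks G_k plus a remainder G'.

    Hypothetical helper for illustration only.
    """
    if n <= 0:
        raise ValueError("n must be positive")

    block_size = n * n
    num = len(gradient)                    # Num in the text
    num_blocks = num // block_size         # Num / (n*n), assumed to round down

    blocks: List[Block] = []
    for k in range(num_blocks):
        start = k * block_size
        flat = gradient[start:start + block_size]
        # Assumption: fill each square matrix row by row (row-major order).
        blocks.append([flat[r * n:(r + 1) * n] for r in range(n)])

    # The last Num % (n*n) values cannot form a full square matrix: this is G'.
    remainder = gradient[num_blocks * block_size:]
    return blocks, remainder


# Usage: 10 values with n = 2 give two 2x2 blocks and a remainder of 2 values.
blocks, rest = partition_gradient([float(i) for i in range(10)], 2)
print(len(blocks), rest)
```

With Num = 10 and n = 2, the sketch produces Num / (n*n) = 2 square matrices and leaves Num % (n*n) = 2 values in G', matching the counts described in S2.1.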

[0060] S2.2: according to the number of square matrices Num / (n*n...
