Neural network relation classification method fusing discrimination information, and implementation system thereof

A relation classification technique based on neural networks, applied in the field of natural language processing, that addresses the problem of relations with different entity directions being easily confused.

Examples

Embodiment 1

[0077] A neural network relation classification method fusing discrimination information, comprising the following steps:

[0078] (1) Data preprocessing: This application evaluates its results on public data sets, but these are raw data that do not meet the input requirements of the model and must be preprocessed. First, the entity words in the data set are marked in one-hot form; for example, entity words are represented as 1.0 and all other words as 0.0. The data are then labeled according to the text categories in the data set. There are 19 categories in total, and each category is represented by a 19-dimensional one-hot vector in which the position of the 1 is the index of the category. The 19-dimensional vector and the sentence are placed on the same line, separated by " / "; when reading the data, the samples and labels are ...
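The preprocessing in [0078] can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the function names, the whitespace tokenization, the example sentence, the label index, and the label-first order on each line are all hypothetical. Only the 1.0/0.0 entity marking, the 19-dimensional one-hot label, and the " / " separator come from the text.

```python
NUM_CLASSES = 19   # number of categories stated in [0078]
SEPARATOR = " / "  # separator between label vector and sentence, per [0078]

def entity_mask(tokens, entity_positions):
    """Mark entity words as 1.0 and all other words as 0.0."""
    return [1.0 if i in entity_positions else 0.0 for i in range(len(tokens))]

def one_hot_label(class_index, num_classes=NUM_CLASSES):
    """Return a one-hot vector whose 1 sits at the category index."""
    if not 0 <= class_index < num_classes:
        raise ValueError("class index out of range")
    return [1 if i == class_index else 0 for i in range(num_classes)]

def write_sample(class_index, sentence):
    """Put label vector and sentence on one line (label-first order is an assumption)."""
    label = " ".join(str(v) for v in one_hot_label(class_index))
    return label + SEPARATOR + sentence

def read_sample(line):
    """Split a stored line back into (sentence, label vector)."""
    label_text, sentence = line.split(SEPARATOR, 1)
    return sentence, [int(v) for v in label_text.split()]

# Hypothetical example: the entity words are "fire" (index 1) and "fuel" (index 6).
sentence = "The fire was caused by leaking fuel"
tokens = sentence.split()
mask = entity_mask(tokens, {1, 6})
line = write_sample(3, sentence)
restored_sentence, restored_label = read_sample(line)
```

Splitting only on the first separator keeps a sentence intact even if it happens to contain " / " itself.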

Embodiment 2

[0087] The neural network relation classification method fusing discrimination information according to Embodiment 1, differing in that:

[0088] In step (2), training the word vectors comprises:

[0089] A. Download the English Wikipedia data for the full day of November 6, 2011 as the initial training data, and clean it: remove meaningless special characters and formatting, and convert the data from HTML format to TXT format;

[0090] B. Feed the data processed in step A into Word2vec for training. During training, use the skip-gram model, set the window size to 3-8, the number of iterations to 2-15, and the word vector dimension to 200-400. After training, a word vector mapping table is obtained;

[0091] C. Look up the word vector for each word in the training set in the word vector mapping table obtained in step B. To speed up training, this patent correspo...
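Steps A and C can be illustrated with a short Python sketch using only the standard library. Everything here is an assumption made for illustration: the regular expressions defining which characters count as "meaningless", the zero-vector fallback for unknown words, the lowercasing, and the specific hyperparameter values (chosen inside the ranges of step B). The Word2vec training itself is not shown; the settings dictionary only records values that would be passed to a Word2vec implementation.

```python
import html
import re

# Values picked inside the ranges of step B; the specific choices are assumptions.
WORD2VEC_SETTINGS = {"model": "skip-gram", "window": 5, "iterations": 10, "dimension": 300}

TAG_RE = re.compile(r"<[^>]+>")                       # HTML tags
NON_TEXT_RE = re.compile(r"[^A-Za-z0-9\s.,;:!?'-]")   # assumed set of "special" characters

def clean_html(raw):
    """Step A: strip tags, decode HTML entities, and drop special characters."""
    text = TAG_RE.sub(" ", raw)
    text = html.unescape(text)
    text = NON_TEXT_RE.sub(" ", text)
    return " ".join(text.split())  # collapse runs of whitespace

def lookup_vectors(tokens, table, dimension):
    """Step C: map each word to its vector; unknown words get zeros (an assumption)."""
    zero = [0.0] * dimension
    return [table.get(token.lower(), zero) for token in tokens]

cleaned = clean_html("<p>Neural&nbsp;networks <b>classify</b> relations &#9733;</p>")
toy_table = {"neural": [0.1, 0.2], "networks": [0.3, 0.4]}  # toy 2-dimensional table
vectors = lookup_vectors(["Neural", "networks", "fuse"], toy_table, dimension=2)
```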

Embodiment 3

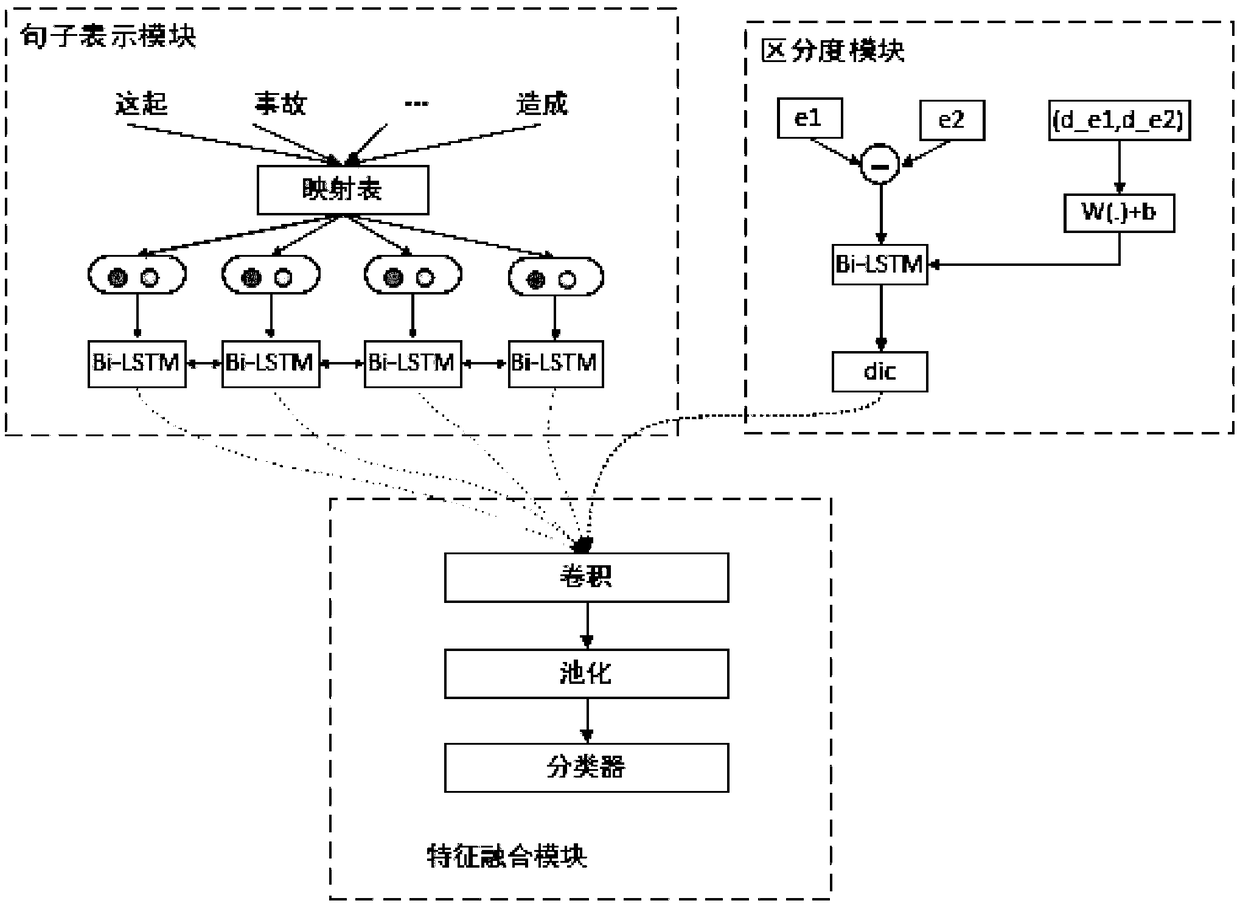

[0132] The implementation system of the above neural network relation classification method, as shown in Figure 1, includes a sentence representation module, a discrimination module, and a feature fusion module; the sentence representation module and the discrimination module are each connected to the feature fusion module;

[0133] The sentence representation module is used to: look up each word of a training-set sentence in the dictionary to find its word vector, converting the word into a vector form the computer can process; obtain the position vector; concatenate the position vector with the corresponding word vector; and feed the resulting new vector to the Bi-LSTM unit, whose encoding yields the semantic features of the sentence;
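The input construction in [0133] might look like the Python sketch below. The text does not say here how the position vector is computed, so this sketch assumes a common scheme: the signed distance of each word to each of the two entity words, clipped and encoded as a one-hot vector in place of a learned embedding. The function names, the clipping distance, and the toy word vectors are hypothetical, and the Bi-LSTM encoder itself is not shown.

```python
def relative_positions(num_tokens, entity_index):
    """Signed distance of every token to one entity word."""
    return [i - entity_index for i in range(num_tokens)]

def position_vector(distance, max_distance=3):
    """One-hot encoding of a clipped distance (stand-in for a learned position embedding)."""
    clipped = max(-max_distance, min(max_distance, distance))
    vec = [0.0] * (2 * max_distance + 1)
    vec[clipped + max_distance] = 1.0
    return vec

def build_inputs(tokens, table, e1_index, e2_index, max_distance=3):
    """Concatenate each word vector with its position vectors relative to both entities."""
    d1 = relative_positions(len(tokens), e1_index)
    d2 = relative_positions(len(tokens), e2_index)
    inputs = []
    for token, a, b in zip(tokens, d1, d2):
        word_vec = table[token]
        inputs.append(word_vec
                      + position_vector(a, max_distance)
                      + position_vector(b, max_distance))
    return inputs

# Toy 2-dimensional word vectors; the entities are "fire" (index 0) and "fuel" (index 3).
tokens = ["fire", "caused", "by", "fuel"]
table = {"fire": [0.1, 0.2], "caused": [0.0, 0.5], "by": [0.3, 0.3], "fuel": [0.9, 0.1]}
inputs = build_inputs(tokens, table, e1_index=0, e2_index=3)
```

Each row of `inputs` is one time step for the encoder: 2 word dimensions plus two 7-dimensional position vectors, 16 values in total.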

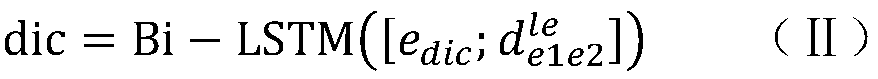

[0134] The discrimination module is used to: subtract the word vectors of the two entity words specified in the sentence, concatena...
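The subtraction named in [0134] can be sketched in Python as below. Only that one step is illustrated; the function name and the toy 2-dimensional vectors are hypothetical, and nothing is assumed about what the module does with the difference afterwards.

```python
def subtract_entities(e1_vec, e2_vec):
    """Element-wise difference between the word vectors of the two entity words."""
    if len(e1_vec) != len(e2_vec):
        raise ValueError("entity vectors must have the same dimension")
    return [a - b for a, b in zip(e1_vec, e2_vec)]

# Toy vectors for the two entity words.
e1 = [0.5, 0.25]
e2 = [0.25, 0.75]
forward = subtract_entities(e1, e2)   # entity 1 minus entity 2
backward = subtract_entities(e2, e1)  # entity 2 minus entity 1
```

Swapping the two entities negates the difference, so the resulting vector depends on the order in which the entities are given.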
