Extrinsic parameter and timing calibration method based on visual-inertial fusion SLAM on a mobile platform

Mobile platform and visual technology, applied to navigation, mapping, navigation calculation tools, etc.

Embodiment Construction

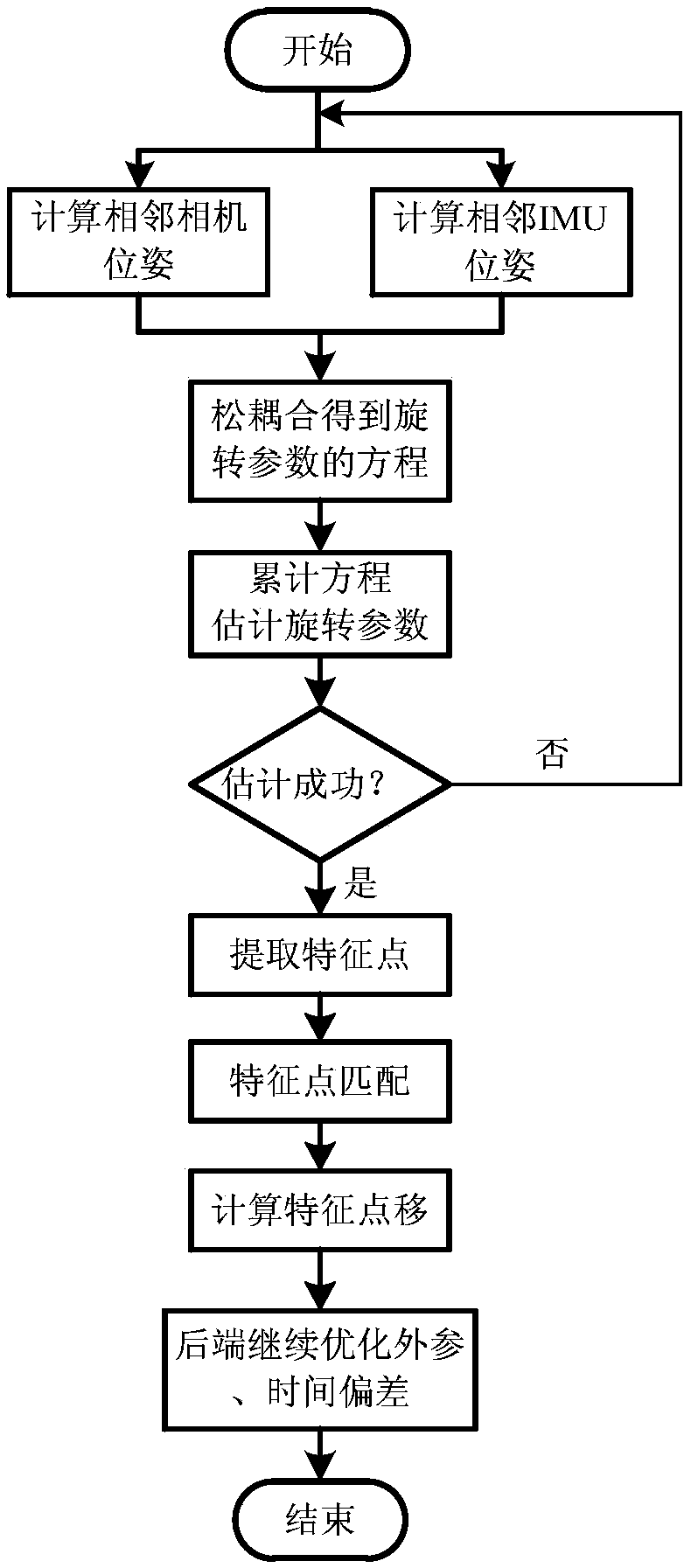

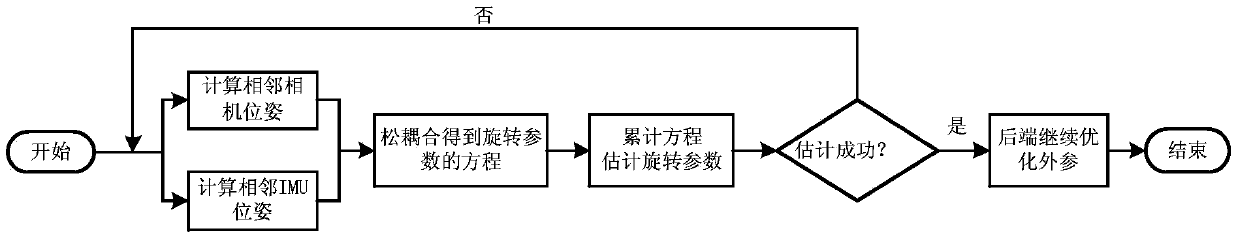

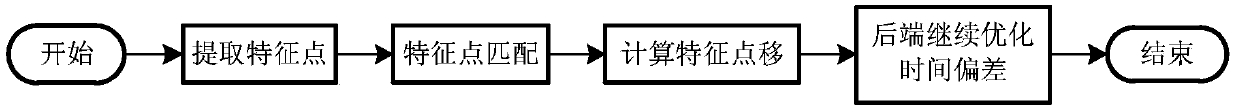

[0094] The technical solution of the present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

[0095] To address the inability of current monocular visual SLAM systems to estimate scale, the present invention adopts a visual-inertial SLAM system that integrates an IMU. For use on mobile devices, it proposes a method for automatically calibrating the extrinsic parameters between the camera and the IMU, which solves the problem of online extrinsic calibration. To address the asynchronous sensor clocks on mobile devices, it also proposes a method for sensor fusion under asynchronous conditions. Experimental results show that the proposed method can effectively solve these problems.
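To illustrate what fusing sensors with asynchronous clocks involves, the following is a minimal sketch and not the method claimed by the invention, whose details are not given in this excerpt. It assumes a constant time offset between the camera and IMU clocks, with the convention `t_imu = t_cam + time_offset`, and linearly interpolates IMU samples to the corrected camera timestamp. The function names, the sign convention, and the sample data are all hypothetical.

```python
from bisect import bisect_right


def interpolate_imu(imu_times, imu_values, t_query):
    """Linearly interpolate a 3-axis IMU reading at time t_query (seconds).

    imu_times must be sorted in ascending order; imu_values[i] is the
    (x, y, z) reading taken at imu_times[i].
    """
    if not imu_times[0] <= t_query <= imu_times[-1]:
        raise ValueError("query time outside the IMU sample range")
    # Index of the last sample whose timestamp is <= t_query.
    i = bisect_right(imu_times, t_query) - 1
    if i == len(imu_times) - 1:
        return tuple(imu_values[-1])
    t0, t1 = imu_times[i], imu_times[i + 1]
    alpha = (t_query - t0) / (t1 - t0)
    return tuple(a + alpha * (b - a)
                 for a, b in zip(imu_values[i], imu_values[i + 1]))


def imu_at_camera_time(imu_times, imu_values, t_cam, time_offset):
    """Return the IMU reading aligned with a camera frame.

    Assumed convention (hypothetical): t_imu = t_cam + time_offset, where
    time_offset is the calibrated offset between the two sensor clocks.
    """
    return interpolate_imu(imu_times, imu_values, t_cam + time_offset)


# Hypothetical data: gyroscope samples at 100 Hz and one camera frame.
imu_times = [0.00, 0.01, 0.02]
imu_values = [(0.0, 0.0, 0.0), (1.0, 2.0, 3.0), (2.0, 4.0, 6.0)]
aligned = imu_at_camera_time(imu_times, imu_values, t_cam=0.003, time_offset=0.002)
```

In a full system the offset itself would be estimated during calibration rather than supplied by hand; here it is treated as a known input so that only the alignment step is shown.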

[0096] A method for calibrating external parameters and timing based on visual and inertial navigation fusion SLAM on a mobile platform of the present in...
