A Video Stitching Method Based on Optical Flow

An optical-flow-based video stitching technique in the field of video stitching. It addresses problems such as large lighting differences and jumps between frames, both of which degrade the visual result. It also saves computation time, reduces the amount of computation, and yields an accurate optical flow field.

Embodiment

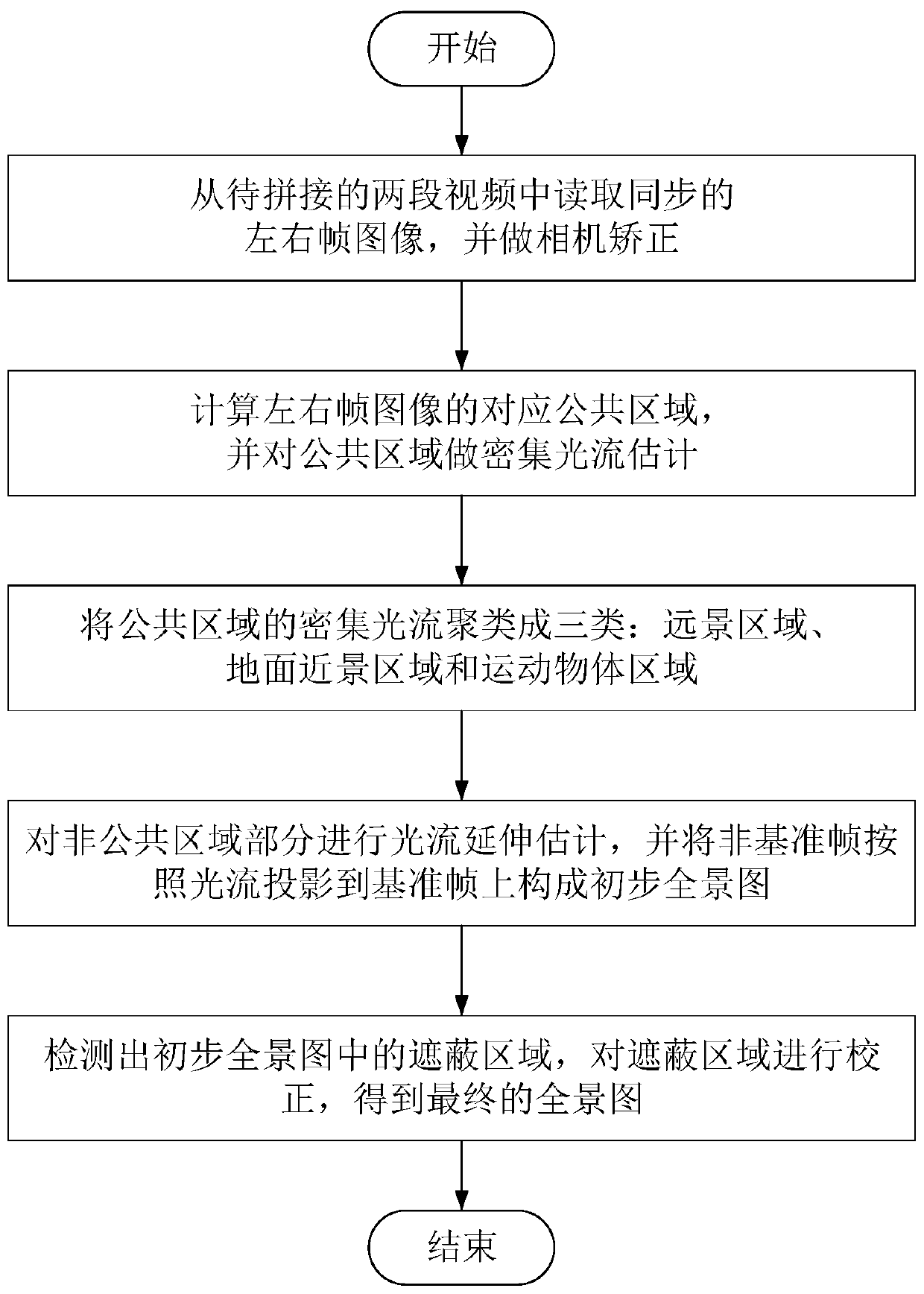

[0034] This embodiment provides an optical-flow-based video stitching method comprising the following steps:

[0035] Step 1: Read and preprocess the left and right frame images;

[0036] Step 2: Compute the common (overlapping) region of the left and right frame images, which forms the common region of the videos to be stitched, and perform dense optical flow estimation on that region;

[0037] Step 3: Cluster the dense optical flow in the common region into three categories;

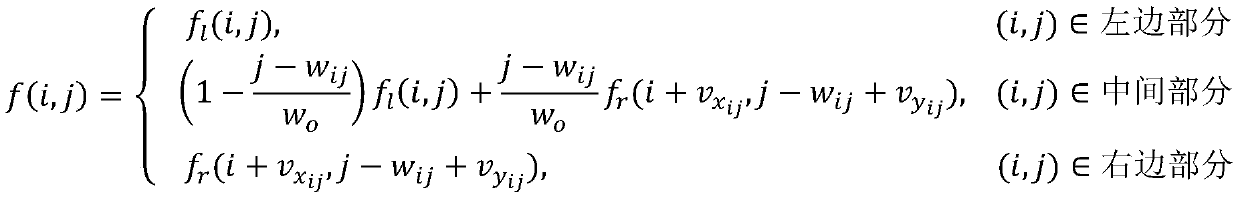

[0038] Step 4: Extend the optical flow estimate into the non-common regions, then project the non-reference frame onto the reference frame according to the optical flow to form a preliminary panorama;

[0039] Step 5: Detect and correct the occluded (masked) regions of the preliminary panorama to obtain the final panorama;

[0040] Step 6: Return to Step 1 and read the next pair of left and right frame images.

[0041] Repeat Steps 1 to 6 for each subsequent frame pair.
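Step 3 does not state how the three categories are formed. The following is a minimal sketch that assumes plain k-means (Lloyd's algorithm) with k = 3 over the (u, v) flow vectors of the common region. The function names `kmeans_flow` and `_d2`, the farthest-point initialisation, and the iteration cap are hypothetical choices for illustration and are not taken from the patent.

```python
"""Hypothetical sketch of Step 3: cluster dense optical-flow vectors into three groups.

Assumption: the patent excerpt does not name a clustering algorithm; plain
k-means (Lloyd's algorithm) with k = 3 is used here purely for illustration.
"""
from typing import List, Tuple

Vec = Tuple[float, float]  # one (u, v) flow vector from the common region


def _d2(a: Vec, b: Vec) -> float:
    """Squared Euclidean distance between two flow vectors."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def kmeans_flow(flow: List[Vec], k: int = 3, iters: int = 20) -> Tuple[List[int], List[Vec]]:
    """Return (labels, centers) for k-means over the given flow vectors."""
    if len(flow) < k:
        raise ValueError("need at least k flow vectors")
    # Deterministic farthest-point initialisation keeps results reproducible.
    centers: List[Vec] = [flow[0]]
    while len(centers) < k:
        centers.append(max(flow, key=lambda p: min(_d2(p, c) for c in centers)))

    def assign(cs: List[Vec]) -> List[int]:
        # Assignment step: each vector joins its nearest center.
        return [min(range(k), key=lambda j: _d2(p, cs[j])) for p in flow]

    for _ in range(iters):
        labels = assign(centers)
        # Update step: move each center to the mean of its members.
        new_centers: List[Vec] = []
        for j in range(k):
            members = [p for p, lab in zip(flow, labels) if lab == j]
            if members:
                n = len(members)
                new_centers.append((sum(m[0] for m in members) / n,
                                    sum(m[1] for m in members) / n))
            else:
                new_centers.append(centers[j])  # keep an empty cluster's center
        if new_centers == centers:
            break
        centers = new_centers
    return assign(centers), centers


if __name__ == "__main__":
    # Synthetic flow: near-static background, one object moving right, one moving up.
    flow = [(0.0, 0.1), (0.1, 0.0), (-0.1, 0.0),
            (5.0, 0.2), (5.1, -0.1), (4.9, 0.0),
            (0.2, -4.0), (-0.1, -4.1), (0.0, -3.9)]
    labels, centers = kmeans_flow(flow)
    print(labels)  # [0, 0, 0, 1, 1, 1, 2, 2, 2]
```

The excerpt does not say what the three categories represent, so the sketch only groups vectors by similarity. Any semantic meaning attached to each cluster would have to come from the full patent text.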

[0042] Further, the video to be stitched confo...
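Steps 4 and 5 can be illustrated with a small sketch. The excerpt does not specify how the flow is extended into the non-common regions, how the projection is performed, or how masked regions are detected, so all of the following are assumptions. Missing flow is copied from the nearest valid pixel in the same row. Projection is backward warping with bilinear sampling. Likely occlusions are flagged with a forward-backward consistency check. The helpers `extend_flow`, `bilinear`, `backward_warp`, and `occlusion_mask`, and the tolerance `tol`, are hypothetical names and parameters.

```python
"""Hypothetical sketch of Steps 4-5: extend flow, warp, and flag likely occlusions.

Assumptions (not from the patent excerpt): frames are equal-size grayscale
2-D lists; flow is a 2-D list of (u, v) pixel offsets, with None where no
estimate exists; missing flow is copied from the nearest valid pixel in the
same row; projection is backward warping with bilinear sampling; occlusion
is detected with a forward-backward consistency check.
"""
from typing import List, Optional, Tuple

Flow = List[List[Optional[Tuple[float, float]]]]
Image = List[List[float]]


def extend_flow(flow: Flow) -> Flow:
    """Fill each None entry with the nearest valid vector in its row."""
    out: Flow = []
    for row in flow:
        valid = [x for x, f in enumerate(row) if f is not None]
        if not valid:
            raise ValueError("a row has no flow to extend from")
        # Ties go to the left neighbour because min() keeps the first minimum.
        out.append([f if f is not None else row[min(valid, key=lambda v: abs(v - x))]
                    for x, f in enumerate(row)])
    return out


def bilinear(img: Image, x: float, y: float) -> Optional[float]:
    """Sample img at a sub-pixel position; None if outside the image."""
    h, w = len(img), len(img[0])
    if not (0 <= x <= w - 1 and 0 <= y <= h - 1):
        return None
    x0, y0 = int(x), int(y)  # floor, since x and y are non-negative here
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
    bottom = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
    return (1 - ay) * top + ay * bottom


def backward_warp(src: Image, flow: Flow) -> List[List[Optional[float]]]:
    """Project src onto the reference grid: out[y][x] = src(x + u, y + v)."""
    return [[bilinear(src, x + f[0], y + f[1]) for x, f in enumerate(row)]
            for y, row in enumerate(flow)]


def occlusion_mask(fwd: Flow, bwd: Flow, tol: float = 0.5) -> List[List[bool]]:
    """True where forward and backward flow disagree by more than tol pixels."""
    h, w = len(fwd), len(fwd[0])
    mask: List[List[bool]] = []
    for y in range(h):
        row: List[bool] = []
        for x in range(w):
            u, v = fwd[y][x]
            qx, qy = round(x + u), round(y + v)  # nearest-pixel lookup for brevity
            if not (0 <= qx < w and 0 <= qy < h):
                row.append(True)  # the match falls outside the other frame
                continue
            bu, bv = bwd[qy][qx]
            row.append((u + bu) ** 2 + (v + bv) ** 2 > tol ** 2)
        mask.append(row)
    return mask


if __name__ == "__main__":
    src = [[0.0, 10.0, 20.0, 30.0] for _ in range(3)]
    # Flow known only in the two middle columns (standing in for the common region).
    fwd = extend_flow([[None, (1.0, 0.0), (1.0, 0.0), None] for _ in range(3)])
    bwd = [[(-1.0, 0.0)] * 4 for _ in range(3)]
    print(backward_warp(src, fwd)[0])  # [10.0, 20.0, 30.0, None]
    print(occlusion_mask(fwd, bwd)[0])  # [False, False, False, True]
```

In this sketch, pixels flagged `True` by `occlusion_mask`, or left as `None` after warping, are the candidates that Step 5 would then correct. The correction itself is not shown because the excerpt does not describe it.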
