Memory allocation method based on fine granularity

A fine-grained memory allocation technology, applied in the fields of program control design, instrumentation, electrical digital data processing, etc., that can address problems such as memory expansion, increased memory allocation overhead, and a reduced memory deduplication rate.

Examples

Embodiment 1

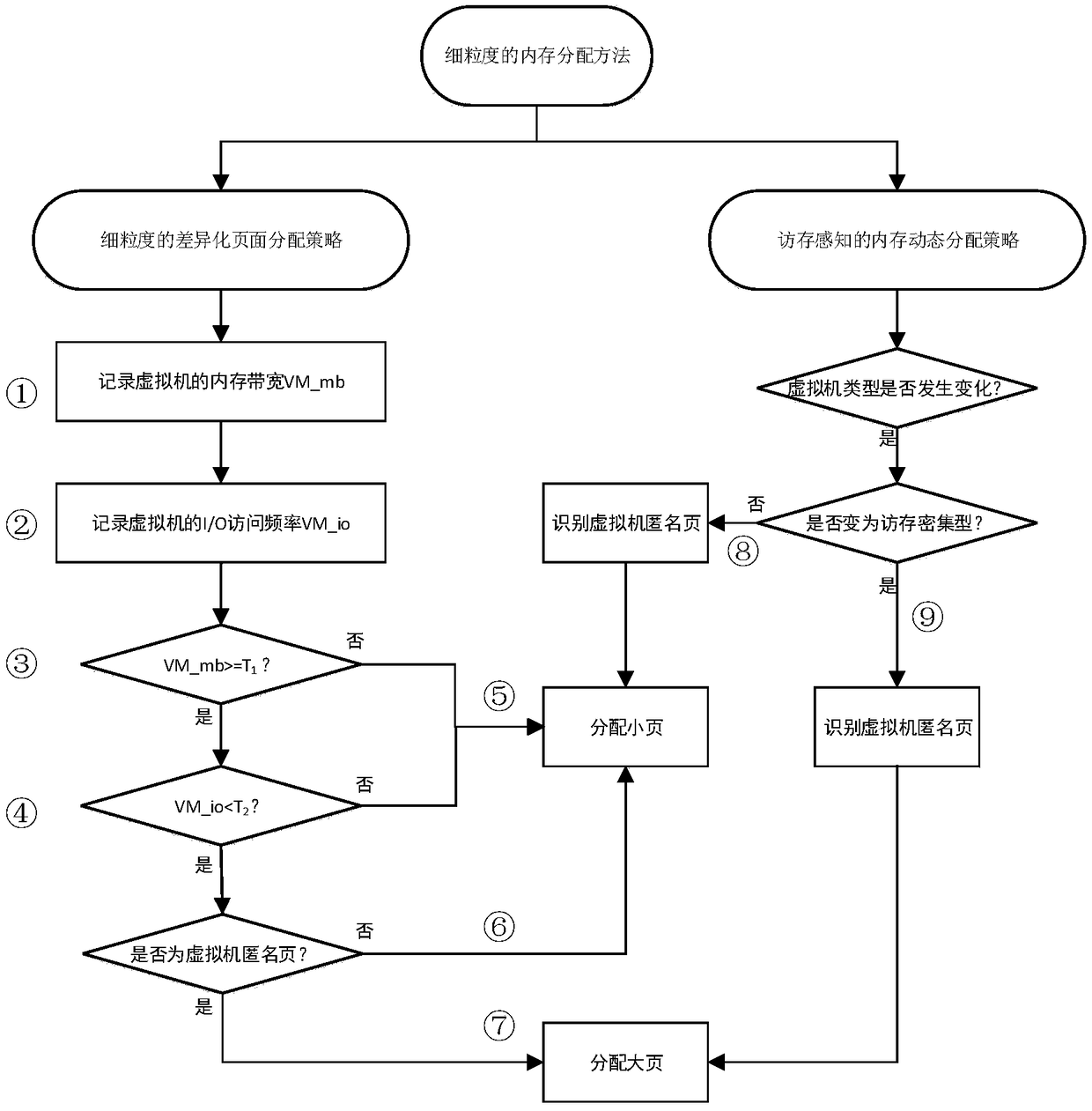

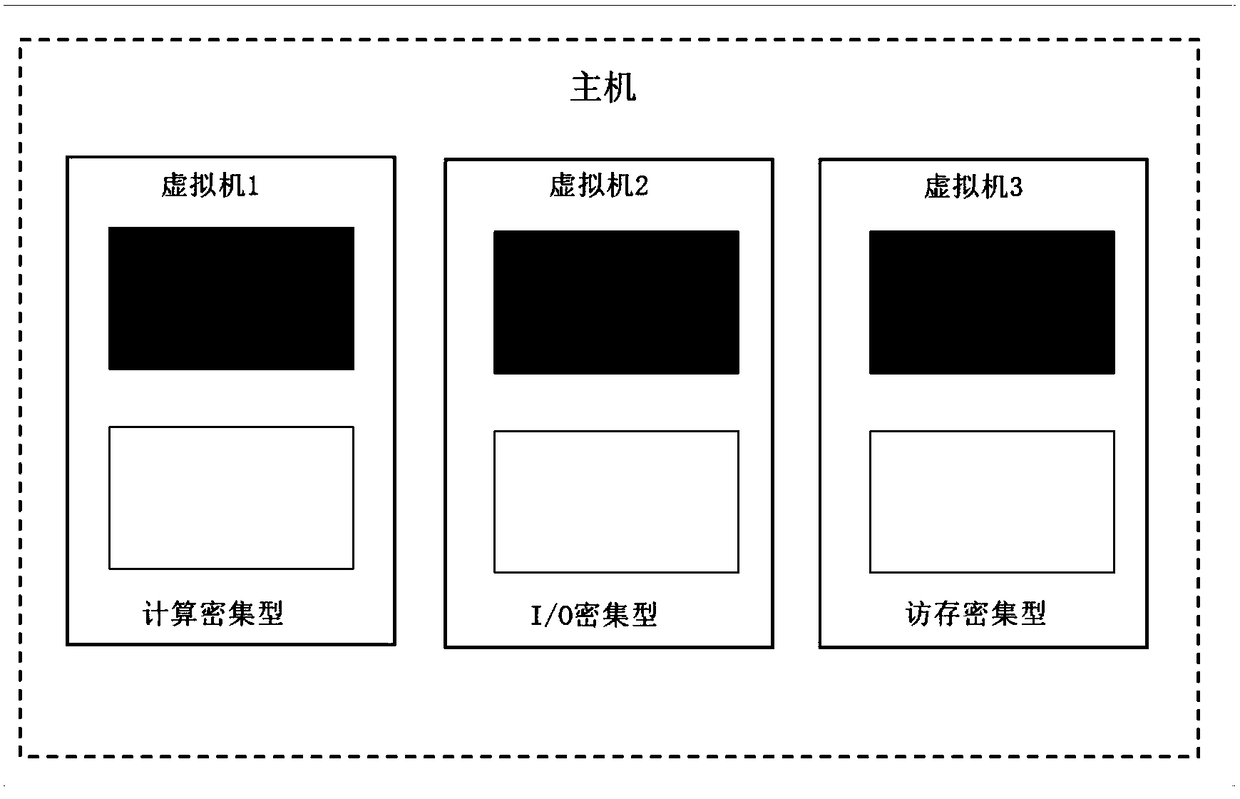

[0022] Figure 1 is a schematic diagram of the operation flow of the fine-grained memory allocation method of the present invention. Figure 2 is a schematic diagram of the memory usage of the virtual machine under the default configuration of the system.

[0023] This embodiment is a fine-grained memory allocation method that specifically includes the following steps:

[0024] Step 1: detecting the virtual machine type

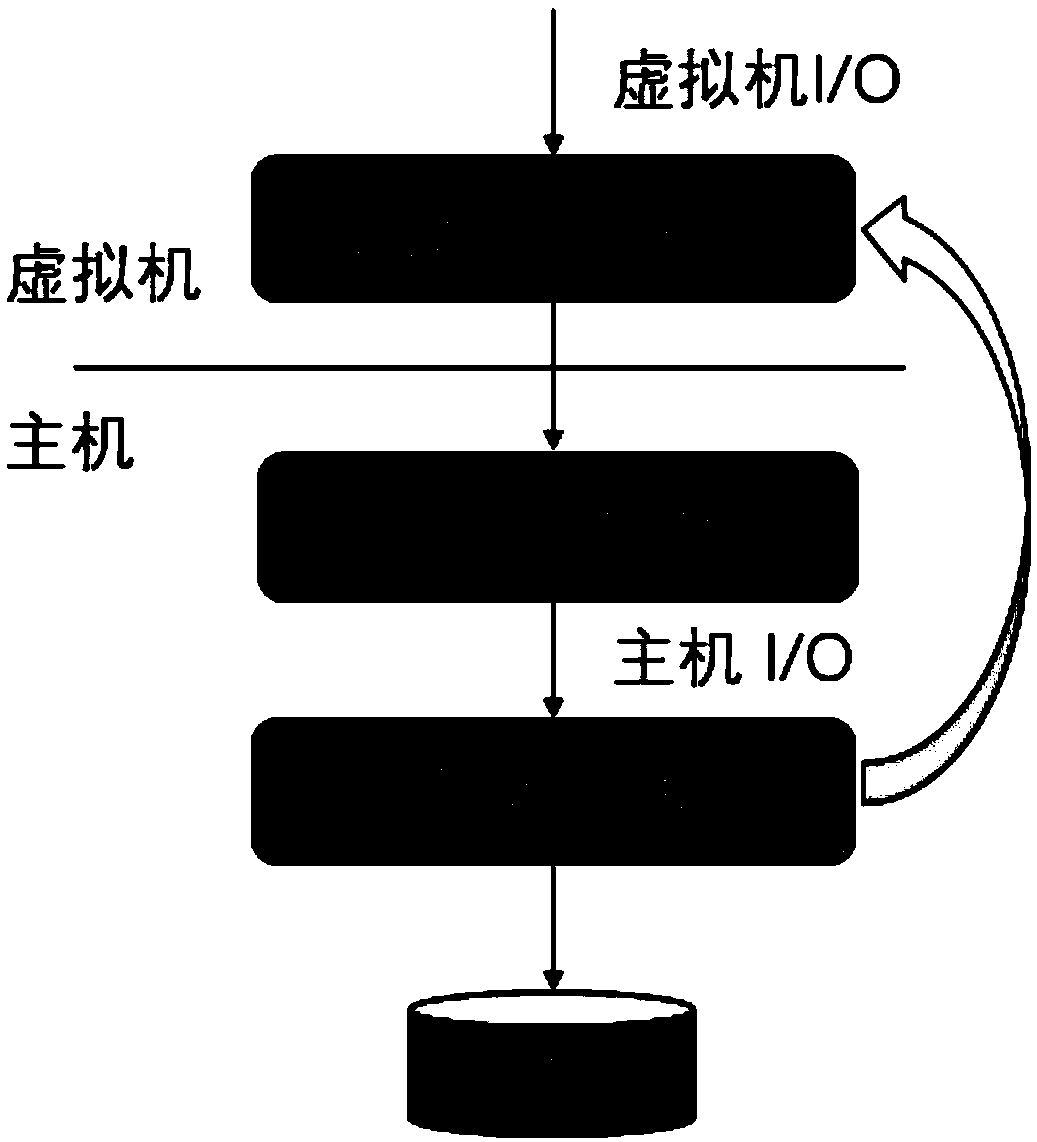

[0025] For all running virtual machines, the memory bandwidth of each virtual machine is obtained through the hardware performance counters (see process operation box ① in Figure 1), and the I/O access frequency of each virtual machine is obtained by intercepting the virtual machine's input/output (I/O) access path (see process operation box ② in Figure 1). Figure 3 gives a schematic diagram of the I/O access path of the virtual machine: when the virtual machine initiates I/O requests, these I/O requests wi...
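The two measurements described in this step (memory bandwidth and I/O access frequency) could feed a simple type detector. The following is only a minimal sketch under stated assumptions: the available text does not give the decision rule, so the thresholds, the category labels, and the names `VmSample` and `classify_vm` are all hypothetical, and the sample values stand in for readings that would come from hardware performance counters and the intercepted I/O path.

```python
from dataclasses import dataclass

# Hypothetical thresholds: the source text gives no concrete values.
MEM_BW_THRESHOLD_MBPS = 1000.0
IO_FREQ_THRESHOLD_OPS = 500.0


@dataclass
class VmSample:
    """One measurement window for a running virtual machine (illustrative)."""
    name: str
    mem_bandwidth_mbps: float  # would come from hardware performance counters
    io_ops_per_sec: float      # would come from the intercepted I/O access path


def classify_vm(sample: VmSample,
                mem_thr: float = MEM_BW_THRESHOLD_MBPS,
                io_thr: float = IO_FREQ_THRESHOLD_OPS) -> str:
    """Assign an assumed type label from the two measured quantities."""
    mem_heavy = sample.mem_bandwidth_mbps >= mem_thr
    io_heavy = sample.io_ops_per_sec >= io_thr
    if mem_heavy and io_heavy:
        return "mixed"
    if mem_heavy:
        return "memory-intensive"
    if io_heavy:
        return "io-intensive"
    return "light"


if __name__ == "__main__":
    # Made-up sample values, used only to exercise the function.
    samples = [
        VmSample("vm-a", mem_bandwidth_mbps=2400.0, io_ops_per_sec=40.0),
        VmSample("vm-b", mem_bandwidth_mbps=120.0, io_ops_per_sec=900.0),
    ]
    for s in samples:
        print(s.name, classify_vm(s))
```

Keeping the thresholds as parameters lets the assumed cut-offs be tuned per host without changing the classification logic.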
