Multi-target tracking method based on LSTM network and deep reinforcement learning

A multi-target tracking method based on an LSTM network and deep reinforcement learning, applied in the field of multi-target tracking. It addresses the problems of incomplete models and inaccurate tracking results, with the effect of overcoming model incompleteness and improving multi-target tracking accuracy.

Embodiment Construction

[0025] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

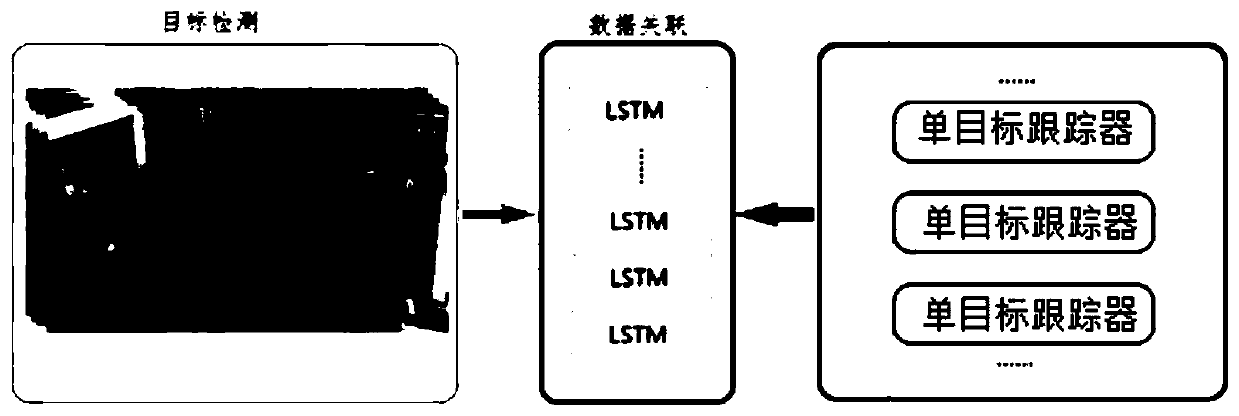

[0026] As shown in Figure 1, the multi-target tracking method based on an LSTM network and deep reinforcement learning includes the following steps:

[0027] (1) Use the YOLO V2 target detector to detect targets in each frame of the video to be tested and output the detection results. Denote the detection results for the image at time t as a set; the j-th element of this set is the j-th detection result for the image at time t, and N is the total number of detections;
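The per-frame detection set described in step (1) can be sketched as a mapping from time t to a list of detections. This is a minimal illustration only: the `Detection` fields (box layout plus score), the 1-based time index, and the `fake_detector` stand-in are assumptions made for the example and are not taken from the patent text; a real pipeline would call an actual YOLO V2 implementation where `fake_detector` appears.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass(frozen=True)
class Detection:
    """One detection: top-left corner, size, and confidence (assumed layout)."""
    x: float
    y: float
    w: float
    h: float
    score: float


def detect_video(
    frames: Iterable[object],
    detector: Callable[[object], Iterable[Detection]],
) -> Dict[int, List[Detection]]:
    """Run the detector on every frame and collect one detection set per time t."""
    detections_by_time: Dict[int, List[Detection]] = {}
    # Assumption: time indices start at 1; the source does not say.
    for t, frame in enumerate(frames, start=1):
        detections_by_time[t] = list(detector(frame))
    return detections_by_time


def fake_detector(frame: object) -> List[Detection]:
    """Hypothetical stand-in for YOLO V2: each 'frame' already lists its boxes."""
    return [Detection(*box) for box in frame]


# Two toy frames; each tuple is (x, y, w, h, score).
frames = [
    [(10, 20, 50, 80, 0.9), (200, 40, 45, 90, 0.8)],
    [(12, 21, 50, 80, 0.95)],
]
D = detect_video(frames, fake_detector)
N_t1 = len(D[1])  # number of detections in the frame at t = 1
```

Here `D[t][j - 1]` plays the role of the j-th detection at time t, and `len(D[t])` gives the number of detections in that frame.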

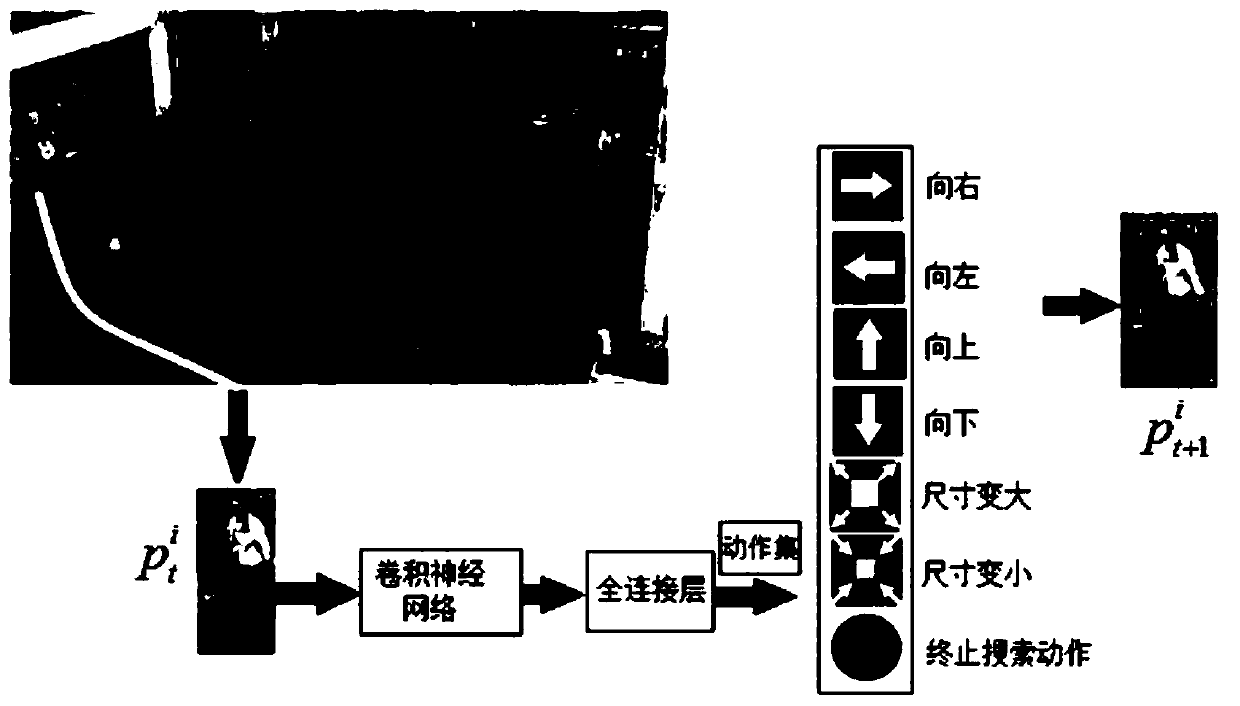

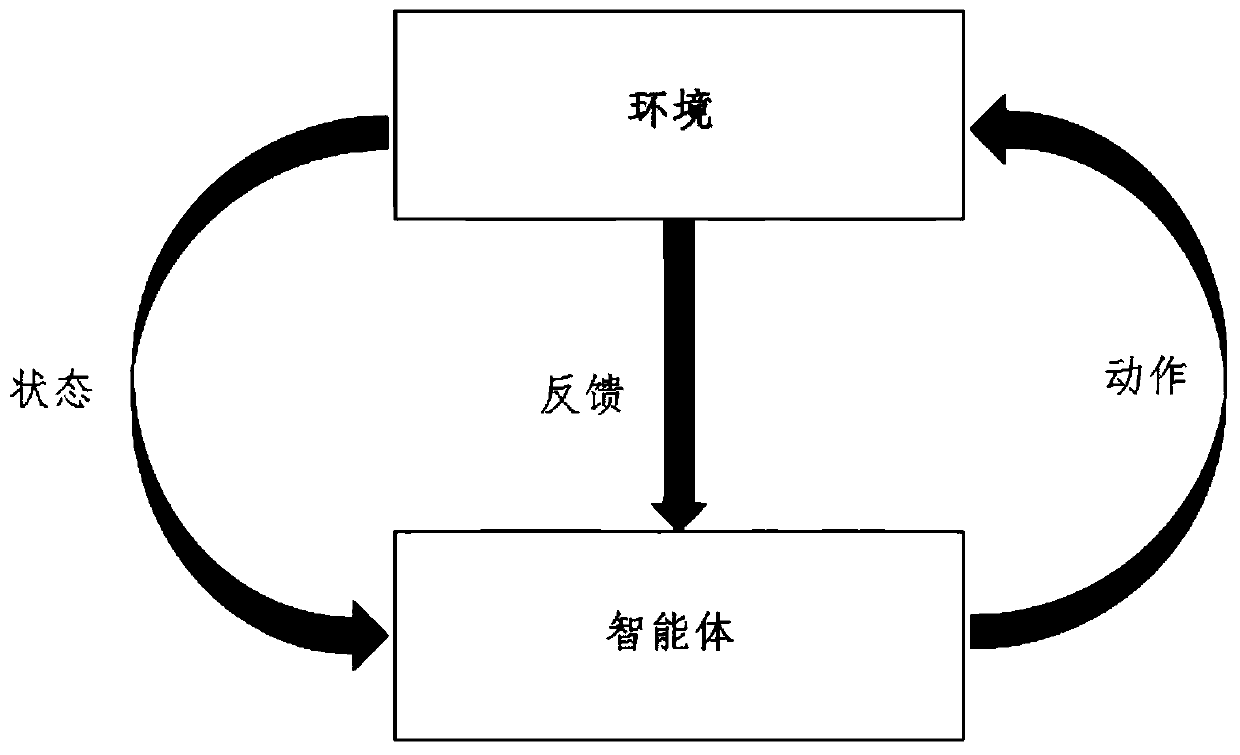

[0028] (2) As shown in Figure 2, multiple single-target trackers based on deep reinforcement learning are constructed. Each single-target tracker includes a convolutional neural network (CNN) and a fully connected (FC) layer. The convolutional neural network is built on the VGG-16 network; VGG-16 is a state-of-the-art network and has wide applica...
