Human skeleton joint point behavior motion expression method based on energy function

A technology based on the human skeleton and energy functions, applied in fields such as instruments, character and pattern recognition, and computer components. It addresses the problems that existing representation methods misjudge some actions as a static state and are neither intuitive nor easy to explain, thereby improving accuracy and reliability.


Embodiment Construction

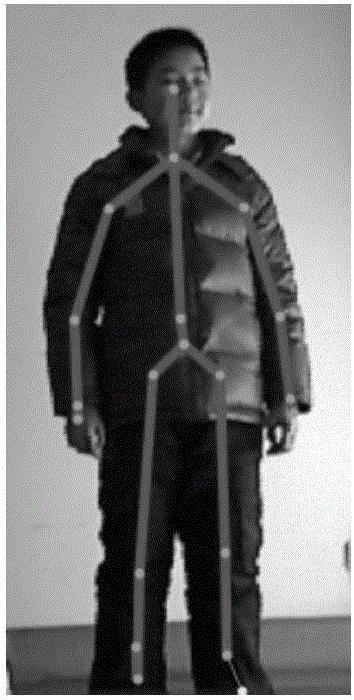

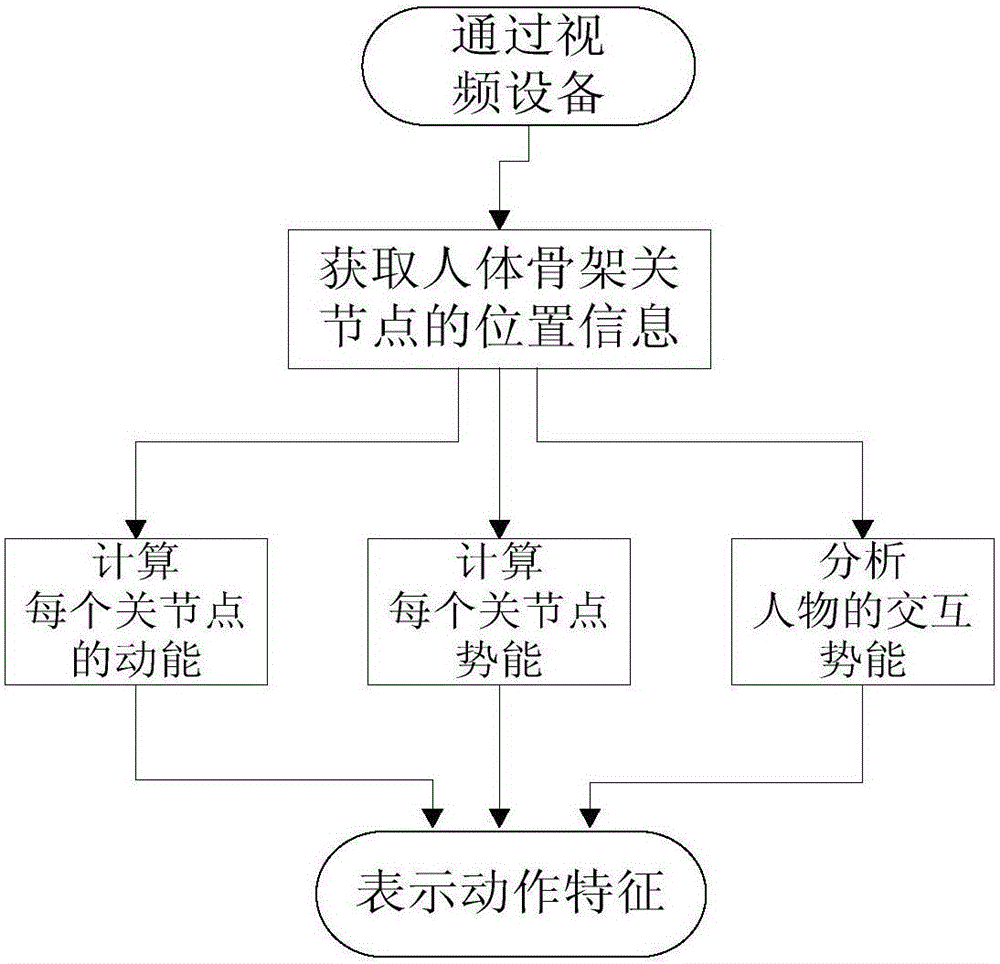

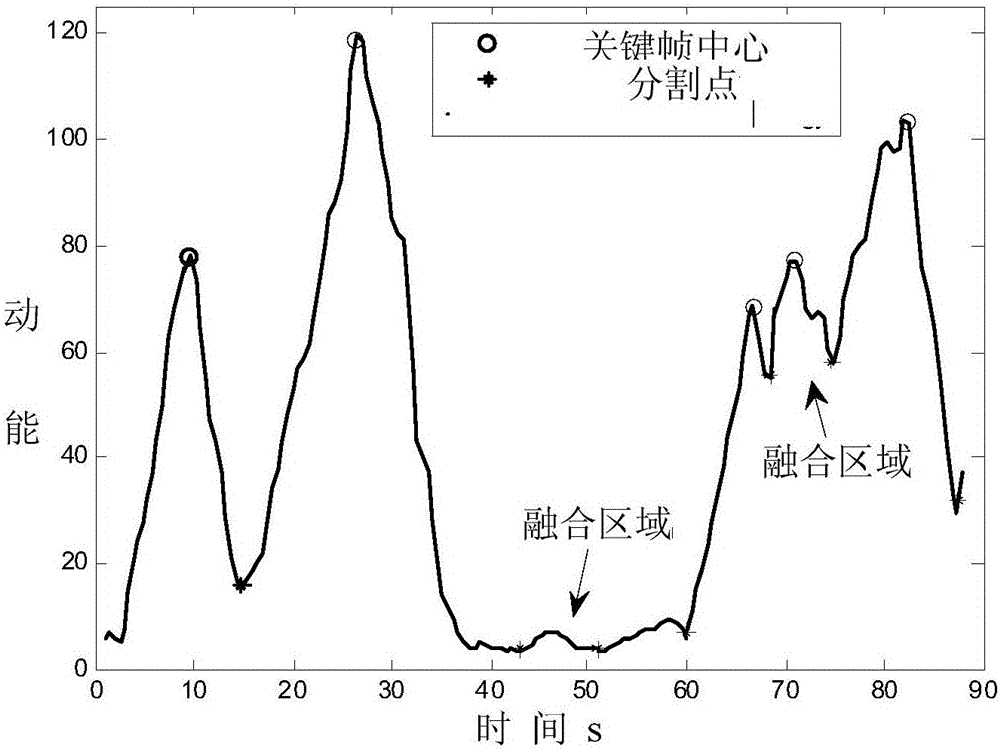

[0025] The method first obtains the positions of the human skeleton joint points with video equipment. It then computes the kinetic energy and potential energy of each joint point, together with the person's interaction potential energy, to quantitatively represent the person's action characteristics. Finally, this representation is applied to sub-action segmentation of long videos to obtain sub-action video sequences, each carrying a complete action meaning.
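The energy computation and segmentation described in [0025] can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the patented procedure: every joint has the same unit mass, velocities are finite differences of joint positions, potential energy is gravitational, and sub-action boundaries are placed by a hypothetical rule (cut in the middle of each interior low-activity run). The helper names `joint_energies`, `segment_boundaries`, and `split_segments` are hypothetical, and the interaction potential energy term is omitted because its form is not given here.

```python
import numpy as np


def joint_energies(positions, fps=30.0, mass=1.0, g=9.81, up_axis=1):
    """Return per-frame, per-joint kinetic and potential energy, each (T, J).

    positions: array of shape (T, J, 3), joint coordinates in metres, T >= 2.
    Assumptions (illustrative, not from the patent): equal `mass` per joint,
    finite-difference velocities, and gravitational potential energy m*g*h,
    with h measured along `up_axis` from the lowest height in the clip.
    """
    positions = np.asarray(positions, dtype=float)
    dt = 1.0 / fps
    # Central differences for interior frames, one-sided at the first/last frame.
    velocity = np.gradient(positions, dt, axis=0)            # (T, J, 3)
    kinetic = 0.5 * mass * np.sum(velocity ** 2, axis=-1)    # (T, J)
    heights = positions[..., up_axis]
    potential = mass * g * (heights - heights.min())         # (T, J)
    return kinetic, potential


def segment_boundaries(kinetic, potential, rel_threshold=0.05):
    """Hypothetical rule: cut at the middle of each interior low-activity run.

    Per-frame activity = total kinetic energy + |change in total potential
    energy|, both in joules. Frames below rel_threshold * max(activity) are
    treated as low activity.
    """
    total_k = kinetic.sum(axis=1)
    total_p = potential.sum(axis=1)
    activity = total_k + np.abs(np.diff(total_p, prepend=total_p[0]))
    low = activity < rel_threshold * activity.max()
    boundaries, t, n = [], 0, len(activity)
    while t < n:
        if not low[t]:
            t += 1
            continue
        start = t
        while t < n and low[t]:
            t += 1
        # Ignore low-activity runs that touch the clip's start or end.
        if start > 0 and t < n:
            boundaries.append((start + t - 1) // 2)
    return boundaries


def split_segments(num_frames, boundaries):
    """Turn boundary frames into half-open (start, end) frame ranges."""
    edges = [0] + list(boundaries) + [num_frames]
    return list(zip(edges[:-1], edges[1:]))


if __name__ == "__main__":
    # Synthetic clip: one joint moves along x, pauses (frames 20-40), moves again.
    t = np.arange(60)
    x = np.clip(t / 20, 0, 1) + np.clip((t - 40) / 20, 0, 1)
    positions = np.zeros((60, 1, 3))
    positions[:, 0, 0] = x
    k, p = joint_energies(positions)
    b = segment_boundaries(k, p)
    print(b)                      # [30]
    print(split_segments(60, b))  # [(0, 30), (30, 60)]
```

Combining kinetic energy with the change in potential energy is one simple way to keep slow vertical movements from being read as static; the actual weighting and boundary criterion used by the method may differ.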

[0026] In this embodiment, the method is first tested on the simple actions of the Microsoft Research Cambridge-12 (MSRC-12) action dataset, and then on the complex actions of Cornell University's Cornell Activity Dataset-120 (CAD-120), verifying that the segmentation method is effective for complex action recognition.

[0027] This embodiment includes the following steps:

[0028] The first ...
