A Distributed Cache Implementation Method

A distributed cache implementation method, applied in the computer field. It addresses the problem that network communication during cache access adds time and lowers read and write efficiency. The method eliminates that time overhead and improves both read/write efficiency and development efficiency.

Embodiment Construction

[0023] In order to make the purpose, technical means and advantages of the present application clearer, the present application will be further described in detail below in conjunction with the accompanying drawings.

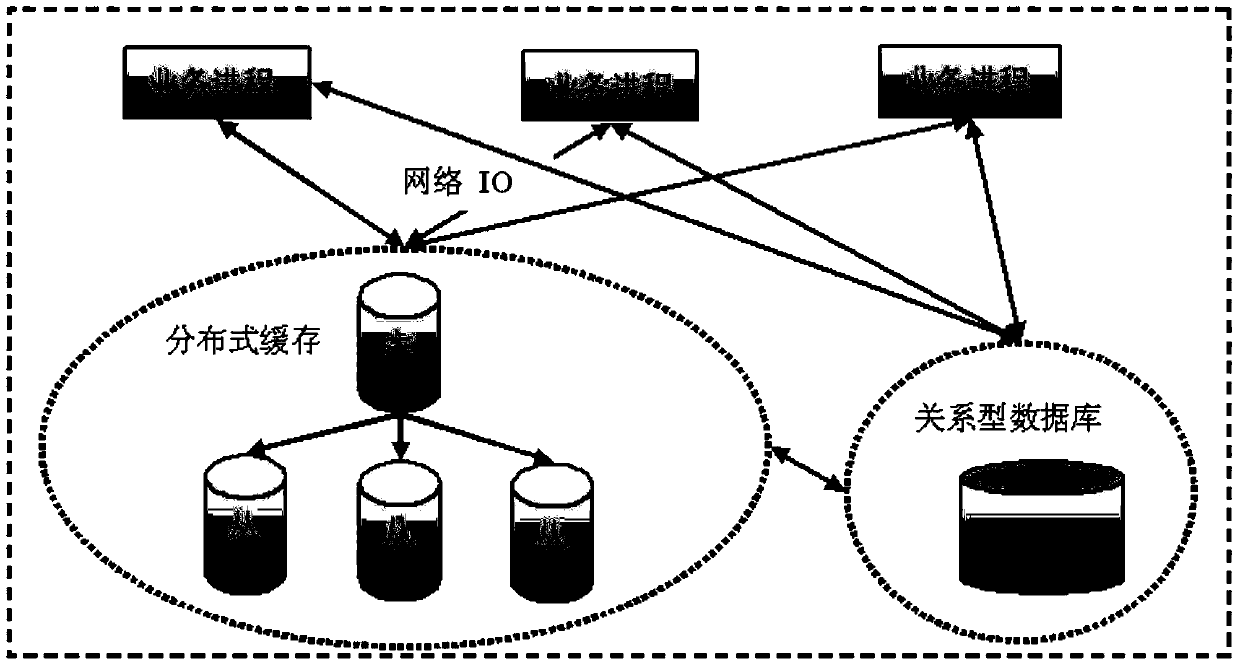

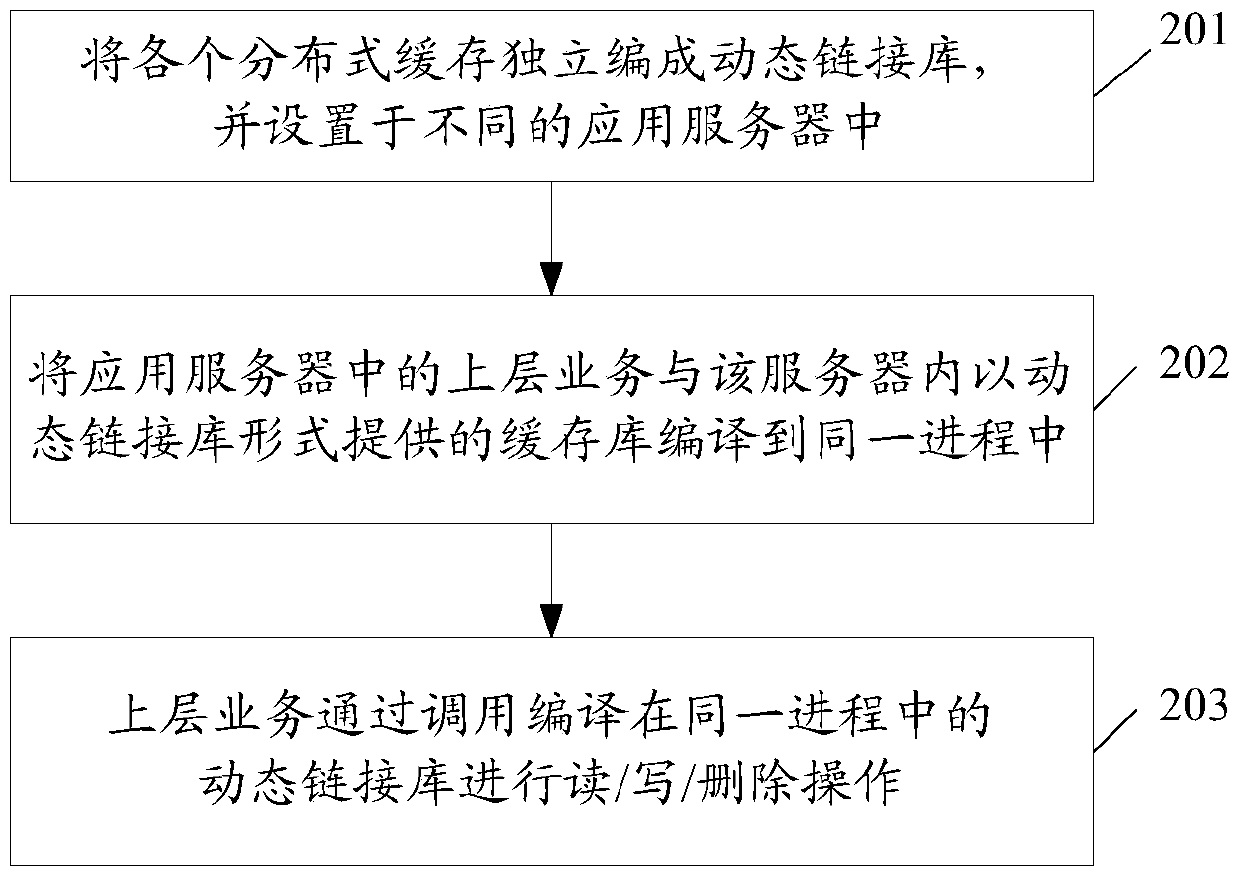

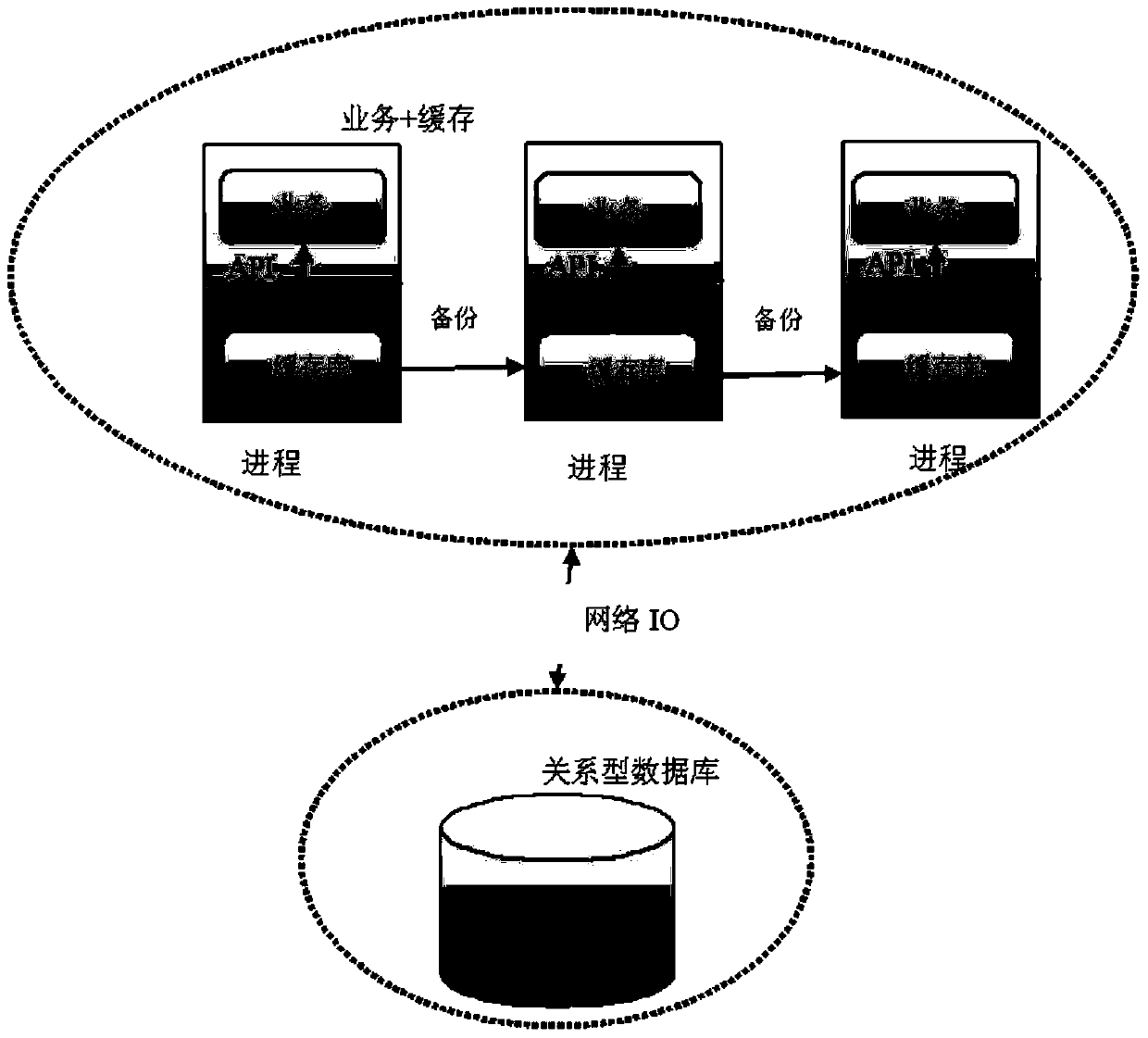

[0024] To reduce the time spent on network communication when reading and writing cached data, to make those reads and writes faster, and to spare developers from checking whether the cache was hit, this solution implements the distributed cache module as a dynamic link library. The business logic and the distributed cache therefore run in the same process. The business code performs cache reads and writes simply by reading and writing local memory, and the cache module itself determines whether the requested data is present in the cache. This improves both read/write efficiency and development efficiency.
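The idea in [0024] can be illustrated with a minimal sketch. It is written in Python rather than as an actual dynamic link library, and it only shows the in-process read and write path. The class name `InProcessCache`, the `load_from_source` callback, and the hit/miss counters are all hypothetical names introduced for illustration; the excerpt does not specify the interface, and synchronization between nodes is not shown.

```python
import threading
from typing import Callable, Dict, Generic, Hashable, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class InProcessCache(Generic[K, V]):
    """Hypothetical cache living in the caller's own process.

    All names here are illustrative assumptions, not taken from the patent.
    """

    def __init__(self, load_from_source: Callable[[K], V]) -> None:
        self._store: Dict[K, V] = {}          # local memory backing the cache
        self._load_from_source = load_from_source  # assumed fallback on a miss
        self._lock = threading.Lock()
        self.hits = 0
        self.misses = 0

    def read(self, key: K) -> V:
        # The hit/miss decision is made here, inside the cache module,
        # so the calling business code never has to check it.
        with self._lock:
            if key in self._store:
                self.hits += 1
                return self._store[key]
            self.misses += 1
            value = self._load_from_source(key)
            self._store[key] = value
            return value

    def write(self, key: K, value: V) -> None:
        # A write is a plain local-memory update; no network round trip.
        with self._lock:
            self._store[key] = value
```

Under these assumptions, business code would call `cache.read(key)` and `cache.write(key, value)` as ordinary in-process function calls. The fallback loader stands in for whatever the cache consults when the data is not held locally.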

[0025] In order to reduce the network communication time consumption in the stage ...
