A Deep Neural Network and Acoustic Target Voiceprint Feature Extraction Method

A deep neural network and voiceprint feature technology, applied in the field of target recognition. It addresses problems such as results that are difficult to achieve and convergence to poor local optima, and achieves the effect of reducing the impact.


AI Technical Summary

Problems solved by technology

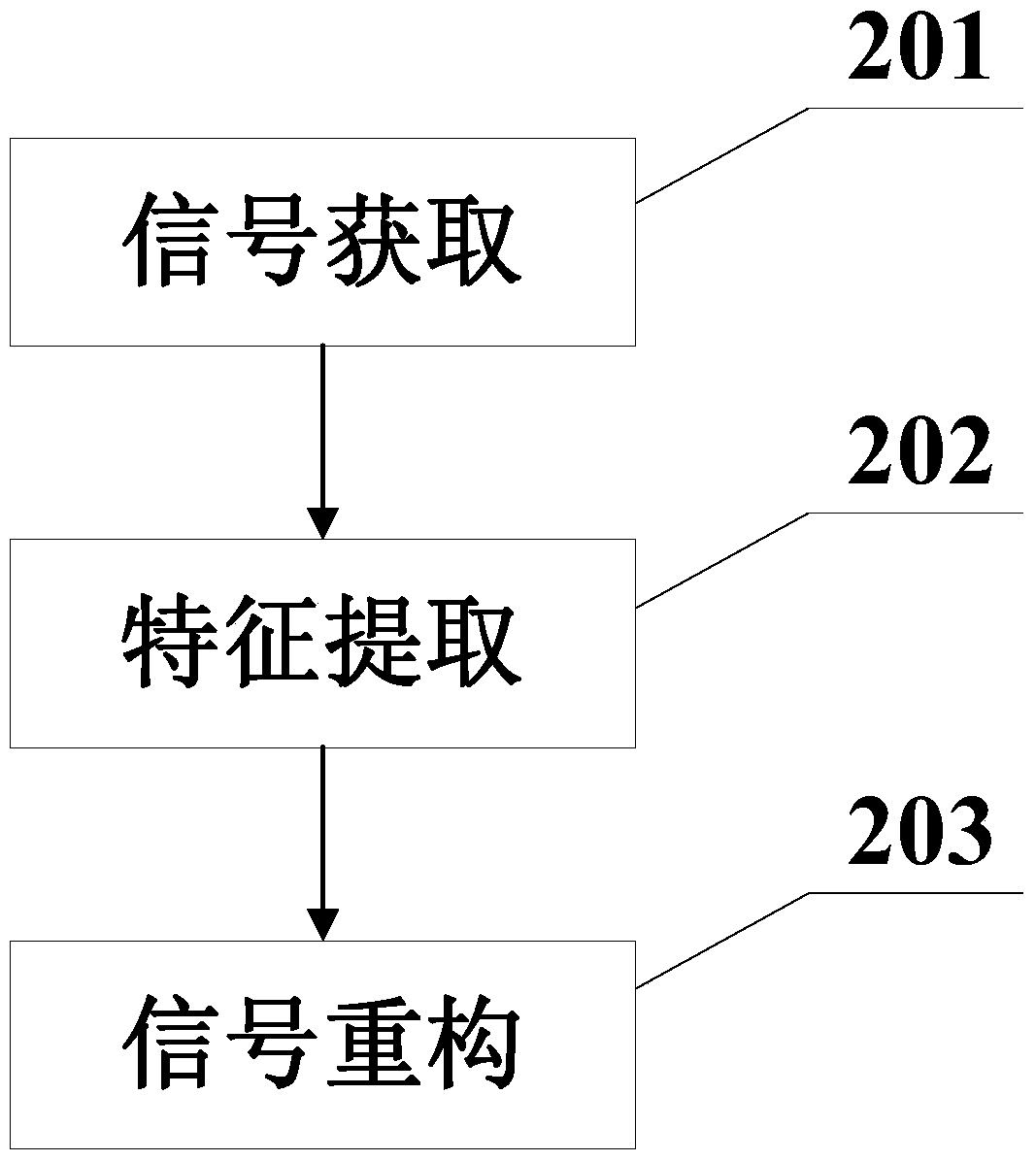

Method used


Examples

Specific Embodiment

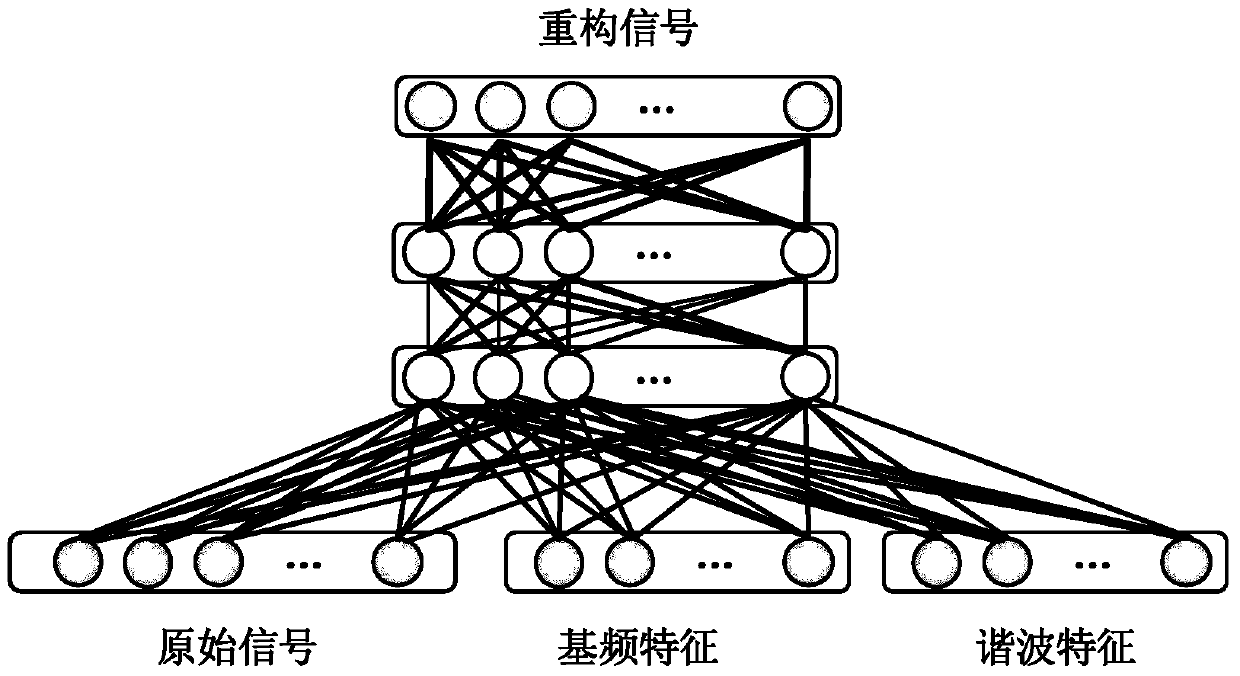

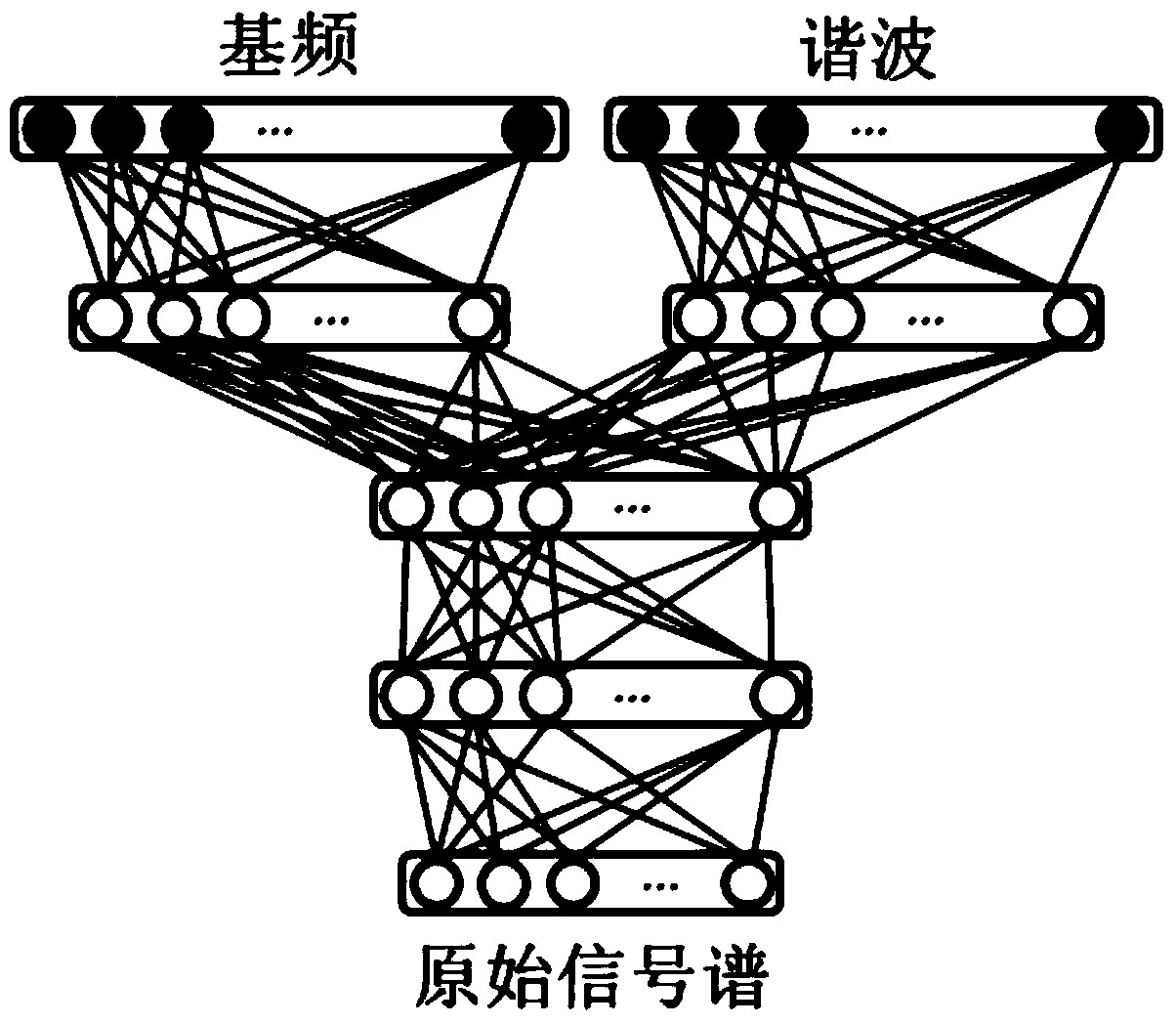

[0058] The autoencoder network used in this method has three hidden layers; the number of nodes in each layer is shown in Table 1. Of the input-layer nodes, 500 correspond to the frequency points of the original signal spectrum, 51 correspond to all frequencies within the value range of the fundamental frequency, and 5 correspond to the five harmonic orders from 3 to 7.
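The text gives only the node counts of the input layer (500 spectral points, 51 fundamental-frequency nodes, 5 harmonic-order nodes), not how the fundamental frequency and harmonic order are encoded. The minimal sketch below assumes a one-hot code for each; the function names `one_hot` and `build_input` and the integer `f0_index` (position of the fundamental frequency among the 51 candidates) are hypothetical and not taken from the source.

```python
from typing import List, Sequence

N_SPECTRUM = 500               # frequency points of the original signal spectrum (from the text)
N_F0 = 51                      # candidate fundamental frequencies (from the text)
HARMONIC_ORDERS = range(3, 8)  # harmonic orders 3..7, i.e. 5 nodes (from the text)


def one_hot(index: int, size: int) -> List[float]:
    """Return a list of `size` zeros with a 1.0 at position `index`."""
    if not 0 <= index < size:
        raise ValueError(f"index {index} out of range for size {size}")
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def build_input(spectrum: Sequence[float], f0_index: int, harmonic_order: int) -> List[float]:
    """Concatenate spectrum, F0 code and harmonic-order code into one input vector.

    ASSUMPTION: F0 and harmonic order are one-hot encoded; the source only
    states the node counts, not the encoding.
    """
    if len(spectrum) != N_SPECTRUM:
        raise ValueError(f"expected {N_SPECTRUM} spectral points, got {len(spectrum)}")
    orders = list(HARMONIC_ORDERS)
    if harmonic_order not in orders:
        raise ValueError(f"harmonic order must be in {orders[0]}..{orders[-1]}")
    f0_code = one_hot(f0_index, N_F0)
    order_code = one_hot(orders.index(harmonic_order), len(orders))
    return list(spectrum) + f0_code + order_code


if __name__ == "__main__":
    x = build_input([0.0] * N_SPECTRUM, f0_index=10, harmonic_order=5)
    print(len(x))  # 556
```

The resulting length, 500 + 51 + 5 = 556, matches the input-node count used for the networks in this section.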

[0059] Table 1

[0060]

| | Input layer | Hidden layer 1 | Hidden layer 2 | Hidden layer 3 | Output layer |
|---|---|---|---|---|---|
| Number of nodes | 500+51+5 | 200 | 50 | 200 | 500 |
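A forward pass through the layer sizes in Table 1 can be sketched as follows. Only the node counts come from the source; the sigmoid hidden units, linear output layer, uniform weight initialisation and the names `Autoencoder`, `forward` and `encode` are assumptions for illustration. Treating the 50-node middle layer as the extracted voiceprint feature is likewise an assumption suggested by the bottleneck shape, not something the excerpt states.

```python
import math
import random
from typing import List, Tuple

# Node counts from Table 1: input (500 spectrum + 51 F0 + 5 harmonic), three hidden layers, output.
LAYER_SIZES = [500 + 51 + 5, 200, 50, 200, 500]

Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Tuple[Matrix, List[float]]:
    """Uniform weights in [-1/sqrt(n_in), 1/sqrt(n_in)] and zero biases (an assumed scheme)."""
    limit = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(weights: Matrix, bias: List[float], x: List[float]) -> List[float]:
    """Affine map: weights @ x + bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


class Autoencoder:
    def __init__(self, sizes: List[int], seed: int = 0) -> None:
        rng = random.Random(seed)
        self.layers = [init_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]

    def forward(self, x: List[float]) -> List[List[float]]:
        """Return the activations of every layer, input included."""
        activations = [x]
        for i, (weights, bias) in enumerate(self.layers):
            z = dense(weights, bias, activations[-1])
            is_output = i == len(self.layers) - 1
            # ASSUMPTION: sigmoid hidden units, linear output layer.
            activations.append(z if is_output else [sigmoid(v) for v in z])
        return activations

    def encode(self, x: List[float]) -> List[float]:
        """Return the middle (bottleneck) layer: hidden layer 2, 50 nodes for LAYER_SIZES."""
        return self.forward(x)[2]


if __name__ == "__main__":
    ae = Autoencoder(LAYER_SIZES, seed=0)
    activations = ae.forward([0.5] * LAYER_SIZES[0])
    print([len(a) for a in activations])  # [556, 200, 50, 200, 500]
```

Note that the output layer has 500 nodes rather than 556, so under this reading the network reconstructs only the spectral part of its input.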

[0061] Using the training data, a single-hidden-layer neural network is trained with 556 input nodes, 500 output nodes and 100 hidden-layer nodes. Figure 5 shows the reconstruction error as a function of the number of iterations. It can be seen from Figure 5 that when the number of nodes is less than 100, the reconstruction error decreases exponent...
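Recording the reconstruction error per iteration, as plotted in Figure 5, can be sketched with a small single-hidden-layer network trained by full-batch gradient descent. The source specifies only the 556/100/500 node counts; the sigmoid hidden layer, linear output, mean-squared-error loss, learning rate and the choice of the first `n_out` inputs (the spectral part) as the reconstruction target are assumptions, and `train_single_hidden` is a hypothetical name. The demo uses tiny synthetic data so it runs quickly in pure Python; it does not reproduce the source's results.

```python
import math
import random
from typing import List


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_single_hidden(data: List[List[float]], n_out: int, n_hidden: int,
                        iterations: int, lr: float = 0.1, seed: int = 0) -> List[float]:
    """Train an n_in -> n_hidden -> n_out network to reconstruct x[:n_out].

    ASSUMPTIONS: sigmoid hidden layer, linear output, MSE loss, full-batch
    gradient descent. Returns the mean reconstruction error measured on the
    forward pass of each iteration (before that iteration's weight update).
    """
    rng = random.Random(seed)
    n_in, m = len(data[0]), len(data)
    w1 = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden)] for _ in range(n_out)]
    b2 = [0.0] * n_out
    errors: List[float] = []
    for _ in range(iterations):
        # Gradient accumulators for the whole batch.
        gw1 = [[0.0] * n_in for _ in range(n_hidden)]
        gb1 = [0.0] * n_hidden
        gw2 = [[0.0] * n_hidden for _ in range(n_out)]
        gb2 = [0.0] * n_out
        total = 0.0
        for x in data:
            target = x[:n_out]  # ASSUMPTION: target is the spectral part of the input
            h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
            y = [sum(w * hi for w, hi in zip(row, h)) + b for row, b in zip(w2, b2)]
            total += sum((yk - tk) ** 2 for yk, tk in zip(y, target)) / n_out
            dy = [2.0 * (yk - tk) / n_out for yk, tk in zip(y, target)]  # dL/dy
            dh = [0.0] * n_hidden
            for k in range(n_out):
                gb2[k] += dy[k]
                for j in range(n_hidden):
                    gw2[k][j] += dy[k] * h[j]
                    dh[j] += dy[k] * w2[k][j]
            for j in range(n_hidden):
                dz = dh[j] * h[j] * (1.0 - h[j])  # back through the sigmoid
                gb1[j] += dz
                for i in range(n_in):
                    gw1[j][i] += dz * x[i]
        # Gradient-descent step using the batch-averaged gradients.
        for k in range(n_out):
            b2[k] -= lr * gb2[k] / m
            for j in range(n_hidden):
                w2[k][j] -= lr * gw2[k][j] / m
        for j in range(n_hidden):
            b1[j] -= lr * gb1[j] / m
            for i in range(n_in):
                w1[j][i] -= lr * gw1[j][i] / m
        errors.append(total / m)
    return errors


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical toy data: 10 inputs, of which the first 8 play the role of the spectrum.
    data = [[rng.random() for _ in range(10)] for _ in range(6)]
    errs = train_single_hidden(data, n_out=8, n_hidden=4, iterations=30, lr=0.5)
    for it in (0, 9, 19, 29):
        print(f"iteration {it + 1}: reconstruction error {errs[it]:.4f}")
```

With the source's configuration one would call `train_single_hidden(data, n_out=500, n_hidden=100, ...)` on 556-dimensional inputs; a vectorised library would be preferable at that size.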


© 2025 PatSnap. All rights reserved.