Hybrid tone mapping method for machine vision

A tone mapping technique for machine vision, applicable to instruments, image data processing, computing, and related fields.

Embodiment Construction

[0034] The technical solution of this patent will be further described in detail below in conjunction with specific embodiments.

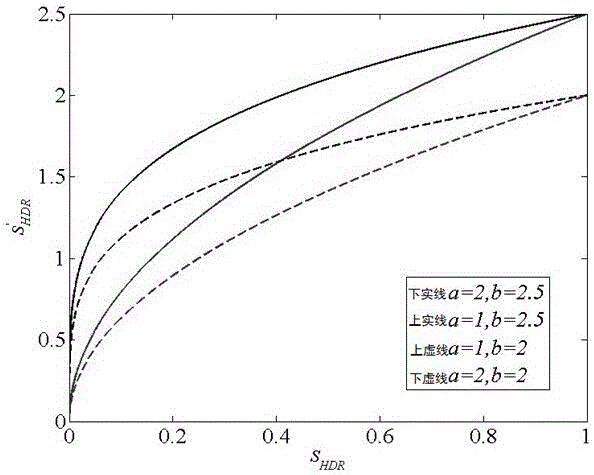

[0035] Referring to Figures 1-3, a hybrid tone mapping method usable in machine vision comprises the following steps:

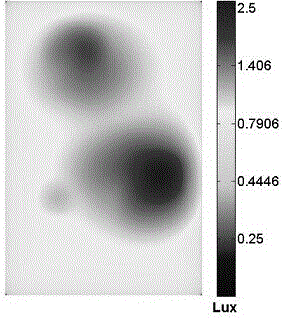

[0036] (1) Perform tone mapping on the input HDR image according to an empirical tone mapping model to obtain the mapped image $LDR_1$; the empirical tone mapping model includes a gradient-domain tone mapping method.

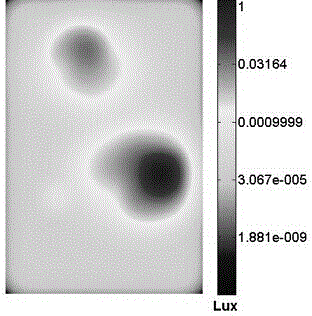

[0037] (2) Use a visual saliency map calculation model to compute the visual saliency map $S_{HDR}$ of the HDR image; the visual saliency map calculation model includes an image saliency map calculation method;

[0038] (3) In combination with the HDR image, perform a logarithmic transformation on the visual saliency map $S_{HDR}$ to obtain the visual saliency map $S_{HDR1}$:

[0039] $S_{HDR1} = \ln(S_{HDR})$ (Formula 1)
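The logarithmic transformation in (Formula 1) can be illustrated with a minimal sketch, assuming the saliency map is held as a non-negative NumPy array. The function name `log_saliency` and the `eps` clamp are hypothetical additions for illustration only; the source does not say how zero-valued saliency pixels are treated, so the clamp is an assumption made here to keep the logarithm finite.

```python
import numpy as np


def log_saliency(s_hdr: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Apply Formula 1, S_HDR1 = ln(S_HDR), to every pixel of the map.

    `eps` is an assumption, not part of the source: values below it are
    clamped so that ln(0) does not produce -inf.
    """
    s = np.asarray(s_hdr, dtype=np.float64)
    return np.log(np.maximum(s, eps))


# Toy 2x2 saliency map standing in for S_HDR (values are illustrative).
s_hdr = np.array([[1.0, np.e],
                  [0.0, 10.0]])
s_hdr1 = log_saliency(s_hdr)
print(s_hdr1)
```

The natural logarithm compresses the wide range of saliency values, which may make a subsequent quantization step less dominated by a few very salient pixels.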

[0040] (4) The visual saliency map $S_{HDR1}$ from (Formula 1) is quantized to obtain the visual salien...
