Multi-focus image fusing method based on dual-channel PCNN (Pulse Coupled Neural Network)

A multi-focus image fusion method, applicable to image enhancement, image data processing, instruments, and related fields. It addresses the problems of large approximation error, complicated fusion rules, and many parameters, and achieves a good representation of image singularities, stronger self-adaptiveness, and a simple network structure.

Examples

Embodiment 1

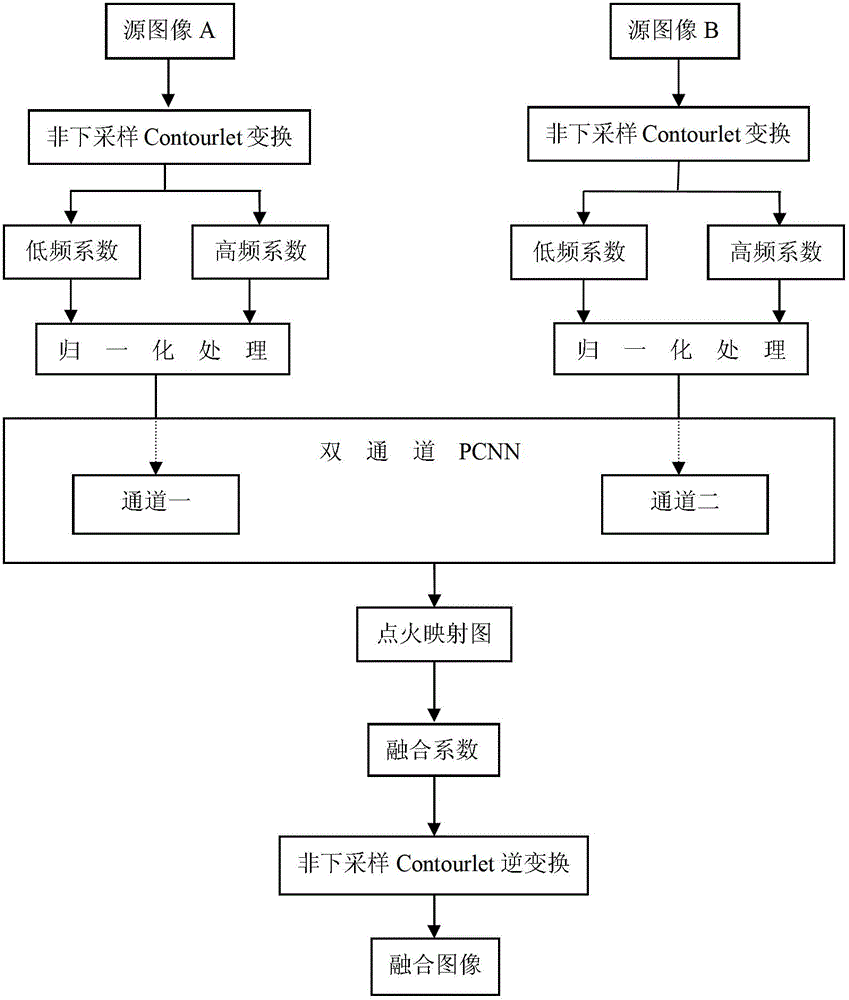

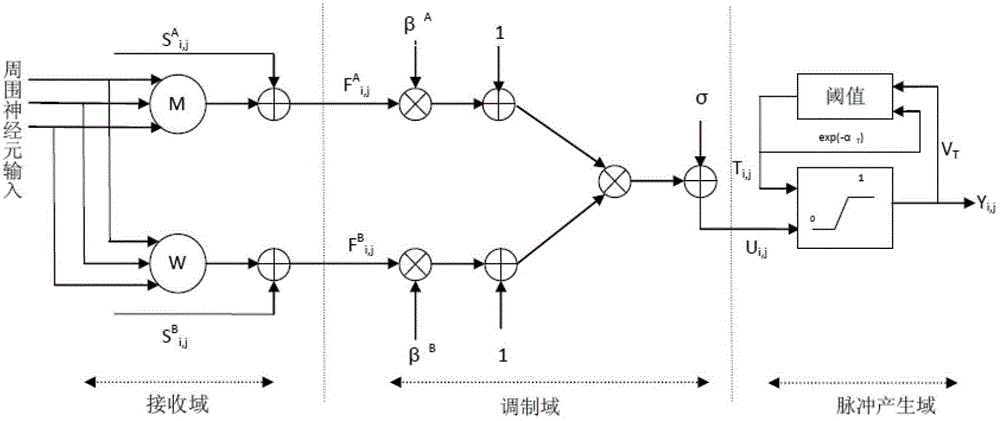

[0027] As shown in Figure 1, this embodiment includes the following steps:

[0028] Step 1: Apply the non-subsampled Contourlet transform separately to the left-focused original image I_A and the right-focused original image I_B, which have been registered and show the same content, to obtain the directional subband coefficient images in the non-subsampled (stationary) Contourlet transform domain;

[0029] In the non-subsampled Contourlet transform, the scale decomposition uses the CDF 9/7 pyramid wavelet filter and the directional decomposition uses the pkva directional filter. A two-level scale decomposition of each original image yields a low-pass component image and band-pass component images, that is, the low-frequency sub-images I_A-lf and I_B-lf and the corresponding high-frequency sub-images. The first level has 4 directional subbands and the second level has 8 directional subbands, where: k is the number of laye...
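The two-level, non-subsampled scale decomposition described above can be illustrated with a minimal sketch. This is not the patented transform: it assumes a simple à trous (undecimated) pyramid with a B3-spline kernel in place of the CDF 9/7 filter, and it omits the pkva directional filter bank entirely. The names `atrous_smooth` and `nonsubsampled_pyramid` and the random test image are hypothetical. It only shows the property that every sub-image keeps the size of the source image and that the low-pass and band-pass components sum back to the original.

```python
import numpy as np


def atrous_smooth(img, level):
    """Low-pass filter an image with a dilated ("with holes") separable kernel."""
    # Assumption: B3-spline kernel as a stand-in for the CDF 9/7 filter in the text.
    base = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0
    step = 2 ** level
    kernel = np.zeros((len(base) - 1) * step + 1)
    kernel[::step] = base  # insert zeros between taps instead of downsampling
    pad = len(kernel) // 2

    out = img.astype(float)
    for axis in (0, 1):
        widths = [(pad, pad) if a == axis else (0, 0) for a in (0, 1)]
        padded = np.pad(out, widths, mode="reflect")
        # "valid" convolution on the padded signal preserves the original length
        out = np.apply_along_axis(
            lambda v: np.convolve(v, kernel, mode="valid"), axis, padded
        )
    return out


def nonsubsampled_pyramid(img, levels=2):
    """Split an image into one low-pass image and `levels` band-pass images."""
    low = img.astype(float)
    bands = []
    for level in range(levels):
        smooth = atrous_smooth(low, level)
        bands.append(low - smooth)  # band-pass detail removed at this level
        low = smooth
    return low, bands


# Hypothetical stand-in for a registered source image such as I_A.
rng = np.random.default_rng(0)
I_A = rng.random((32, 32))

low_A, bands_A = nonsubsampled_pyramid(I_A, levels=2)
reconstructed = low_A + sum(bands_A)
```

In a full non-subsampled Contourlet transform, each band-pass image would additionally be passed through a directional filter bank (4 directions at the first level and 8 at the second, per the text); that stage is left out of this sketch.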

Embodiment 2

[0052] Embodiment 2 uses the same method as Embodiment 1, but with different experimental images.

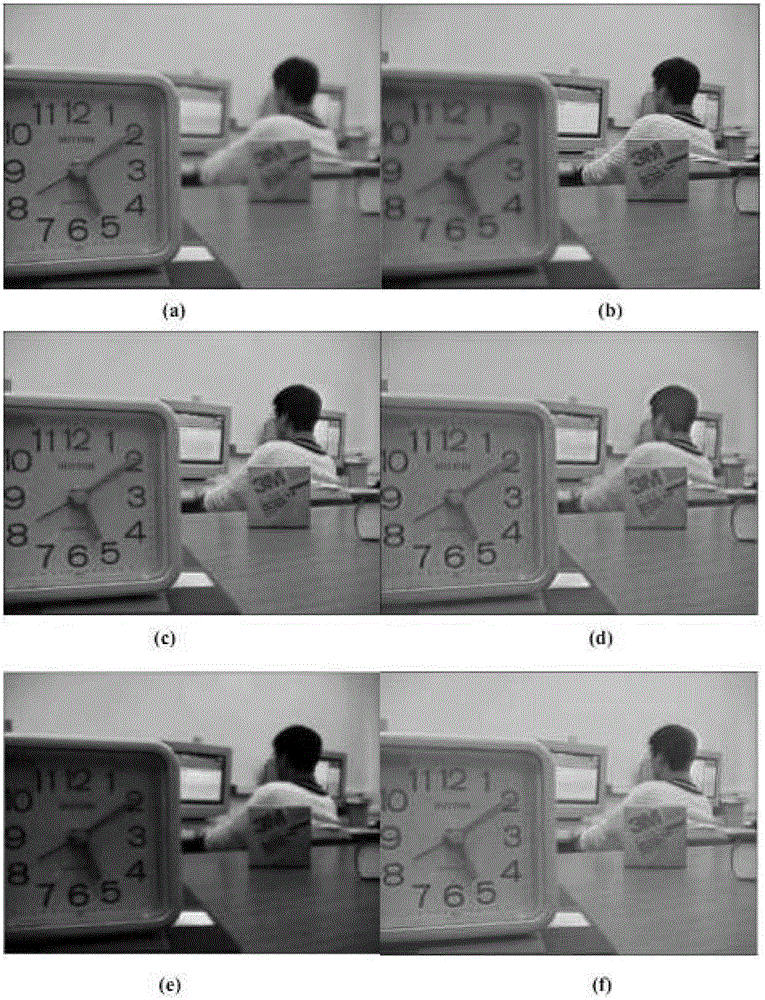

[0053] In summary, the comparison of effects in Figure 3 and Figure 4 shows that this method integrates the respective information of the multi-focus images more effectively: it enriches the background information of the image while preserving image detail as far as possible, which is consistent with the visual characteristics of the human eye. In terms of the fused image being faithful to the real information of the source images, the method of the present invention therefore clearly outperforms fusion based on the Laplacian pyramid transform, dual-channel PCNN, and PCNN.

[0054] Figure 3(c), (d), (e), and (f) show the fusion results of the four methods, and Table 1 lists the objective evaluation indicators of those results.

[0055] Table 1. Comparison of experimental results

[0056]

[0057] Such as Figure 4 (...
